Software People Stories Podcast: Exploring Cybersecurity Innovation with Jasson Casey

TL;DR

Full Transcript

Welcome to the Software People Stories. I'm Shiv.

I'm Chitra. And I'm Gayathi.

We bring you interesting untold stories of people associated with the creation or consumption of software-based solutions. You'll hear stories of what worked and sometimes what didn't.

You will also hear very personal experiences and insights that would trigger your thoughts and inspire you to do even greater things.

Before we jump into today's conversation, a special thanks to PM Power Consulting for encouraging and supporting this show right from the start.

With over a thousand person-years of collective experience, PM Power has helped many organizations, teams, and individuals chart out their own journeys of excellence and make progress. Thank you, PM Power.

Now let's move to today's episode.

In this episode of the Software People Stories, Jasson Casey, CEO and cofounder of Beyond Identity, shares his career journey and insights into the world of cybersecurity.

Jasson discusses his early fascination with understanding how things work, leading him into programming and eventually into cybersecurity.

He explains his work at Beyond Identity, emphasizing the importance of removing passwords to reduce security risk and incorporating strong device security.

Jasson highlights the significance of fundamental principles in cybersecurity and the evolving challenges with AI, including supply chain attacks and data poisoning.

He also covers the importance of trusted computing and deterministic controls for ensuring security.

Jasson provides career advice for early and mid career professionals in cybersecurity, stressing experimentation, curiosity, and continuous self education.

And finally, he shares his personal practices for managing stress and staying grounded, including exercise, sleep regulation, and maintaining strong personal relationships. Listen on.

Hi, Jasson. Welcome to the Software People Stories.

Hi. Thanks for having me.

Yeah. We usually start with a self-introduction: how you got into IT, or more specifically cybersecurity, what your career trajectory has been, and so on. Then we will take it from there.

Okay.

So, yeah, I'm Jasson Casey. I'm the CEO and cofounder of a company called Beyond Identity. How did I get into IT?

I mean, I've always been fascinated with how things work, and I remember as a kid taking apart little toys to try and figure out how they worked. At some point, I realized that I could actually make a toy behave slightly differently, and you could make your own toys. So I got into programming fairly early on, kind of just by playing.

The first job where I actually got to write software was to help pay for college.

I knew a certain amount of math and knew how to write C. I got an internship with a scientific computing company where the researchers didn't wanna bore themselves with, like, the basic geometries.

They were really advanced mathematicians, so they were like, hey, you go do this, and I thought it was the coolest thing ever. And how did I get into cybersecurity?

Ever since I started working truly full time, I've always worked on infrastructure. When I say infrastructure, think routers and switches that operate at the carrier level: not the ones in your closet at home, but big things. Security is a function of those. So I've always been enamored with it. It started with basic filtering, and then it got into these active controls.

Like, how do you handle denial of service? How do you handle distributed denial of service? And eventually, it got into analytics. Like, how do you tell if someone is running a covert C2 channel?

And then, you know, from there it got into: well, why are they doing this? What's going on that's causing them to do this? What exactly is going on between Russia and Europe that might make them wanna do this thing to this particular company? I would say the theme in all of it is curiosity, but it's all been very related, at least from my perspective.

Wonderful. Maybe I'll start by trying to understand what you said about loving the way you could make toys do things.

Yeah.

Is that somewhat of a hacker mindset or a builder? Yes.

So the old-school definition of hacker is almost more about just being curious and trying to figure out, like, how does something actually work? And can you make it do something it wasn't necessarily intended to do? Right. Almost for the joy of it, right?

So I guess in that mindset, sure. I mean, I've never really thought of myself as a hacker, but like, I'm definitely curious. I definitely want to understand how things work. I know this annoys my colleagues to this day.

But yeah, it was fun. And I know a lot of people are like this, right? When you finally figure it out, you get almost a shot of serotonin in your brain, right? You get that excitement: I figured it out, I made it do that thing.

And yeah, my parents didn't care for it because they didn't understand why I was destroying these things they bought. But for me, it was fun.

Yeah.

One thing that always intrigues me when talking to anybody working in cybersecurity is, of course, a couple of things. One is physical security. But beyond that, how do you stay one step ahead of the people who try to break things?

Well, there's always someone who you're not one step ahead of, otherwise there wouldn't be an industry.

But I think this answer really is similar to how do you stay one step ahead of your competition? How do you stay one step ahead of almost anyone?

And I think it comes back to fundamentals and kind of persistence in practice.

It's the early lessons that I remember having all the way back in like the late nineties about how does a computer actually work?

How does a program actually execute? How does it go from being a file to being a running process that's doing things? There really are fundamentals in how that happens. There really are fundamentals in how an operating system works. There really are fundamentals in how a compiler takes this thing that we trivially understand and turns it into something a machine understands.

And to this day, I swear it's still those fundamentals that I use and that I would argue the more advanced folks use when they're thinking about offense and defense.

It really is a game of fundamentals. A computer only works in a certain way, and an operating system only behaves in a very specific way; it has these regular constructs, and it doesn't matter if it's Mac or Linux or Windows or variations of those. Processes run, processes are loaded, processes can create other processes. They have to map into memory, they have privilege levels, and there are ways of interacting.

Shared libraries have to be readable and executable for certain things to happen.

And by the fact that they're readable and executable, you can actually inspect them without privilege.

It's a very fundamental thing, but it's kind of mind-blowing when you start wondering: wait a minute, has the system library that I'm relying on, like libc, been hooked by adversary malware, or maybe by an EDR?

It's an interesting question, and the answer still comes back to fundamentals.
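To make the libc question concrete: one common, though far from complete, heuristic is to look at the first bytes of an exported function as mapped in memory and check whether they have been overwritten with a jump, which is how many inline hooks (from malware and EDRs alike) redirect execution. This is a sketch assuming x86-64; it only inspects a byte string you have already read, the function name is hypothetical, and the patterns listed are illustrative rather than exhaustive.

```python
"""Heuristic check for inline hooks in a function prologue (x86-64 only).

A sketch: real detectors also compare against the on-disk library,
follow the jump target, and handle many more patch styles.
"""

# Byte sequences that commonly appear when a function's first bytes are
# patched to redirect execution. Illustrative, not exhaustive.
HOOK_PATTERNS = (
    bytes([0xE9]),          # jmp rel32
    bytes([0xFF, 0x25]),    # jmp qword ptr [rip+disp32]
    bytes([0x48, 0xB8]),    # mov rax, imm64 (usually followed by jmp rax)
    bytes([0x68]),          # push imm32 (push/ret trampoline)
)


def looks_hooked(prologue: bytes) -> bool:
    """Return True if the prologue starts with a known redirect pattern."""
    return any(prologue.startswith(p) for p in HOOK_PATTERNS)


if __name__ == "__main__":
    # endbr64; push rbp: a typical unpatched prologue on modern builds
    clean = bytes([0xF3, 0x0F, 0x1E, 0xFA, 0x55])
    # jmp rel32 to somewhere else: a classic 5-byte inline hook
    patched = bytes([0xE9, 0x10, 0x20, 0x30, 0x40])
    print(looks_hooked(clean))    # False
    print(looks_hooked(patched))  # True
```

Because the pages are readable, a check like this needs no special privilege, which is exactly the point made above.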

Yeah. Like you said, what I find really interesting is that we no longer build monolithic systems.

And at either build time or run time, there's a whole supply chain.

Services that you consume, services that you feed, etcetera.

And it's not just the typical, you know, SQL injection type of thing that used to be there. You have supply chain attacks. And now, with more AI-enabled things, people are talking about poisoning the data or trying to trick these systems into exhibiting behaviors they're not supposed to. Right? Which means it is no longer a completely predictable system.

Oh, for sure.

Right? So how does that play out?

So, again, I still think it comes back to fundamentals. Let's take AI for instance. What actually is AI under the hood? AI, at least the way most people use it, is next token prediction. It's really, really good next token prediction. And maybe there's emergent behavior in this next token prediction around being able to understand things like semantics as opposed to just syntax. But it still is next token prediction, which means it's probabilistic.

And it still operates on a baseline computer, which is actually doing deterministic steps. And so there's a lot of ways to actually analyze these things. There's a lot of ways to work with these things that still kind of brings you back to fundamental concepts.
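The "next token prediction is probabilistic, but it runs on deterministic hardware" point can be illustrated with a toy sampler. This is a sketch: the three-word vocabulary and the logits are made-up values, and real models score tens of thousands of tokens. The output is drawn from a distribution, yet with a fixed seed every step on the machine is reproducible.

```python
import math
import random


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Turn raw model scores into a probability distribution over tokens."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)                      # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(vocab: list[str], logits: list[float],
                      rng: random.Random, temperature: float = 1.0) -> str:
    """Draw one token according to the predicted probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


if __name__ == "__main__":
    vocab = ["pizza", "sushi", "tacos"]
    logits = [2.0, 1.0, 0.1]             # hypothetical model scores
    rng = random.Random(0)               # fixed seed: same "random" draws every run
    print(softmax(logits))
    print([sample_next_token(vocab, logits, rng) for _ in range(5)])
```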

One of the areas that we actually work on at Beyond around AI is this question of how do you know what's real? We think that's probably the question society is going to be most focused on for at least the foreseeable future. We have deepfakes of video. We have deepfakes of audio.

We have engines that can make you sound like anyone in email and text. So we've thought pretty deeply about this. There's this probabilistic engine that can be really good at mimicry. And there's a whole host of companies that build detectors, right?

You can use their software to figure out, wait a minute, is this image maybe a fake? But when we look at that and go back to fundamentals, our approach is: wait a minute, how does an adversarial network work? How would I actually train a generator? And the answer is, well, I'd use their detector to train my next generator.
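The arms-race concern can be shown with a toy: once an attacker can query a detector, its score becomes a training signal. This is a sketch, not a real GAN; it swaps in simple random search for gradient training, and `detector_score` is a hypothetical stand-in for any black-box detector that returns "how fake does this look".

```python
import random


def detector_score(sample: list[float]) -> float:
    """Hypothetical deepfake detector: higher means 'more likely fake'.

    Stands in for any queryable detector; here it just measures the squared
    distance from a 'real-looking' reference point.
    """
    reference = [0.5, 0.5, 0.5]
    return sum((s - r) ** 2 for s, r in zip(sample, reference))


def evade(sample: list[float], rng: random.Random, steps: int = 500) -> list[float]:
    """Improve a fake using nothing but the detector's feedback."""
    best = list(sample)
    best_score = detector_score(best)
    for _ in range(steps):
        candidate = [x + rng.gauss(0, 0.05) for x in best]   # small random tweak
        score = detector_score(candidate)
        if score < best_score:           # keep only changes the detector likes
            best, best_score = candidate, score
    return best


if __name__ == "__main__":
    rng = random.Random(42)
    fake = [0.9, 0.1, 0.8]
    print(round(detector_score(fake), 3))
    print(round(detector_score(evade(fake, rng)), 3))
```

The better the published detector, the better the signal it hands the attacker, which is the dynamic described above.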

So that kind of feels like an arms race. And then we started thinking, wait a minute. Maybe the answer to solving this problem isn't to come at a probabilistic tool with another probabilistic tool. Maybe the answer is to change the equation completely.

And the thing that really got us to think differently was a kind of counterthought: if AI is really around the corner, if everyone's gonna use AI, if AI is gonna explode, then my sister's gonna join a video call with a real-time AI blemish remover on her image. She's going to turn on real-time language translation so you can hear her in Spanish, even though she doesn't speak Spanish, right? So there are all these valid uses, and if AI is really going to explode, we need to assume all of this is going to happen. So what does it even mean to ask "is this a deepfake?" when it's a valid use?

And so we took that as basically a realization that the world is kind of asking the wrong question.

The right question isn't, is this a deepfake? The right question is, who authorized this? Whose device is this coming from?

And can I answer that deterministically? That's an example, I think, of going back to first principles, thinking about why the problem is hard, and realizing that maybe I have to completely change the equation of how I'm responding to it.
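The shift from "is this fake?" to "who authorized this, and from which device?" can be sketched as a tag computed on the device over the final media it emits, so that a verifier gets a yes/no answer instead of a probability. Assumptions, stated loudly: this uses HMAC with a shared per-device key purely because it is in Python's standard library; a real deployment would use asymmetric signatures with hardware-held keys. The function names and device IDs are hypothetical, and this is not Beyond Identity's implementation.

```python
import hashlib
import hmac
import os


def sign_frame(device_key: bytes, device_id: str, frame: bytes) -> bytes:
    """Tag the exact bytes a device emits (after any authorized AI filters)."""
    msg = device_id.encode() + b"|" + hashlib.sha256(frame).digest()
    return hmac.new(device_key, msg, hashlib.sha256).digest()


def verify_frame(device_key: bytes, device_id: str, frame: bytes, tag: bytes) -> bool:
    """Deterministic answer: did this exact frame come from this device?"""
    expected = sign_frame(device_key, device_id, frame)
    return hmac.compare_digest(expected, tag)   # constant-time comparison


if __name__ == "__main__":
    key = os.urandom(32)                         # hypothetical per-device secret
    frame = b"...video frame bytes, blemish remover applied..."
    tag = sign_frame(key, "sisters-laptop", frame)
    print(verify_frame(key, "sisters-laptop", frame, tag))          # True
    print(verify_frame(key, "sisters-laptop", frame + b"x", tag))   # False
```

Note that the blemish remover and translation are fine here: the question is not whether the pixels were altered, but whether the authorized device produced them.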

So when we talk about building these secure systems, going beyond just GenAI, even with earlier popular terms like big data or AI/ML, there's probably a lot of noise, like you said, whether it's deepfakes or something else.

And these need to operate pretty much in real time, right, whenever you need to do that.

So is that going to increase the cost of computing overall?

Is it going to increase the cost?

That's a good question. I don't know. Let's think about that real quick. If we look at the operating model of the big companies that run the frontier models, like OpenAI and Anthropic, we know that they still don't even make money at two hundred dollars a month for a pro subscription. Right?

So clearly the expense is immense. Right? The amount of energy you spend to answer a question like where should I go to dinner tonight on OpenAI is shockingly high. But, number one, the research is also starting to show us that we don't necessarily need these massive models to get intelligent answers.

We could actually use techniques like distillation: use a big model to generate sequences that you can then use to train a smaller-parameter model. Smaller models are basically more efficient. Where is the floor in that work?

We don't know yet, but like it's clearly progressing.
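Distillation can be made concrete with its most common loss. Note the swap: the speaker describes generating sequences from the big model and training on them; the sketch below shows the closely related logit-matching variant, where a small "student" is pushed toward the big "teacher's" softened output distribution via KL divergence. The logits and the temperature default are illustrative assumptions.

```python
import math


def softmax(logits: list[float], temperature: float) -> list[float]:
    """Temperature-softened probabilities (higher T spreads the mass out)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2.

    Training the student to minimize this makes it mimic the teacher.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2   # common scaling so gradient size stays comparable


if __name__ == "__main__":
    teacher = [3.0, 1.0, 0.2]      # hypothetical big-model scores
    print(distillation_loss(teacher, [3.0, 1.0, 0.2]))   # identical student
    print(distillation_loss(teacher, [0.2, 1.0, 3.0]))   # very different student
```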

There are also clear advances in computing: not just building processors that are wider and can execute more instructions in parallel, which clearly speeds this thing up, but also analog computing. So, again, first principles: if we think about what a large model is at its core, what operations are actually happening? There are multiplies, there are summations, and then there are comparisons against zero.

And I'd argue that's true most of the time. The activation function can differ from model to model, but generally, you multiply a matrix by some inputs, then you consolidate the answers for each of those rows, right? You sum them, and then you run those answers through an activation function. Generally you're asking, is it above zero or not, and you filter them. If we're doing that over and over again, and we think about what the circuit looks like that does that, it's really inefficient. It's a ton of gates to do that basic computation.
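The three operations described (multiply, sum each row, compare against zero) are one fully connected layer with a ReLU activation. A plain-Python sketch with arbitrary illustrative weights; each output is a multiply-accumulate followed by an "is it above zero?" check, which is the primitive that analog accelerators aim to compute more efficiently than digital gates.

```python
def relu(x: float) -> float:
    """Activation: pass positive values through, zero out the rest."""
    return x if x > 0 else 0.0


def dense_layer(weights: list[list[float]], inputs: list[float],
                bias: list[float]) -> list[float]:
    """One layer: multiply, sum each row, then compare against zero."""
    outputs = []
    for row, b in zip(weights, bias):
        total = sum(w * x for w, x in zip(row, inputs)) + b   # multiply-accumulate
        outputs.append(relu(total))
    return outputs


if __name__ == "__main__":
    W = [[1.0, -2.0], [0.5, 0.5]]   # illustrative weights
    x = [3.0, 1.0]
    b = [0.0, -3.0]
    print(dense_layer(W, x, b))     # [1.0, 0.0]
```

A large model repeats this pattern billions of times per answer, which is why the cost of the primitive matters so much.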

Analog computing is really, really effective at doing the exact same operations that I just described in a much faster and much more energy efficient way. And so, again, like, I think we're still at the beginning, but there's a ton of companies.

Mythic is the one I read about the most recently, although I'm sure there are others.

They're basically building analog computers or analog processors to both accelerate and make more energy efficient these primitives of model computation, or at least neural net computation. So I think there are a lot of avenues in engineering and science that are going to drive the cost down. But right now, it clearly is more expensive than anything we've seen.

Yeah. Yeah. No, my question is more focused on building a security layer, the cost of building that layer.

Particularly if we need to take action in real time, with so much noise to filter out, while also making sure that you are protected.

I think that's actually gonna drive more deterministic thinking. If you look at a lot of security controls today, they're probabilistic. Like, hey, if this violates these heuristics, generate an alarm and have someone respond.

And then there are some other things that are maybe a little bit better than that, but generally they're probabilistic techniques. They have some sort of noise threshold; above that threshold, they generate an alarm and a human is gonna respond.
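For contrast with the deterministic controls discussed next, this is roughly what a simple probabilistic control looks like: score each observation against a baseline and raise an alarm above a noise threshold. A sketch only; the z-score method, the threshold of 3, and the login counts are illustrative assumptions, not any particular product's logic.

```python
import statistics


def alarms(baseline: list[float], observations: list[float],
           threshold: float = 3.0) -> list[int]:
    """Return indices of observations whose z-score exceeds the threshold."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)        # sample standard deviation
    return [i for i, x in enumerate(observations)
            if abs(x - mean) / stdev > threshold]


if __name__ == "__main__":
    logins_per_hour = [10, 12, 11, 9, 10, 11, 12, 10]   # hypothetical baseline
    print(alarms(logins_per_hour, [11, 40, 13]))       # [1]
```

Everything close to the threshold is a judgment call, and everything above it lands on a human's queue, which is the pacing problem the conversation turns to next.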

And again, there's a host of companies that will offer you AI enabled incident response, so you can kind of respond at the speed of the adversary. And I think there's a place for that.

Personally, I'm more interested in that as a fundamentals question. If the adversary is setting the pace of the attack and we jump to these AI incident responders, doing the same thing they're doing, then they're now controlling our response. Maybe that is the right solution, but it makes me want to at least take a step back for a minute and ask, well, how do we change the game? Is there something that we could do that's different?

Could we change the equation so that it almost doesn't matter how much computation they have or what they can throw at us? Is there a more deterministic solution? I think we've found something like that in trusted computing and some of the identity-based defense that we talk about here at Beyond Identity. Take phishing, for instance.

Phishing works really, really well, and it scales with a threat actor's ability to build carefully crafted messages and send them out.

With AI, the scale is completely gonna change.

Yeah.

Right? So if we look at the statistics today, before AI even showed up, the average company with training fails, or at least clicks through on, a phish four percent of the time.

It turns out the average company without training does it twenty percent of the time. Let's do some basic mathematics. How many unique phishes have to happen at that failure rate before a company is absolutely gonna have to deal with a security incident? It's anywhere from four for an untrained company to, like, twelve for a trained company. So, number one, that basically means you're always dealing with security incidents. Right? Now let's introduce AI that's gonna make those phishes perfectly crafted, where you can't even tell the difference.
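The back-of-the-envelope math can be reproduced with a simple model, assuming each phish is an independent trial at the quoted click rates (20% untrained, 4% trained) and using "an incident is more likely than not" as the bar. Both the independence assumption and the 50% bar are choices made for this sketch; the probability of at least one click in n phishes is 1 - (1 - p)^n.

```python
def prob_at_least_one_click(p: float, n: int) -> float:
    """Chance that at least one of n independent phishes gets a click."""
    return 1 - (1 - p) ** n


def phishes_until_likely_incident(p: float, confidence: float = 0.5) -> int:
    """Smallest n at which an incident reaches the given probability."""
    n = 1
    while prob_at_least_one_click(p, n) < confidence:
        n += 1
    return n


if __name__ == "__main__":
    for label, rate in [("untrained", 0.20), ("trained", 0.04)]:
        print(label, phishes_until_likely_incident(rate))
```

Under these assumptions the untrained case crosses 50% at four phishes and the trained case at seventeen; either way, a modest campaign all but guarantees a click.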

And so our argument is, if you wanna play that game, you're letting the adversary decide the pacing and the space where you're going to interact with them, and we think that's a losing battle. What we argue is, if you go back to fundamentals, you can actually use some of the trusted computing concepts that already exist in your environment to say: hey, even if people click on the bad links, how do I ensure nothing bad actually happens?

And, you know, we can get into the details of how all that works, but for now, I'll just say there's a way for you to change your authentication so that even if your people always click on the bad link, the session still can't be hijacked.
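One well-known way to get "they clicked, but the session still can't be hijacked" is origin binding, the idea behind WebAuthn/FIDO2: the credential never leaves the device, and its response covers the origin the browser actually connected to, so a response relayed through a look-alike phishing site fails at the real site. A sketch under stated assumptions: HMAC with a shared key stands in for the public-key signatures those standards use, the function names and domains are hypothetical, and this is not a description of Beyond Identity's product.

```python
import hashlib
import hmac
import os


def authenticator_response(device_key: bytes, challenge: bytes, origin: str) -> bytes:
    """Device side: cover the server's challenge AND the origin the browser saw."""
    return hmac.new(device_key, challenge + origin.encode(), hashlib.sha256).digest()


def server_verify(device_key: bytes, challenge: bytes, expected_origin: str,
                  response: bytes) -> bool:
    """Server side: accept only responses bound to its own origin."""
    expected = authenticator_response(device_key, challenge, expected_origin)
    return hmac.compare_digest(expected, response)


if __name__ == "__main__":
    key = os.urandom(32)          # never typed, never shown, so nothing to phish
    challenge = os.urandom(16)    # fresh per login attempt
    # The user clicked a phishing link; the browser reports the look-alike origin.
    relayed = authenticator_response(key, challenge, "https://examp1e-login.test")
    print(server_verify(key, challenge, "https://example.com", relayed))  # False
```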

What about the more defensive things like honeypots? Is that a space where you could be a little more proactive?

Possibly. Right?

So a honeypot is really good at collecting new samples. You then put a reverse engineer on that sample to figure out how it works. If your reverse engineer is good enough, they can do something called sinkholing, right? If you can figure out the C2 mechanism of the sample, then generally you can get some of those samples to connect back into your sinkhole, and then you get telemetry. Maybe AI can speed that up.
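Once reverse engineering recovers the domains a sample calls for C2, sinkholing typically means answering those lookups with an address the defender controls, so infected hosts check in there and leave telemetry. A toy sketch: the class, domains, and addresses are hypothetical (the IPs come from documentation ranges), and real sinkholes operate at the registrar or resolver level rather than in a Python dictionary. As noted next in the conversation, living-off-the-land C2 often gives you no such domain to redirect.

```python
from typing import Optional

SINKHOLE = "192.0.2.10"   # hypothetical defender address (TEST-NET-1 range)


class SinkholeResolver:
    """Toy resolver that answers known C2 domains with the sinkhole address."""

    def __init__(self, upstream: dict[str, str], c2_domains: set[str]):
        self.upstream = upstream                  # stand-in for real DNS answers
        self.c2_domains = c2_domains              # learned from reverse engineering
        self.hits: list[tuple[str, str]] = []     # (client, domain) telemetry

    def resolve(self, client: str, domain: str) -> Optional[str]:
        if domain in self.c2_domains:
            self.hits.append((client, domain))    # an infected host just checked in
            return SINKHOLE
        return self.upstream.get(domain)


if __name__ == "__main__":
    r = SinkholeResolver({"example.com": "198.51.100.7"}, {"bad-c2.test"})
    print(r.resolve("10.0.0.5", "bad-c2.test"))   # 192.0.2.10
    print(r.hits)                                 # [('10.0.0.5', 'bad-c2.test')]
```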

I'm sure there are some avenues for that to actually work in that industry. I do question it, though: EDRs are really, really good at detecting malware today, so the adversaries have shifted a lot of their tactics toward living off the land. A lot of their C2 now is based on existing things that have to occur in an enterprise for the enterprise to work. So while you could certainly apply AI to speeding up that process, from honeypot to reverse engineer to sinkholing the C2, I don't know how effective that would be at broad scale because of the shift in adversarial tactics.

Some of the things that I think are really interesting right now: Russia was running this campaign focused on diplomatic corps. I think Microsoft's calling it Secret Blizzard.

Their persistence mechanism is kind of ingenious. They basically drop a certificate under their control into the infected machine's root store, and they control ISPs in Russia, Belarus, and Russian-influenced territories. So they intercept TLS connections, and because their certificate is in the root store, they can sign for the name and go about their business. I think they have a variation where they do it with a browser plugin as well.

So that's not something that you're going to sinkhole, right? But it's persistent and it's an interesting C2 channel.

So I think this is a good example of asking how to change the game and looking for more deterministic controls.

The interesting question there is, wait a minute, why is device posture not part of authentication?

When you get on an airplane, you have to prove that you're the right person and you have no guns, knives, explosives.

But in security and IT, for some reason, the security questions and the IT questions usually only come together at the C level of the company.

When a computer is executing some sort of transaction, what if part of that transaction wasn't just "prove that you have the right key," but also "give me a list of what's in your root trust store right now," so that I actually understand the level of compromise that's even possible? Give me a listing of the browser plugins that are actually installed in your browser.
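The posture questions above ("what's in your root store, which plugins are installed?") can be sketched as a deterministic check evaluated during the transaction. Assumptions: the function names and plugin names are hypothetical, the certificates are placeholder bytes standing in for DER-encoded certs, and how the device reports this data (and why the server should trust the report, for example via hardware attestation) is outside the sketch.

```python
import hashlib


def fingerprint(cert_der: bytes) -> str:
    """SHA-256 fingerprint of a certificate's DER bytes."""
    return hashlib.sha256(cert_der).hexdigest()


def unexpected_roots(root_store: list[bytes], approved: set[str]) -> list[str]:
    """Fingerprints of installed root certs that are not on the approved list."""
    return [fp for fp in map(fingerprint, root_store) if fp not in approved]


def posture_ok(root_store: list[bytes], approved: set[str],
               plugins: set[str], allowed_plugins: set[str]) -> bool:
    """Pass only if every root is approved and every plugin is allowed."""
    return not unexpected_roots(root_store, approved) and plugins <= allowed_plugins


if __name__ == "__main__":
    vendor_root = b"placeholder: vendor root cert"
    rogue_root = b"placeholder: attacker-dropped root cert"
    approved = {fingerprint(vendor_root)}
    print(posture_ok([vendor_root], approved, {"pw-manager"}, {"pw-manager"}))   # True
    print(posture_ok([vendor_root, rogue_root], approved, set(), set()))         # False
```

A root dropped by an interception campaign like the one described above would fail this check on the very next transaction, with no threshold to tune.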

So I think there are these adjacent but different questions that we could ask that give us more deterministic controls to handle both the adversarial threat and its evolution, including in the face of AI. I think AI is our biggest challenge as security people and as defenders. And I think the easy button, and the easy mistake we can all make, is to reach for AI to combat AI, right? Sending robots to fight robots feels reactionary.

And maybe I'm wrong, maybe this is the right approach, but maybe this is a moment where we actually have to rethink our security controls and try to seek out more deterministic controls rather than probabilistic ones.

Still staying on the topic of AI, you know, with this whole fancy for, let's say, vibe coding, software development is moving away from centralized control by more enterprise-class development teams to something that someone creates and then just deploys on the corporate network.

Yeah.

How do you think that is going to play out or what can be done to have some guardrails for those?

So, number one, I'm a big fan of exploration and experimentation and doing that sort of thing. I do it myself. I've got a small project right now where I'm really invested and interested in certain types of low-level attacks on embedded systems, and I'm using an AI assistant to help me go faster. But I'm not asking it to build an entire system. I'm treating it like a junior engineer while I'm the senior engineer: I'm specifying interfaces and types and asking it to fill in the gaps.

This is how I feel confident and comfortable, and I understand what I'm getting, and I have some way of checking it. I know there's other ways of interacting with it where you just kind of ask it for, hey, build me a utility that does this.

That makes me a little bit more nervous, and part of it has to do with just my personality. Part of it has to do with where I work in the stack. If you don't actually understand what's happening, you can't really vouch for the safety of what's happening.

Maybe you work in an area where security doesn't matter, and that's fine.

For example, if I'm just trying to learn quickly and build little experiments, it's totally fine and it's totally acceptable. But if you're gonna deploy something into production, how do you actually know, how do you prove to yourself that you have the absence of all these classes of vulnerabilities? I don't think you can actually let yourself not think about these things, and I don't think you can just say, I'm gonna look that up later.

I still think experience matters, and that's how you will actually be a better vibe coder: by treating the AI assistant as a very directed tool that's really helping you stitch together these gadgets that you've come up with in your mind.

Clearly, it's also an opportunity for more, again, these more deterministic controls. Like I think trusted computing is gonna have a lot to offer the world with the more AI generated code that we actually put out there.

I also think it's gonna open the door for a little bit more responsibility on the programmer.

I may not be able to analyze all of your code, but I'm gonna require you to sign your code cryptographically, so that when I'm building, I have traceability exactly back to you, and you'll bear some sort of responsibility for what that signature guarantees.

And we certainly see that in defense and government applications already.
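Requiring signed code rests on asymmetric signatures: the author signs an artifact's hash with a private key, and anyone building with it verifies using only the public key, which gives the traceability described. To stay within the standard library, this sketch uses textbook RSA with tiny classroom parameters. It is completely insecure and for illustration only; real pipelines use vetted algorithms such as Ed25519 or ECDSA through established tooling. All names are hypothetical.

```python
import hashlib

# Textbook RSA with tiny, well-known toy parameters. INSECURE: illustration only.
P, Q = 61, 53
N = P * Q        # 3233, public modulus
E = 17           # public exponent
D = 2753         # private exponent: (E * D) % ((P - 1) * (Q - 1)) == 1


def digest_mod_n(artifact: bytes) -> int:
    """Hash the build artifact and reduce it into the toy modulus."""
    return int.from_bytes(hashlib.sha256(artifact).digest(), "big") % N


def sign(artifact: bytes) -> int:
    """Author side: only the holder of D can produce this value."""
    return pow(digest_mod_n(artifact), D, N)


def verify(artifact: bytes, signature: int) -> bool:
    """Builder side: needs only the public (N, E) to trace code to its author."""
    return pow(signature, E, N) == digest_mod_n(artifact)


if __name__ == "__main__":
    release = b"contents of release-1.0.tar.gz"   # hypothetical artifact
    sig = sign(release)
    print(verify(release, sig))                   # True
```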

Yeah, so that was one thing. Recently, I was talking to a team working in the aerospace area. Once they put something out there, they load a lot of power into it in terms of being able to remotely monitor, control, make decisions, maybe issue alerts, and so on. So their challenge was how to protect those kinds of systems.

Since you mentioned embedded systems, I was wondering how one would do that when it's impossible to go physically and do something to the device. There's also a lag, even if it's only a few seconds. Even after you detect what you need to do, you know, there is a lag. Right?

Yeah. Yeah.

So what does one do in those kinds of systems?

There are a couple of things. The area that I think is most interesting for that kind of work is called trusted computing. It's a formal research area in academia, but it's also made its way into aerospace and into defense. There are a couple of different angles on trusted computing, but at a high level, it's: how do I know, at compile time or at runtime, that the thing I think is true is actually true? I'll give you an example. There's a little coprocessor called a TPM, a Trusted Platform Module.

A TPM is used by Microsoft in the secure boot process, so that Microsoft can know it's only going to decrypt its disks if everything, from the original ROM loader to the boot loader to the kernel to the kernel-loaded drivers, has been unmodified since Microsoft produced it. The way the TPM does this is it has these little registers. You can't write to these registers directly; only this processor can update them. So when you create a key, you can staple that key to the register values.

And only if those registers have those values will that key work. So Microsoft creates their encryption and decryption keys, and there's this other operation called extend.

As the system is booting, all the way from the boot ROM to the UEFI boot process to the boot loader to the kernel itself, it's calling extend over the image of what it's about to map into memory. So you get this kind of linear, stable sequence.

And so if I were to change any of that and then ask this coprocessor for a decryption, because the register values are not correct, the key wouldn't work.
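The extend-and-seal mechanism can be simulated in a few lines. A sketch only: a real TPM does this in hardware, maintains several PCRs and banks, and seals with policies over selected PCRs; the function and class names here are hypothetical. The property shown, that each extend computes SHA-256(old value || new measurement), so the final value depends on every component and its order, is how TPM PCR extension works.

```python
import hashlib
from typing import Optional

PCR_SIZE = 32   # SHA-256 bank


def extend(pcr: bytes, component: bytes) -> bytes:
    """PCR_new = SHA256(PCR_old || SHA256(component)); there is no 'set' operation."""
    return hashlib.sha256(pcr + hashlib.sha256(component).digest()).digest()


def measure_boot(components: list[bytes]) -> bytes:
    pcr = bytes(PCR_SIZE)             # PCRs start at all zeros on reset
    for component in components:      # boot ROM -> UEFI -> boot loader -> kernel ...
        pcr = extend(pcr, component)
    return pcr


class ToySealedKey:
    """Release a secret only when the PCR matches the value it was sealed to."""

    def __init__(self, secret: bytes, sealed_pcr: bytes):
        self._secret = secret
        self._sealed_pcr = sealed_pcr

    def unseal(self, current_pcr: bytes) -> Optional[bytes]:
        return self._secret if current_pcr == self._sealed_pcr else None


if __name__ == "__main__":
    chain = [b"uefi", b"bootloader", b"kernel"]
    disk_key = ToySealedKey(b"disk-key", measure_boot(chain))
    print(disk_key.unseal(measure_boot(chain)))                              # b'disk-key'
    print(disk_key.unseal(measure_boot([b"uefi", b"evil-loader", b"kernel"])))  # None
```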

So that's a small example of hardware-based trusted computing that software can leverage to understand that nothing has been modified in its ancestor line and establish some level of trustworthiness. So there are things like that that operate at the scale and speed of a processor and actually give you fairly strong controls. The assumption there is that the foundry that printed the chip has not been compromised, which maybe it has been, but that's a high bar.

I wanna do a little segue. We've been talking more about technology, and you're very comfortable with this; you definitely speak with a lot of experience and authority. This next part is more about Beyond Identity.

Okay. Starting with: what was the genesis of Beyond Identity, and how did you jump into it?

Yeah. The genesis of Beyond Identity, it's pretty interesting.

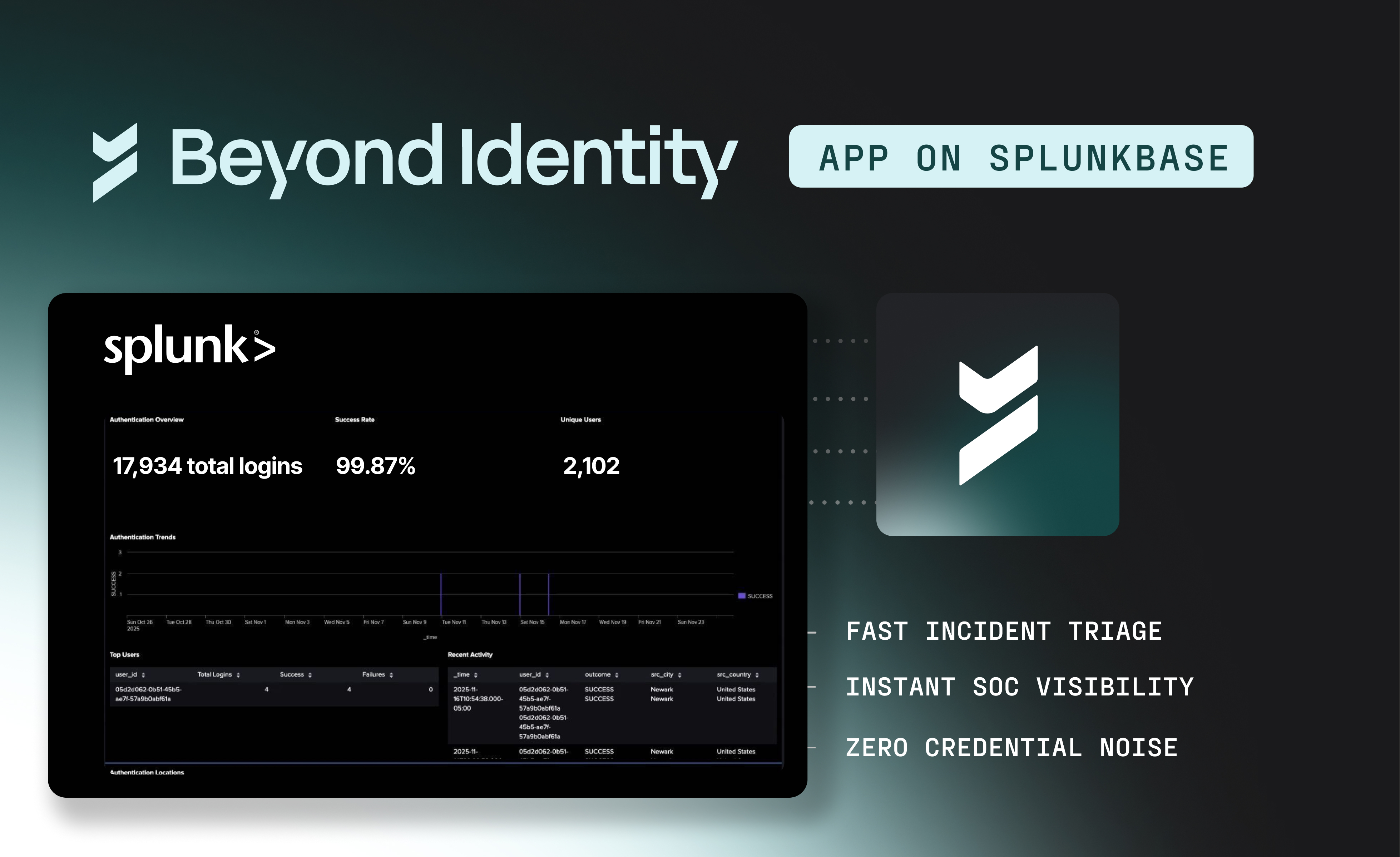

So I used to work at a company called Security Scorecard. I was their CTO, and I ran the research and development department. You can think of Security Scorecard as a cybersecurity intelligence company. Right? We were basically building the equivalent of a credit score for a company, but for their cybersecurity.

And so a lot of the work that I did was around how do we look at all of the cyber signals that a company gives off that an attacker can see? And turn that into a score that's predictive of whether a bad thing will happen to them.

Through that, we had a partnership with a bunch of cyber insurance vendors, so we could actually see which companies were making claims or getting payouts.

And so we did some studies around the question: of everything we see, what's most predictive of a future breach?

And the strongest signals were around password management, multifactor, and local endpoint patching. So like, is the computer that's gaining the access, is it compromised or is it safe?

Or could it easily be compromised? I left Scorecard in twenty nineteen, and I wanted to work on that problem. I thought that was an interesting insight.

And if that's the number one cause, maybe there was something I could do there.

But I was focused more on the analytics side of things.

At that time, I got an email from someone who worked for, or claimed to work for, Jim Clark.

This was the Jim Clark who created Netscape. This is the Jim Clark who created Silicon Graphics. This is the Jim Clark who's one of the gods of Silicon Valley, right? We've all read about him.

And so of course, my response is this must be spam and I deleted it.

And I got another email two days later and it was basically a repetition of the same thing. It was, hey, I'm such and such. I work with Jim. Jim's wanting to do this thing again. And so I'm thinking like, all right, I'll respond.

And so I responded to the guy, and I'll spare you the details, but sure enough, it was the real Jim. Jim lived in New York.

Jim was older, but it turns out Jim's still an engineer at heart, and he can't stop tinkering. He and a couple of other people had been building this authenticator for their homes and their boats. They had figured out how to do away with the password, because they thought the password was kind of a stupid concept. It was easy to steal.

It was hard to use. People always forget it. What's with these stupid password policies of twelve unique digits, and I have to change it? Jim's a UX guy, and in his mind, this is just asinine.

It's stupid. Can't we do better?

And he wanted to turn this into a full-time startup, and he wanted someone with a security background to help them get it started.

And I looked at what they were doing and I said, Hey, that's interesting.

But the thing that clicked in my brain was the number one cause of incidents for a company is really this identity system, how this initial authentication is handled. And so he's figured out a way to remove the password. So if there's no password, if there's nothing to steal, the credential theft goes away.

The other thing they were doing, for convenience, was running it on the device you were working from. So you didn't have to go get a second device, right? It's a better user experience.

But when I saw that, what I got excited by is like, well, wait a minute. It's a strong credential. It can't be stolen. I'm on the device I'm working from. So I could actually detect man in the middle.

And I could incorporate a small sensor, kind of like an EDR sensor into the authenticator itself, and we could answer that safety question, like who are you, on what device are you, and is your device safe enough for what you're asking for? And so I got excited and that's how we got started back in twenty nineteen.
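The three questions (who are you, on what device, and is that device safe enough for what you're asking for?) can be sketched as a single access decision. Assumptions, stated loudly: the field names, the two posture signals, and the low/high sensitivity tiers are hypothetical choices for illustration, not Beyond Identity's actual policy model.

```python
from dataclasses import dataclass


@dataclass
class AccessRequest:
    user_verified: bool         # who are you: biometric/PIN unlocked the credential
    key_on_device: bool         # on what device: key is bound to this hardware
    device_patched: bool        # posture signal from the on-device sensor
    disk_encrypted: bool        # another posture signal
    resource_sensitivity: str   # hypothetical tiers: "low" or "high"


def decide(req: AccessRequest) -> bool:
    """Is this user, on this device, safe enough for what they're asking for?"""
    if not (req.user_verified and req.key_on_device):
        return False                       # identity and device binding are mandatory
    if req.resource_sensitivity == "high":
        return req.device_patched and req.disk_encrypted
    return req.device_patched


if __name__ == "__main__":
    print(decide(AccessRequest(True, True, True, True, "high")))    # True
    print(decide(AccessRequest(True, True, True, False, "high")))   # False
```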

So what's the "beyond" part in Beyond Identity?

We wanna take you beyond the mistakes that they created when they started Netscape in nineteen ninety four.

So, you know, Jim and a couple of other personalities involved go back to those days. Yeah, Taher Elgamal.

He actually created SSL or was part of the team that helped create SSL at Netscape.

And they thought they came up with a fairly elegant solution for proving server origin and server names, but they kind of punted on the client side.

And they felt like they stuck the world with passwords, which was kind of a blemish.

So, yeah, Beyond Identity was about fixing that blemish from the nineties and letting people go beyond the old way and get rid of these security problems. How do we make things both effortless and secure, whether it's consumers buying things in an application, right?

Or just getting to work remotely.

Yeah, and I was curious whether it was inspired by "to infinity and beyond" from Toy Story.

I don't know if Buzz Lightyear was kicking around in anyone's brain, but it was one night when we were sitting around at Jim's house, a handful of us.

Jim was his usual gracious self sharing these wines that I would probably never even see, let alone taste. And throughout the night we converged on Beyond Identity as the name.

It was really about going beyond these traditional methods and the legacy history.

Nice. So a related question is also, how has this transition from being a CTO to a CEO been for you?

Yeah.

That's interesting. There are so many answers to that question. It's been fun, is probably the first thing. It's been wild.

You really are in charge of everything, and you basically have to do whatever's not getting done.

I've never had any problem with working whatever job needed to work to get the team over the finish line. So it wasn't necessarily hard in that regard.

But every now and then, you do leave something behind, right?

You can be technical still, but you're not gonna work a technical project, right? That's not your job. Your job is to move the company forward. So there was an adjustment in kind of figuring out what the job is.

The other thing that was probably very clear is that, you know, TJ ran the company for the first four years. TJ had a very specific strategy and way about himself. TJ is also another Valley legend, and I'm not. The things that TJ is comfortable doing and planning, it's just, you know, we're different people.

So it took me a while to realize and develop my own strategy, how to execute it, and then how to get the team to execute it. That was probably my first real learning in the job, and it was pretty interesting.

And the second is I thought I traveled a lot before.

I've put my old travel record to shame. You're on the road a lot. You're always meeting with customers, prospects, partners, investors, future investors.

It can be grueling, but there's ways of actually making it fun as well.

The people that care the most about protecting their organizations typically have the most to lose, which means they're very interesting organizations, they're very interesting people.

And as we talked about at the start of the podcast, I'm a pretty curious person. I really like knowing why.

And a lot of this customer interaction has actually filled the curiosity outlet I lost from running R&D. I have to have someone else run R&D now. I've been able to replace it with understanding customer problems, customer setups, and the macroeconomic and geopolitical pressures that are causing them either to be in a situation or to want to get to a certain situation. So that's been a lot of fun, but it's a very, very different job.

Yeah. Okay. Before we get to that, I have one question based on what you said.

What career tips would you have for, say, two segments of people?

One is those who are considering a career in cybersecurity, whether they are just now entering IT or already working in it. The second category, particularly among the listeners of this podcast, is people who are mid-career, who either want to do something different or feel that they've reached the end of what they can do in their current roles.

If they were to consider security as an area, what would be your advice?

So we'll start with early career.

So the most important thing, I think, for an early career person is experimentation.

By definition, you don't have experience, right? So you're not qualified to work on anything that requires experience.

And it can feel really disheartening, because no one's gonna hire you, even in a junior role, because you don't have the experience to get started.

So the way you break that logjam is you just start, right?

We live in a time in world history where you don't have to spend a ton of money to gain experience. You don't have to spend a ton of money to build a workshop to then learn how to use a welder.

You probably already have a computer. You can spin up a virtual machine, you can run Docker containers, you can experiment on your own.

Experimentation, running projects, building projects, fueling that activity: it's the easiest way of gaining some experience. It's still not work experience, but it'll pull you into things. It'll show you what you're naturally interested in.

And if you do it right, it'll pull you into whatever your next job is. I feel pretty strongly about that. The other thing I would say is that sometimes young folks can be a little cavalier about principled knowledge. I saw this when I was teaching at a university.

There are a couple of classes in university around operating systems, compilers, and microarchitecture.

And you don't necessarily have to go to university to learn these skills, but you do have to learn these skills to be a really effective engineer in the cybersecurity world.

Oftentimes I'll hear engineers say, "I'll look that up when I need to." This is not something you can just look up, because understanding how a computer works means really understanding how an instruction stream executes through a processor, and really understanding the difference between static linkage, dynamic linkage, lazy loading, and address space layout randomization.

By really understanding how that stuff works, you get yourself into a pattern. You build these mental pathways. Sometimes we think of it as muscle memory, and that's what primes you to understand the meatier topics. If you always treat those subjects as something you're going to go look up when it's important, you're never really going to understand the meatier subjects.

You're never really going to be authoritative on those subjects. So you do need the principles. You don't have to go to university to get the principles, but you do have to get those principles. For mid career, I still think it comes back to curiosity and exploration.

I hire people every day who just have internal drive and curiosity, even if they don't have the experience of someone with lower curiosity and lower drive.

Because it's that drive that really pays off. So even for mid-career: what are you interested in? What are your projects? How do you experiment? How are you teaching yourself in that area?

It's easy to help someone who's already helping themselves. And that kind of internal drive, I think is what pays off the most for that person, for that future employee and for the company.

So it's a little bit different. A mid-career person's gonna have the fundamentals, they're gonna have the principles, but life takes hold. Sometimes it's hard to find time to tinker. But you've got to spend the time; you've got to make the time, right? And, you know, I can't help myself. I still do it myself, right? I've got a little FPGA dev board that I'm using for a little radar project.

It has nothing to do with work, but, you know, number one, it feeds the brain, and number two, it actually does start to lead into work. Right? We have identity projects now that impinge upon drones, and that means understanding a little bit about the drone operating environment: radars, radars that work effectively against drones, what the processing pipeline looks like, and how you would help someone understand whether the software on a drone has been compromised. It gives you more authority, it gives you more context, it makes you better.

But you make it sound easy, but how do you find time to keep up to date with so many things happening?

I mean, short answer is you don't.

There's no way I'm up to date on everything.

It's just impossible.

Solve for the thing you can solve for. Everybody has some amount of time. Nobody has no time.

That's the excuse of "I'm too busy walking to work to buy a car."

I guess you're always going to walk to work. You can always make some amount of time and feed something that's interesting. If you're not interested in it, you'll do it for a couple days and then you'll go back to walking to work.

There are so many exciting things happening in the world today.

I won't believe for a minute that none of those many, many things is innately interesting to you. So let's pick on AI. Everybody's interested in AI right now. Here's a fun project that you'll probably never actually use in your work, but I'd be willing to bet money it will help you get that mid-career job.

How does a large language model actually work?

And don't give me words.

Give me code, and don't go use PyTorch.

Give me code that actually goes back to the multiplies, the adds, and the comparisons.

And you're like, well, wait, that's a lot. Like, don't people go get PhDs in it? All right, let's back off. Let's back off.

Let's just do one neuron. Let's just do one neuron. Code it up. How does it actually work? Anyone can do one neuron. And if you can do one neuron, you can probably do a three-layer network.
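Taking the suggestion literally, one neuron with no framework is just multiplies, adds, and a comparison. A minimal Python sketch, where the step activation and the hand-picked AND-gate weights are illustrative assumptions rather than anything prescribed in the conversation:

```python
def neuron(inputs: list[float], weights: list[float], bias: float) -> int:
    # Multiply each input by its weight and add everything up, plus the bias.
    total = bias
    for x, w in zip(inputs, weights):
        total += x * w
    # Step activation: a single comparison against zero.
    return 1 if total > 0 else 0


# Hand-picked weights that make the neuron behave like a logical AND.
AND_WEIGHTS = [1.0, 1.0]
AND_BIAS = -1.5
for a in (0, 1):
    for b in (0, 1):
        print(a, b, neuron([a, b], AND_WEIGHTS, AND_BIAS))
```

Here the weights are set by hand; learning them from data is the step the three-layer exercise adds.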

And if you can do a three-layer network, you can actually do signature or basic pattern recognition. So what is it? MNIST, M-N-I-S-T, has a set of handwritten characters that's already available. People used to run competitions against it.

It's old stuff, right? You're not gonna break any records. You're not gonna break any new ground, but you're really gonna teach yourself how this works.

How do I take this three-layer network and really train it on these characters with these labels? And then can I get at least the same level of prediction as the historic results? And yeah, you could vibe code it, and I guess that's better than nothing, but write it yourself.

You'll really start to understand things. You'll make mistakes. You'll make mistakes in understanding backpropagation. So pick something you're interested in, try to do it, and realize that it's a professional thing and you're not gonna be able to do the professional thing. So what's the essence of it? The essence of all the AI that everyone's excited about right now is this neuron, linked together in a network. There are multiple different ways of building the network, but how does it actually work?

How do you train and how do you predict?

Or how do you classify?
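The train-then-predict loop described above can be sketched without PyTorch in a few dozen lines. To stay self-contained, this sketch swaps MNIST for the four-example XOR problem (an assumption made purely for brevity). The network has an input, a hidden, and an output layer with sigmoid activations, trained by per-example gradient descent with backpropagation. The hidden size, learning rate, and epoch count are arbitrary choices, not tuned values.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_xor(hidden: int = 4, epochs: int = 5000, lr: float = 0.5, seed: int = 0):
    """Train a 2-input, `hidden`-unit, 1-output network on XOR; return (predict, losses)."""
    rng = random.Random(seed)
    data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
    # Input-to-hidden weights (one row per hidden unit) and hidden-to-output weights.
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        # Each hidden unit: weighted sum of the inputs plus bias, squashed by a sigmoid.
        h = [sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(hidden)]
        y = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
        return h, y

    losses = []
    for _ in range(epochs):
        epoch_loss = 0.0
        for x, target in data:
            h, y = forward(x)
            epoch_loss += 0.5 * (y - target) ** 2
            # Backpropagation: error at the output, scaled by the sigmoid's derivative,
            d_out = (y - target) * y * (1 - y)
            for j in range(hidden):
                # then pushed back through w2 to each hidden unit (using w2 before its update).
                d_hid = d_out * w2[j] * h[j] * (1 - h[j])
                w2[j] -= lr * d_out * h[j]
                w1[j][0] -= lr * d_hid * x[0]
                w1[j][1] -= lr * d_hid * x[1]
                b1[j] -= lr * d_hid
            b2 -= lr * d_out
        losses.append(epoch_loss)

    def predict(x):
        return forward(x)[1]

    return predict, losses


predict, losses = train_xor()
print(f"loss: first epoch {losses[0]:.3f}, last epoch {losses[-1]:.3f}")
for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, round(predict(x), 2))
```

Scaling the same loop up to MNIST mostly means 784 inputs (28 by 28 pixels), more hidden units, and ten outputs. That is where mistakes in the backpropagation bookkeeping tend to surface.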

And I would argue that if you just do that experiment, it shouldn't take you more than a month, even if you're only giving it a few hours a week. You'll learn so much more, you'll feel so much more comfortable talking about things, you'll feel much more comfortable reading about some of the more advanced techniques, and you'll surprise yourself. It won't take you long before you can start reading the papers on convolutional nets, recurrent neural nets, and long short-term memory, and then all of a sudden you actually have the mental backing to understand transformers. What is a transformer, and how does it really work? You can do this.

If you've gotten into IT, you can do this.

That's really a very nice way of structuring the whole curriculum, so that you think, I can do that.

Shifting gears slightly: as the CEO of a company that provides security for a lot of clients, do you have sleepless nights?

Yeah.

So just to put things in context, I think I was still having dreams in my thirties about showing up for a college exam without having studied the material. So part of it, I think, is personality and wiring. But absolutely, right? What we do is identity, and identity is the critical infrastructure of any business.

Number one, if identity is down, your workers can't work, right? If identity is down, your customers can't buy stuff. They can't do whatever they use your product for, right? So identity is critical in that it always has to be up.

Identity is also critical because if the bad guy gets into your identity system, they can access anything.

So, yeah, we have to treat it as critical infrastructure.

And this plays into a lot of what we think about and how we hire people: who we hire, the backgrounds we look for. It plays into how we engineer, how we go about the engineering process. We're all a bunch of ex-router and firewall people, so we split parts of our products the way a router is split: the fast path, or data plane; the slow path, or control plane; and the super slow path, or management plane.

So there are different engineering rules when we're working in the different areas, and there are different levels of isolation. But the short of it is, for that fast path, we can never fail, so we have to be meticulous and we have to be slow.

For that slow path, failure is not catastrophic, so we can afford to run more experiments. So we think about each path and approach it in a different way.

From a security perspective, we know that we get all of the threat actors, right? We get the bored teenagers, the hacktivists, organized crime, and the nation states. So we try to recruit from organizations that are very comfortable and competent defending against all of those.

We also try to apply some of the concepts I was talking about earlier: how do you change the game? How do you not play the game the adversary wants you to play?

Trusted computing is really important to us. We spend a lot of time on how we know what is true and what our level of proof is.

And that plays into almost everything we do.

But even with all of that, right? Things can still go wrong. There's always the stuff that you haven't thought about. It's a never ending process, but it's fun.

It's mentally stimulating.

Your work matters. You protect people's livelihoods. We're not making trinkets that people waste money on. We're literally protecting their ability to feed their family.

So it's meaningful work, it's fun work, we hire the best people we can find, we give it everything we can give.

But yeah, it absolutely still breeds anxiety, right? Because even the best engineers are always self critical and everyone is always wondering about what about that next thing and what about that next thing? So there's always the next thing.

You just have to learn how to live with it.

So I guess we have time for just one more curiosity question.

Do you have any personal practices to stay grounded, if you don't mind sharing?

Yeah.

Personally, I would say practices and people.

The longer I go in isolation, the worse the stress and anxiety get. So I try to make sure that I'm not away for too long, coming home, spending time with my wife.

I don't know if you can see them, but I've got two very lazy, sleepy dogs in my office with me. There's something about dogs; they're fun, right?

I wish I was the person my dog thinks I am.

So there's something very grounding about being responsible for an animal that looks at you that way and that you truly take care of in all facets.

Having a partner who actually can kind of remind you to slow down and take a moment, you can't do everything.

I've got a really good team at work that does a similar function.

If there's a secret weapon, it's exercise.

Even if it's a stationary bike, getting on a stationary bike twice a week does so much for regularized sleeping and stress management.

I travel across a lot of time zones, so I've started using a little help to make sure I sleep. I forget, what are the pills called?

We've known about it for a long time, but it's like a sleep regulator pill. I'm forgetting the name of it now.

Something that controls daylight response or something like that.

Exactly. Melatonin.

They're melatonin pills.

Melatonin. Yeah.

But I used to be macho, and I would just try to struggle through it. Now, whenever I cross a lot of time zones, I try to hit the gym, I have a good meal, and then I take a melatonin, darken the room, and just try to get good sleep.

It's hard, but yeah.

Friends, friends, friends, family, routine, sleep, exercise.

So on that note, thanks a lot, Jasson, for sharing very candidly both on the technical side and also your own journey of CTO to CEO and everything that comes with it.

We thank Siddharth for the music and Anita for promoting the Software People Stories. If you like this episode, please subscribe on your favorite podcast client and spread the word in your network.

If you'd like to share your story, contact us at pm-powerconsulting.com.

Hi, Jasson. Welcome to the Software People stories.

Hi. Thanks for having me.

Yeah. We usually start with a self introduction on how you got into IT or more specifically cybersecurity, what has been your career trajectory and so on. And then we will take it from there.

Okay.

So, yeah, I'm Jasson Casey. I'm the CEO and cofounder of a company called Beyond Identity. How did I get into IT?

I mean, I've always been fascinated with how things work, and I remember as a kid taking apart little toys to try to figure out how they worked. At some point, I realized that I could actually make a toy behave slightly differently, that you could make your own toys. So I got into programming fairly early on, just by playing.

First job where I actually got to write software was to help pay for college.

I knew a certain amount of math and knew how to write C. I got an internship with a scientific computing company where the researchers didn't wanna bore themselves with the basic geometry.

They were really advanced mathematicians, so they were like, hey, you go do this, and I thought it was the coolest thing ever. And how did I get into cybersecurity?

Ever since I started working truly full time, I've always been working on infrastructure. When I say infrastructure, think routers and switches that operate at the carrier level, not in your closet at home, but big things. Security is a function of those, and I've always been enamored with it. It started with basic filtering, and then it got into active controls.

Like, how do you handle denial of service? How do you handle distributed denial of service? And eventually it got into analytics. Like, how do you tell if someone is running a covert C2 (command-and-control) channel?

And then, you know, from there it got into, well, why are they doing this? What's going on that's causing them to do this? What exactly is going on between Russia and Europe that might make them wanna do this thing to this particular company? And I would say the theme in all of it is curiosity, but it's all been very related, at least from my perspective.

Wonderful. Maybe I'll start with what you said about loving the way you could make toys do things.

Yeah.

Is that somewhat of a hacker mindset, or a builder's?

Yes.

So the old-school definition of hacker is almost more about just being curious and trying to figure out how something actually works. And can you make it do something it wasn't necessarily intended to do? Right. Almost for the joy of it, right?

So I guess in that mindset, sure. I mean, I've never really thought of myself as a hacker, but like, I'm definitely curious. I definitely want to understand how things work. I know this annoys my colleagues to this day.

But yeah, it was fun. And I know a lot of people are like this, right? When you finally figure it out, you get almost a shot of serotonin in your brain, right? You get that excitement: I figured it out, I made it do that thing.

And yeah, my parents didn't care for it, because they didn't understand why I was destroying these things they bought. But for me, it was fun.

Yeah.

Because one thing that always intrigues me when talking to anybody working in cybersecurity is, of course, a couple of things. One is physical security. But beyond that, how do you stay one step ahead of the people who try to break things?

Well, there's always someone who you're not one step ahead of, otherwise there wouldn't be an industry.

But I think this answer really is similar to how do you stay one step ahead of your competition? How do you stay one step ahead of almost anyone?

And I think it comes back to fundamentals and kind of persistence in practice.

It's the early lessons that I remember having all the way back in like the late nineties about how does a computer actually work?

How does a program actually execute? How does it go from being a file to being a running process that's doing things? There really are fundamentals in how that happens. There really are fundamentals in how an operating system works. There really are fundamentals in how a compiler takes something we easily understand and turns it into something a machine understands.

And to this day, I swear it's still those fundamentals that I use and that I would argue the more advanced folks use when they're thinking about offense and defense.

It really is a game of fundamentals. A computer only works in a certain way, and an operating system only behaves in a very specific way, or at least it has these regular constructs; it doesn't matter if it's Mac or Linux or Windows or variations of those. Processes run, processes are loaded, processes can create other processes. They have to map into memory, they have privilege levels, there are ways of interacting.

Shared libraries have to be readable and executable for certain things to happen.

And by the fact that they're readable and executable, you can actually inspect them without privilege.

It's a very fundamental thing, but it's kind of mind-blowing when you start wondering: wait a minute, has the system library I'm relying on, like libc, been hooked by adversary malware, or maybe by an EDR?
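The observation that shared libraries must be mapped readable and executable is easy to see on Linux, where `/proc/<pid>/maps` lists every memory mapping with its permissions. This sketch is Linux-only and a starting point rather than a hook detector: it parses that text and collects the shared objects that have an `r-x` mapping. Actually checking for hooks would mean comparing those in-memory bytes against the on-disk file, which is not shown here.

```python
import os


def parse_maps_line(line: str) -> tuple[str, str, str]:
    """Split one /proc/<pid>/maps line into (address range, permissions, path)."""
    # Fields: address, perms, offset, device, inode, and an optional pathname.
    parts = line.split(maxsplit=5)
    path = parts[5].strip() if len(parts) == 6 else ""
    return parts[0], parts[1], path


def executable_shared_objects(maps_text: str) -> set[str]:
    """Return the shared objects that have at least one readable and executable mapping."""
    libs = set()
    for line in maps_text.splitlines():
        if not line.strip():
            continue
        _, perms, path = parse_maps_line(line)
        # perms looks like "r-xp": index 0 is read, index 2 is execute.
        if perms[0] == "r" and perms[2] == "x" and ".so" in path:
            libs.add(path)
    return libs


if __name__ == "__main__" and os.path.exists("/proc/self/maps"):
    # Inspect the current process; reading our own maps needs no extra privilege.
    with open("/proc/self/maps") as f:
        for lib in sorted(executable_shared_objects(f.read())):
            print(lib)
```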

I guess it's an interesting question, and the answer is it still comes back to fundamentals.

Yeah. Like you said, what I find really interesting is that it's no longer monolithic systems that we build.

And at either build time or run time, there's a whole supply chain.

Services that you consume, services that you feed, etcetera.

And it's not just the typical, you know, SQL injection type of thing that used to be there. You have supply chain attacks. And now, with more AI-enabled things, people are talking about poisoning the data or tricking these systems into exhibiting behaviors they're not supposed to. Right? Which means it is no longer a completely predictable system.

Oh, for sure.

Right? So how does that play out?

So, again, I still think it comes back to fundamentals. Let's take AI for instance. What actually is AI under the hood? AI, at least the way most people use it, is next token prediction. It's really, really good next token prediction. And maybe there's emergent behavior in this next token prediction around being able to understand things like semantics as opposed to just syntax. But it still is next token prediction, which means it's probabilistic.

And it still operates on a baseline computer, which is actually doing deterministic steps. So there are a lot of ways to analyze these things and work with them that still bring you back to fundamental concepts.
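The point that a probabilistic model still runs as deterministic steps can be made concrete. A next-token step turns scores (logits) into probabilities with a softmax and then samples one token. The vocabulary and logits below are made up, and a real model computes its logits with a large neural network; the sketch only shows that with a fixed random seed, the "probabilistic" output is fully reproducible.

```python
import math
import random


def softmax(logits: list[float]) -> list[float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(vocab: list[str], logits: list[float], rng: random.Random) -> str:
    """Draw one token according to the softmax probabilities."""
    return rng.choices(vocab, weights=softmax(logits), k=1)[0]


vocab = ["dinner", "lunch", "coffee"]  # made-up vocabulary
logits = [2.0, 1.0, 0.1]               # made-up scores from a hypothetical model

# Same seed, same "random" choices: every step underneath is deterministic.
rng_a, rng_b = random.Random(42), random.Random(42)
seq_a = [sample_next_token(vocab, logits, rng_a) for _ in range(10)]
seq_b = [sample_next_token(vocab, logits, rng_b) for _ in range(10)]
print(seq_a == seq_b)  # True
```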

One of the areas we actually work on at Beyond around AI is this question: how do you know what's real? We think that's probably the question society is going to be most focused on for at least the foreseeable future. We have deepfakes of video. We have deepfakes of audio.

We have engines that basically let you sound like anyone in email and text. So we've thought pretty deeply about this. There's this probabilistic engine that can be really good at mimicry. And there's a whole host of companies that build detectors, right?

You can use their software to figure out, wait a minute, is this image maybe a fake? But we look at that and, going back to fundamentals, our approach is: well, wait a minute, how does an adversarial network work? How would I actually train a generator? And the answer is, well, I'd use their detector to train my next generator.

So that kind of feels like an arms race. And then we started thinking, wait a minute. Maybe the answer to solving this problem isn't to come at a probabilistic tool with another probabilistic tool. Maybe the answer is to change the equation completely.
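The arms-race argument ("I'd use their detector to train my next generator") can be illustrated with a toy. This is not a real GAN, which trains a generator and a discriminator jointly with gradients. It is plain hill climbing in which a one-parameter generator keeps only the changes that a black-box detector scores as less fake. `REAL_MEAN` and the detector's scoring rule are invented for the illustration.

```python
import random

REAL_MEAN = 5.0  # hypothetical: genuine samples cluster around this value


def detector(sample: float) -> float:
    """Hypothetical fake detector: a higher score means the sample looks more fake."""
    return abs(sample - REAL_MEAN)


def train_generator_against(score, steps: int = 1000, step_size: float = 0.1, seed: int = 0) -> float:
    """Hill-climb the generator's single parameter using only the detector's scores."""
    rng = random.Random(seed)
    center = 0.0  # the generator starts out producing obvious fakes
    for _ in range(steps):
        candidate = center + rng.uniform(-step_size, step_size)
        # Keep a change only if the detector finds it less fake.
        if score(candidate) < score(center):
            center = candidate
    return center


fooled = train_generator_against(detector)
print(f"detector score before: {detector(0.0):.2f}, after: {detector(fooled):.2f}")
```

In this setup, any published detector becomes a training signal, which is the reason given for changing the question rather than building a better detector.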

And the thing that really got us to think differently was this counterthought: if AI is really around the corner, if everyone's gonna use AI, if AI is gonna explode, then my sister's gonna join a video call with a real-time AI blemish remover on her image. She's going to turn on real-time language translation so you can hear her in Spanish, even though she doesn't speak Spanish, right? There are all these valid uses, and if it's really going to explode, we need to assume all of this is going to happen. So what does it even mean to ask, is this a deepfake, when it's a valid use?

And so we took that as basically a realization that the world is kind of asking the wrong question.

The right question isn't, is this a deepfake? The right question is, who authorized this? Whose device is this coming from?

And can I answer that deterministically? So that's an example of going back to first principles and thinking about why the problem is hard.

And you realize that maybe I have to completely change the equation of how I'm responding to this problem.
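"Who authorized this, and whose device is it coming from?" has a yes-or-no answer when content is cryptographically bound to a device key. As a standard-library-only stand-in, this sketch uses an HMAC tag. A real design along these lines would more likely use asymmetric signatures from a hardware-protected key, so that verifiers never hold the secret. The function names and the idea of tagging each media frame are illustrative assumptions.

```python
import hashlib
import hmac
import secrets


def tag_frame(device_key: bytes, frame: bytes) -> bytes:
    """Bind a frame of content to the device key that produced it."""
    return hmac.new(device_key, frame, hashlib.sha256).digest()


def verify_frame(device_key: bytes, frame: bytes, tag: bytes) -> bool:
    """Deterministic check: True only if this key tagged exactly these bytes."""
    expected = hmac.new(device_key, frame, hashlib.sha256).digest()
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, tag)


device_key = secrets.token_bytes(32)  # stands in for a key held on the device
frame = b"video frame, blemish remover and live translation applied"
tag = tag_frame(device_key, frame)
print(verify_frame(device_key, frame, tag))  # True
```

Note that the check does not ask whether AI touched the frame, only who vouched for it, which matches the reframed question.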

So when we talk about building these secure systems, like you said, going beyond just GenAI, even if you're using, say, earlier popular terms like big data or AI/ML, there's probably a lot of noise there, like you said, whether it's deepfakes or something else.

And these need to operate pretty much in real time, right, whenever you need them.

So is that going to increase the cost of computing overall?

Is it going to increase the cost?

That's a good question. I don't know. Let's think about that real quick. If we look at the operating model of the big companies that run the frontier models, like OpenAI and Anthropic, we know that they still don't even make money at two hundred dollars a month for a pro subscription, right?

So clearly the expense is immense, right? The amount of energy you spend to answer the question of where you should go to dinner tonight on OpenAI is shockingly high. But number one, research is also starting to show that we don't necessarily need these massive models to get intelligent answers.

We can actually use distillation techniques: use a big model to generate sequences that you then use to train a smaller model with fewer parameters. Smaller models are basically more efficient. Where is the floor in that work?

We don't know yet, but like it's clearly progressing.
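Distillation as described trains a small model on what a big model produces. One common variant, swapped in here because it fits in a few lines, matches the student's output distribution to the teacher's temperature-softened distribution using KL divergence. The logits and the temperature of 2.0 below are arbitrary; training a student to drive this number down is the part not shown.

```python
import math


def softmax_t(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Softmax with a temperature; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float], student_logits: list[float], temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened output distributions."""
    p = softmax_t(teacher_logits, temperature)
    q = softmax_t(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


teacher = [3.0, 1.0, 0.0]  # made-up outputs of a large model
close_student = [2.5, 1.0, 0.0]
far_student = [0.0, 0.0, 3.0]
print(distillation_loss(teacher, close_student) < distillation_loss(teacher, far_student))  # True
```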

There are also clear advances in computing: not just building processors that are wider and can execute more instructions in parallel, which clearly speeds this up, but also analog computing. So, again, first principles: if we think about what a large model is at its core, what operations are actually happening? There are multiplies, there are summations, and then there are comparisons against zero.

At least most of the time, I'd argue. Clearly, the activation function can differ between models, but generally you multiply a matrix by some inputs and then consolidate the answers for each row, right? You sum them, and then you run those answers through an activation function. Generally you're asking, is it above zero or not, and you filter them. So if we're doing that over and over and over again, and we think about what the circuit that does it looks like, it's really inefficient. It's a ton of gates to do that basic computation.
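The operations described above can be written out and counted for one layer. This assumes a fully connected layer with n inputs and m outputs and a ReLU-style activation (the "is it above zero" comparison); the width of 4096 is an arbitrary example size, not a figure from the conversation.

```latex
y_i = \max\!\Big(0,\; \sum_{j=1}^{n} W_{ij}\, x_j + b_i\Big), \qquad i = 1, \dots, m

\text{multiplications} = mn, \qquad
\text{additions} = m\big((n-1) + 1\big) = mn, \qquad
\text{comparisons} = m

m = n = 4096 \;\Rightarrow\; mn = 16{,}777{,}216 \text{ multiply-add pairs per layer, per input vector}
```

Repeating that for every layer and every token is the "over and over and over again" that makes the digital circuitry costly.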

Analog computing is really, really effective at doing the exact same operations that I just described in a much faster and much more energy efficient way. And so, again, like, I think we're still at the beginning, but there's a ton of companies.

Mythic is the one I read about the most recently, although I'm sure there are others.

And they're basically building analog computers, or analog processors, to both accelerate and make more energy efficient these primitives of model computation, or at least neural net computation. So I think there are a lot of avenues in engineering and science that are going to drive the cost down. But right now, it clearly is more expensive than anything we've seen.

Yeah. No, my question is more focused on the cost of building a security layer.

Particularly if we need to take action in real time, with so much noise to be filtered out, while also making sure that you are protected.

I think that's actually gonna drive more deterministic thinking. If you look at a lot of security controls today, they're probabilistic. Like, hey, if this violates these heuristics, generate an alarm and have someone respond.

And then there are some other things that are maybe a little bit better than that, but generally these are probabilistic techniques. They have some sort of noise threshold; above that threshold, they generate an alarm and a human is gonna respond.
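The probabilistic pattern described above (score an event against heuristics, alarm only above a noise threshold) can be sketched in a few lines of Python. The heuristic names, weights, and threshold are hypothetical, chosen only to illustrate the shape of such a control, not taken from any real product.

```python
from typing import Callable, Dict, List

Event = Dict[str, object]
Heuristic = Callable[[Event], float]

# Hypothetical heuristics: each contributes a suspicion weight for an event.
HEURISTICS: List[Heuristic] = [
    lambda e: 0.5 if e.get("new_country") else 0.0,
    lambda e: 0.25 if e.get("off_hours") else 0.0,
    lambda e: 0.5 if e.get("many_failed_logins") else 0.0,
]


def score_event(event: Event, heuristics: List[Heuristic]) -> float:
    """Sum the suspicion weights from every heuristic."""
    return sum(h(event) for h in heuristics)


def alerts(events: List[Event], heuristics: List[Heuristic], threshold: float) -> List[Event]:
    """Return only events whose score exceeds the noise threshold; a human triages these."""
    return [e for e in events if score_event(e, heuristics) > threshold]


events = [
    {"id": 1, "new_country": True, "off_hours": True},
    {"id": 2, "off_hours": True},
    {"id": 3, "new_country": True, "many_failed_logins": True},
]
print([e["id"] for e in alerts(events, HEURISTICS, threshold=0.6)])
```

Anything scoring below the threshold passes silently, and anything above it still needs someone to respond, which is the property the speaker contrasts with deterministic controls.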

And again, there's a host of companies that will offer you AI-enabled incident response, so you can respond at the speed of the adversary. And I think there's a place for that.

Personally, I'm more interested in that as a fundamentals question. If the adversary is setting the pace of the attack, and we do the same thing they're doing by jumping to these AI incident responders, they're now controlling our response. It makes me wonder whether that is the right solution, or whether we should at least take a step back for a minute and ask, well, how do we change the game? Is there something we could do that's different?

Could we change the equation so that it almost doesn't matter how much computation they have or what they can throw at us? Is there a more deterministic solution? And I think we've found something like that in trusted computing and some of the identity-based defense that we talk about here at Beyond Identity. So, for instance, phishing.

Phishing works really, really well, and it scales with a threat actor's ability to build carefully crafted messages and send them out.

With AI, the scale is completely gonna change.

Yeah.

Right? So if we look at the statistics today, before AI even showed up, the average company with training fails, or at least clicks through on, a phish four percent of the time.

It turns out the average company without training does it twenty percent of the time. Let's do some basic mathematics. How many unique phishes have to happen at that failure rate before a company absolutely is gonna have to deal with a security incident? It's anywhere from four for an untrained company to, like, twelve for a trained company. So, number one, that basically means you're always dealing with security incidents. Right? Now let's introduce AI that's gonna make those phishes perfectly crafted, where you can't even tell the difference.
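The "basic mathematics" above can be modeled, under a simple assumption that each phish is an independent trial with the quoted click rate, as a cumulative probability of at least one click. The exact phish count at which an incident becomes "certain" depends on the confidence level chosen and on details the speaker doesn't spell out, so this sketch computes the curve rather than trying to reproduce his specific figures.

```python
import math


def p_at_least_one_click(click_rate: float, phishes: int) -> float:
    """Probability that at least one of `phishes` independent phishes gets a click."""
    return 1.0 - (1.0 - click_rate) ** phishes


def phishes_until(click_rate: float, confidence: float) -> int:
    """Smallest n with P(at least one click) >= confidence, assuming independence."""
    if not (0.0 < click_rate < 1.0 and 0.0 < confidence < 1.0):
        raise ValueError("click_rate and confidence must be strictly between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - click_rate))


# Click rates quoted in the conversation: 20% untrained, 4% trained.
for label, rate in [("untrained", 0.20), ("trained", 0.04)]:
    curve = [round(p_at_least_one_click(rate, n), 3) for n in (1, 5, 10, 20)]
    print(label, curve)
```

Whatever threshold you pick, the probability climbs toward 1 as the number of unique phishes grows, and anything that makes each phish more convincing raises the per-phish rate and steepens the curve.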

And so our argument is that if you wanna play that game, you're letting the adversary decide the pacing and the space where you're going to interact with them, and we think that's a losing battle. What we argue is that if you go back to fundamentals, you can actually use some of the trusted computing concepts that already exist in your environment to say, hey, even if people click on the bad links, how do I ensure nothing bad actually happens?

And, you know, we can get into the details of how all that works, but for now, I'll just say there's a way for you to change your authentication so that even if your people always click on the bad link, the session still can't be hijacked.

What about the more defensive things like honeypots? Is that a space where you could be a little more proactive?

Possibly. Right?

So a honeypot is really good at collecting new samples. You then put a reverse engineer on that sample to figure out how it works. If your reverse engineer is good enough, they can do something called sinkholing, right? If you can figure out the C2 mechanism of the sample, then generally you can get some of those samples to connect back into your sinkhole, and then you get telemetry. Maybe AI can speed that up.

I'm sure there are some avenues for that to work in that industry. I do question it, though. EDRs are really, really good at detecting malware today, so adversaries have shifted a lot of their tactics toward living off the land. A lot of their C2 now is based on existing things that have to occur in an enterprise for the enterprise to work. So while you could certainly apply AI to speed up that process, from honeypot to reverse engineer to sinkholing the C2, I don't know how effective it would be at broad scale, given the shift in adversarial tactics.

Some of the things I think are really interesting right now: Russia was running a campaign focused on diplomatic corps. I think Microsoft's calling it Secret Blizzard.

Their persistence mechanism is kind of ingenious. They basically drop a certificate under their control into the infected machine's root store, and they control ISPs in Russia, Belarus, and Russian-influenced territories. So they intercept TLS connections, and because their certificate is in the root store, they can sign for the name and go about their business. I think they have a variation where they do it with a browser plugin as well.

So that's not something that you're going to sinkhole, right? But it's persistent and it's an interesting C2 channel.

So I think that's a good example of asking how do I change the game, and how do I look for more deterministic controls?

The interesting question there is, wait a minute, why is device posture not part of authentication?

When you get on an airplane, you have to prove that you're the right person and you have no guns, knives, explosives.

But in security and IT, for some reason, the security questions and the IT questions usually only come together at, like, a C-level officer of the company.

What if, when a computer is executing some sort of transaction, part of that transaction wasn't just proving that you have the right key, but also: give me a list of what's in your root trust store right now, so that I actually understand the level of compromise that's even possible? Give me a listing of the browser plug-ins that are actually installed in your browser.
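Here is a minimal Python sketch of that idea: an authentication decision that requires both a valid key proof and a device posture report (root store contents and browser extensions) that matches an allowlist. The `DevicePosture` and `Policy` types, the fingerprint strings, and the allowlist approach are all hypothetical illustrations, not Beyond Identity's implementation. In practice the posture report would itself need to be attested, for example with hardware-backed measurements, or a compromised device could simply lie.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class DevicePosture:
    """Hypothetical report a device attaches to an authentication request."""
    root_store_fingerprints: FrozenSet[str]
    browser_extensions: FrozenSet[str]


@dataclass(frozen=True)
class Policy:
    """Hypothetical allowlists maintained by the organization."""
    approved_roots: FrozenSet[str]
    approved_extensions: FrozenSet[str]


def posture_violations(posture: DevicePosture, policy: Policy) -> List[str]:
    """List every root certificate or extension that is not on the allowlist."""
    problems = []
    for fp in sorted(posture.root_store_fingerprints - policy.approved_roots):
        problems.append(f"unapproved root certificate: {fp}")
    for ext in sorted(posture.browser_extensions - policy.approved_extensions):
        problems.append(f"unapproved browser extension: {ext}")
    return problems


def authenticate(key_proof_ok: bool, posture: DevicePosture, policy: Policy) -> bool:
    """Deterministic decision: the key proof AND the device posture must both pass."""
    return key_proof_ok and not posture_violations(posture, policy)
```

A rogue root certificate like the one in the campaign described above would show up as an unapproved fingerprint and block the session outright, rather than raising an alarm for someone to triage.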

So, like, I think there are these adjacent but different questions we could ask that give us more deterministic controls to handle both adversarial threats and their evolution, including in the face of AI. I think AI is our biggest challenge as a security person or as a defender. And I think the easy button, and the easy mistake we can all make, is to reach for AI to combat AI, right? Sending robots to fight robots feels reactionary.

And maybe I'm wrong, maybe this is the right approach, but maybe this is a moment where we actually have to rethink our security controls and try to seek out more deterministic versus probabilistic controls.

So, still staying on the topic of AI: with this whole, let's say, fancy for vibe coding, software development is moving away from centralized control by more enterprise-class development teams to something that someone creates and then just deploys on the corporate network.

Yeah.

How do you think that is going to play out or what can be done to have some guardrails for those?

So, number one, I'm a big fan of exploration and experimentation. I do it myself. I've got a small project right now where I'm really invested and interested in certain types of low-level attacks on embedded systems, and I'm using an AI assistant to help me go faster. But I'm not asking it to build an entire system. I'm treating it like a junior engineer while I'm the senior engineer: I'm specifying interfaces and types and asking it to fill in the gaps.

This is how I feel confident and comfortable, and I understand what I'm getting, and I have some way of checking it. I know there's other ways of interacting with it where you just kind of ask it for, hey, build me a utility that does this.

That makes me a little bit more nervous, and part of it has to do with just my personality. Part of it has to do with where I work in the stack. If you don't actually understand what's happening, you can't really vouch for the safety of what's happening.

Maybe you work in an area where security doesn't matter, and that's fine.

For example, if I'm just trying to learn quickly and build little experiments, it's totally fine and it's totally acceptable. But if you're gonna deploy something into production, how do you actually know, how do you prove to yourself that you have the absence of all these classes of vulnerabilities? I don't think you can actually let yourself not think about these things, and I don't think you can just say, I'm gonna look that up later.

I still think experience matters, and that's how you'll actually be a better vibe coder: by treating the AI assistant as a very directed tool that's helping you stitch together the gadgets you've come up with in your mind.

Clearly, it's also an opportunity for more of these deterministic controls. I think trusted computing is gonna have a lot to offer the world as we put out more AI-generated code.

I also think it's gonna open the door for a little bit more responsibility on the programmer.

I may not be able to analyze all of your code, but I'm gonna require you to sign your code cryptographically, so that when I'm building, I have traceability exactly back to you, and you'll bear some sort of responsibility for what that signature guarantees.

And we certainly see that in defense and government applications already.

Yeah, so that was one thing. Recently, I was talking to a team working in aerospace. Their challenge was that once they put something out there, they load a lot of power into it in terms of being able to remotely monitor, control, make decisions, maybe issue alerts, and so on. So their challenge was how to protect those kinds of systems, and since you mentioned embedded systems...

I was wondering how one would do that when it's impossible to go physically and do something to the device. And there's also a lag, even if it's only a few seconds. Even after you detect what you need to do, there is a lag. Right?

Yeah. Yeah.

So what does one do in those kinds of systems?

There are a couple of things. The area I think is most interesting for that kind of work is called trusted computing, and it's a formal research area in academia, but it's also made its way into aerospace and into defense. There are a couple of different angles to trusted computing, but at a high level, it's: how do I know, both at compile time and at runtime, that the thing I think is true is actually true? I'll give you an example. There's a little coprocessor called a TPM, a Trusted Platform Module.

A TPM is used by Microsoft in the secure boot process, so that Microsoft can know it's only going to decrypt its disks if everything, from the original ROM loader to the boot loader to the kernel to the kernel-loaded drivers, has been unmodified since Microsoft produced it. The way the TPM does this is with these little registers. Software can't just write arbitrary values into these registers; only the coprocessor itself updates and uses them. So when you create a key, you can bind that key to the register values.

And so only if those registers have those values will that key work. So Microsoft creates its encryption and decryption keys, and there's this other operation called extend.

As the system is booting, all the way from the boot ROM, to the UEFI boot process, to the boot loader, to the kernel itself, it's calling extend over the image of what it's about to map into memory. So you get this kind of linear, stable sequence.

And so if I were to change any of that and then ask this coprocessor for a decryption, because the register values are not correct, the key wouldn't work.
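The extend-and-bind mechanism described above can be simulated in a few lines of Python. This is a toy model, not the TPM API: it assumes SHA-256 registers that start at all zeros, models extend as new = SHA256(old || SHA256(image)), and stands in for the hardware's key protection with an ordinary object (`ToySealedKey` is a hypothetical name). A real TPM enforces the check inside the chip, so the secret never sits in software like this.

```python
import hashlib
from typing import List

PCR_SIZE = 32  # SHA-256 digest length in bytes


def extend(pcr: bytes, image: bytes) -> bytes:
    """Toy extend: fold a measurement of `image` into the register; it can't be overwritten."""
    measurement = hashlib.sha256(image).digest()
    return hashlib.sha256(pcr + measurement).digest()


def measure_boot(chain: List[bytes]) -> bytes:
    """Extend the register once per boot stage, in order."""
    pcr = bytes(PCR_SIZE)  # in this model the register starts at all zeros
    for image in chain:
        pcr = extend(pcr, image)
    return pcr


class ToySealedKey:
    """Stand-in for a key bound to an expected register value."""

    def __init__(self, secret: bytes, expected_pcr: bytes) -> None:
        self._secret = secret
        self._expected = expected_pcr

    def unseal(self, current_pcr: bytes) -> bytes:
        if current_pcr != self._expected:
            raise PermissionError("register mismatch: boot chain was modified")
        return self._secret


good_chain = [b"boot rom", b"uefi", b"bootloader", b"kernel", b"drivers"]
disk_key = ToySealedKey(b"disk key", measure_boot(good_chain))
print(disk_key.unseal(measure_boot(good_chain)))
```

Because each value depends on the previous one, changing any stage, or even the order of stages, produces a different final register value, and the bound key simply won't unseal.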

So that's a small example of hardware-based trusted computing that software can leverage to know that nothing in its chain of ancestors has been modified and to establish some level of trustworthiness. There are things like that which operate at the scale and speed of a processor and give you fairly strong controls. The assumption there is that the foundry that printed the chip has not been compromised, which maybe it has been, but that's a high bar.

I wanna do a little segue. We've been talking about technology, which you're clearly very comfortable with, and you speak with a lot of experience and authority. This next part is more about Beyond Identity.

Okay. Starting with: what was the genesis of Beyond Identity, and how did you jump into this?

Yeah. The genesis of Beyond Identity, it's pretty interesting.

So I used to work at a company called Security Scorecard. I was their CTO, and I ran the research and development department. You can think of Security Scorecard as a cybersecurity intelligence company. Right? We were basically building the equivalent of a credit score for a company, but for their cybersecurity.

So a lot of the work I did was around how we look at all of the cyber signals a company gives off that an attacker can see, and turn that into a score that's predictive of whether a bad thing will happen to them.

And through that, we had a partnership with a bunch of cyber insurance vendors, so we could actually see which companies were making claims or getting payouts.