Beyond Identity Opens Early Access for the AI Security Suite

You can't see what your AI agents are doing. Today, we're announcing Beyond Identity's AI Security Suite, the first identity security platform purpose-built for autonomous AI agents. With it, security teams can answer 'which identity,' 'on which device,' 'what did the agent do,' and 'what is the agent allowed to do' with cryptographic proof on every request. With this foundation, enterprises can safely scale AI adoption, prevent data exfiltration, and enforce data governance automatically, without slowing down developers.

Key Takeaways

- AI agents lack real identity. Enterprises are deploying AI everywhere (developer copilots, automation agents, chatbots connected to internal data) but struggle to answer basic questions: Which user initiated this AI action? On which device? What data did the agent access? Without real AI identity, organizations cannot govern AI access, prevent data exfiltration, or investigate incidents when things go wrong.

- Traditional IAM fails for autonomous agents. Identity systems designed for human-in-the-loop sessions cannot secure agents that operate independently for hours or days with chained delegations across multiple tools and services.

- The AI Security Suite forensically proves and controls what AI can do and access. Every agent runs with a cryptographic credential tied to the hardware of the device, VM, or container, making the credential impossible to copy, steal, or share.

- Get early access. Organizations deploying enterprise LLMs, autonomous agents, or AI-powered workflows can join the waitlist to experience the AI Security Suite before general availability.

What Are the Challenges with Securing AI?

Enterprises are deploying AI agents everywhere: developer copilots like Claude Code, automation agents, RAG systems, and tools calling external LLMs. But these agents have no real identity. Organizations cannot control what data agents can access, cannot prevent agents from taking unauthorized actions, and cannot forensically prove which user or device initiated an AI operation.

Recent research demonstrated how a prompt injection attack can be used to make Claude Cowork exfiltrate confidential loan documents. The attack didn't steal the victim's credentials. The attacker gave Claude their own API key, embedded in a malicious document with hidden instructions. Claude executed a curl command using that key, uploading the victim's files to the attacker's account without any approval required.

This attack exposes fundamental problems with today's AI security:

- Data access and permissions are ungoverned. A junior employee can ask an AI assistant a question like, “What top-secret projects are executives working on?” and the agent may disclose sensitive information simply because it has access to the data, without understanding who should see what based on role, department, or active projects.

- Developers unknowingly expose production systems. AI agents running on developer machines have direct access to source code, credentials, and production systems. A single prompt injection could exfiltrate everything without the developer realizing what happened.

- Tool calls happen without oversight. Agents can invoke tools and call external APIs autonomously, but organizations have no way to approve, monitor, or restrict which tools agents can use based on device security posture or user context.

- MCP servers create backdoors into enterprise systems. When agents connect to MCP servers exposing databases and internal APIs, a compromised server or malicious tool can cascade access across the entire agent mesh.

According to Anthropic's own guidance, prompt injection is a known attack vector. AI-generated code introduces additional risks including hardcoded secrets, injection flaws, and logic vulnerabilities.

What Is Beyond Identity's AI Security Suite?

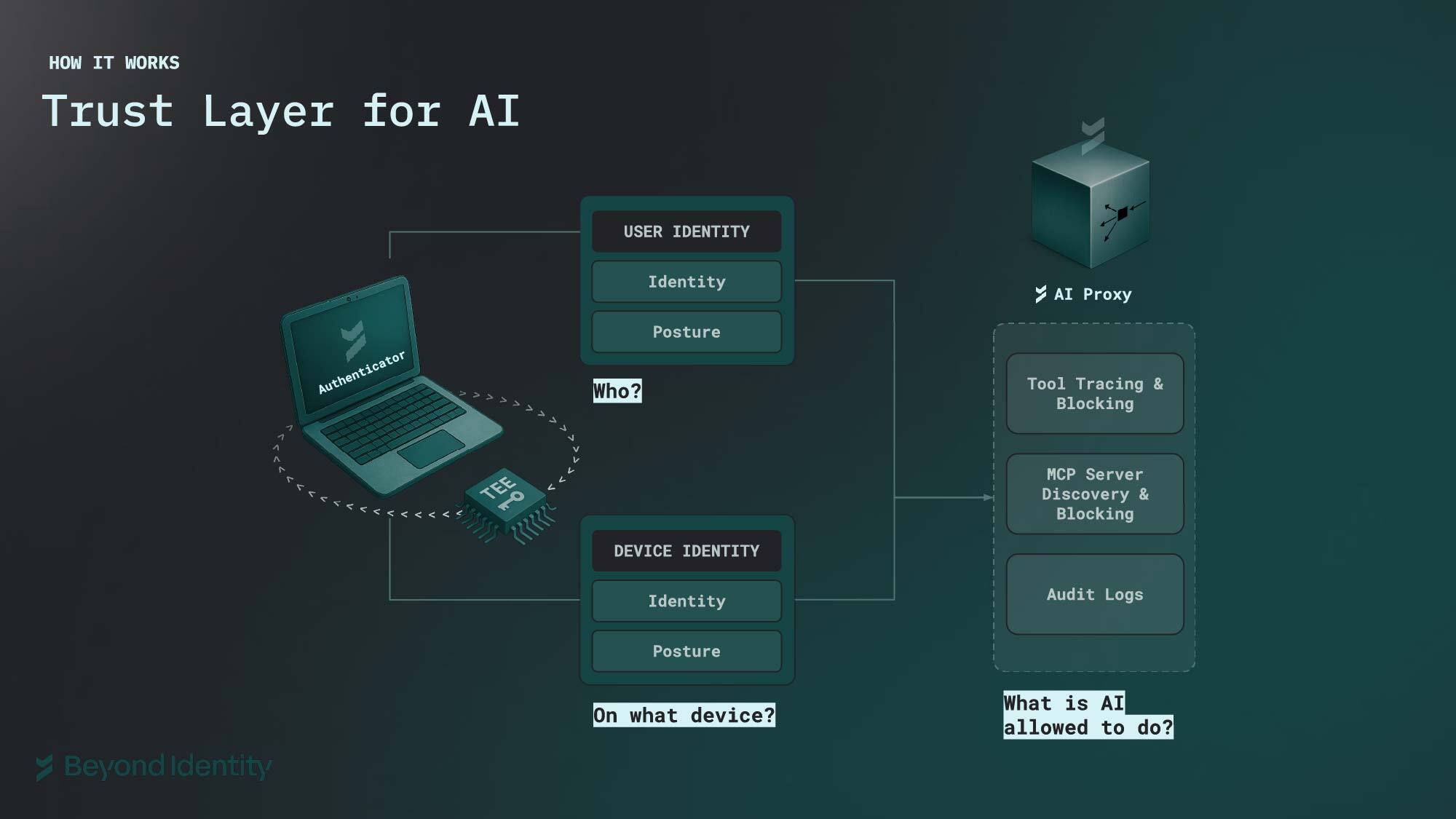

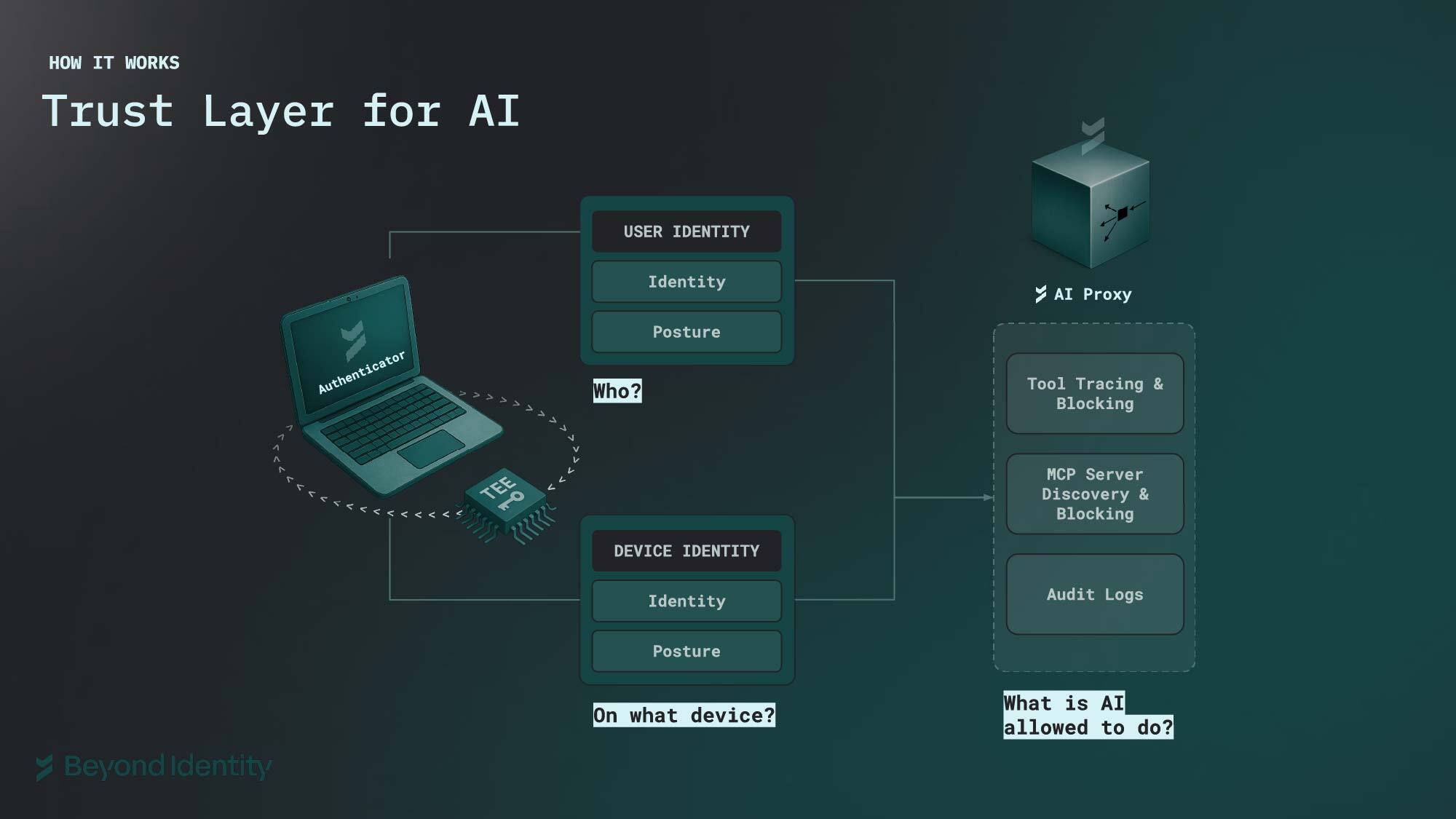

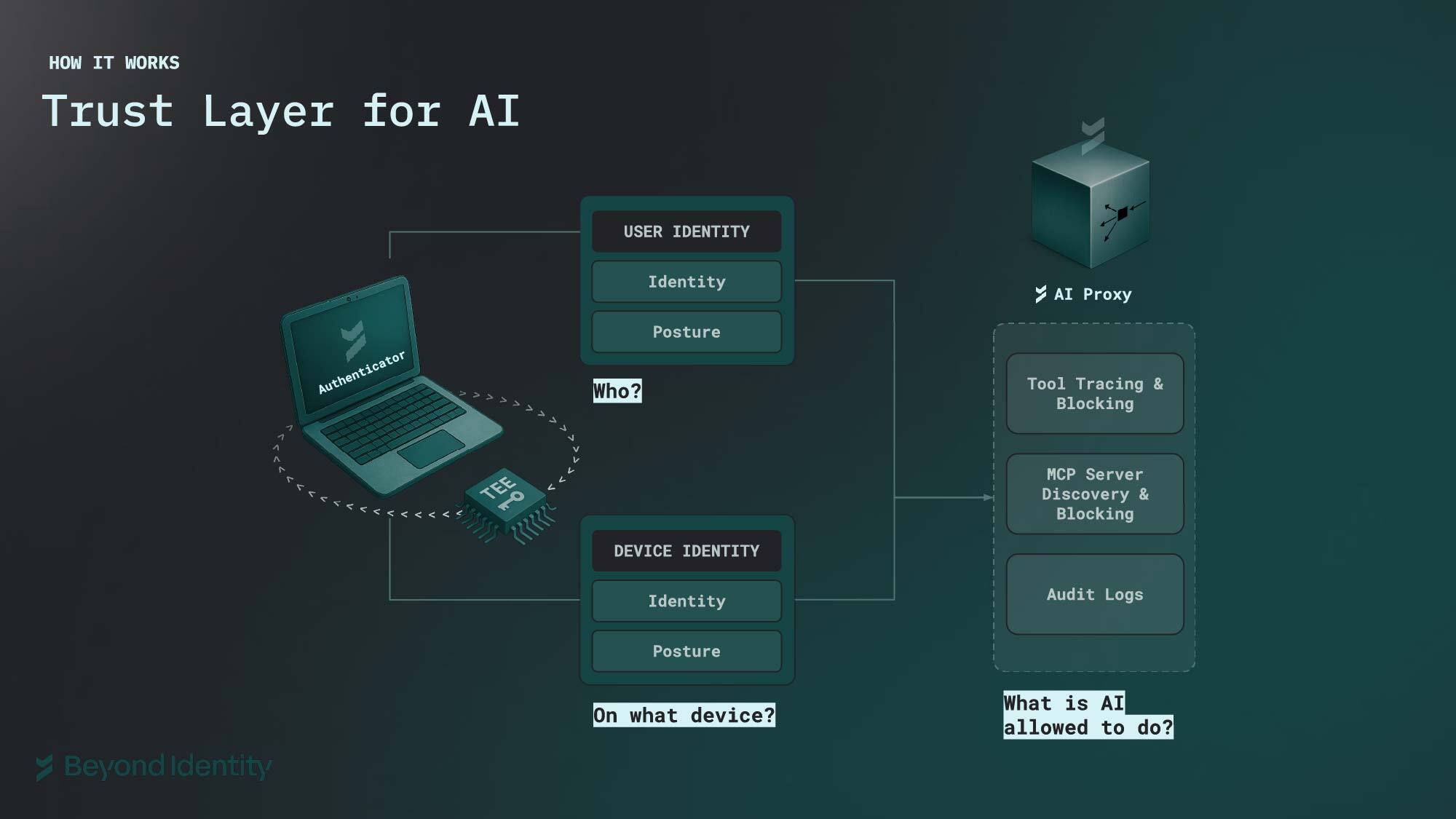

The AI Security Suite is the AI trust layer that provides agent visibility and control. It sits between your agents and the AI services they call (models, tools, and MCP servers), enforcing context-aware policies on every request while maintaining cryptographic audit trails of all AI actions.

By providing rich contextual information about users, devices, and permissions to the AI layer, the AI Security Suite enables enterprises to make informed decisions about what data agents can access and what actions they can take, creating guardrails that keep autonomous agents operating within appropriate boundaries.

What Are the Use Cases?

The AI Security Suite enables secure deployment of AI agents across use cases that are risky without guardrails:

Know exactly which AI-generated code, decisions, or artifacts came from which agent (and prove it)

When AI-generated output causes incidents or compliance violations, you need an immutable audit trail to investigate root cause and demonstrate due diligence. With the AI Security Suite, every artifact carries cryptographic signatures showing the complete chain of custody: originating user, device posture, agent identity, models used, and policies in effect. This speeds incident investigation and compliance proof.

Secure developers using AI agents and copilots

Device-bound identity ensures credentials cannot leave developer workstations. Every API call must be cryptographically signed by a private key stored in the device's TPM or Secure Enclave, making it impossible to copy or exfiltrate credentials even if the agent running Claude Code or GitHub Copilot is compromised.

Secure MCP servers without blocking developer productivity

MCP servers expose databases, APIs, and internal tools to AI agents, but unrestricted access creates backdoors into enterprise systems. With the AI Security Suite, the security proxy validates MCP servers against allowlists, monitors tool invocations in real time, and requires approvals for sensitive operations.
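To make the allowlist-plus-approval idea concrete, here is a minimal Python sketch of the kind of check a proxy could run on each tool invocation. Everything in it is an assumption for illustration: the server names, the `SENSITIVE_TOOLS` set, and the `ToolCall` and `evaluate` names are hypothetical and do not reflect Beyond Identity's actual configuration format or API.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical allowlist and sensitive-tool set (illustrative values only).
ALLOWED_MCP_SERVERS = {"mcp.internal.example/jira", "mcp.internal.example/docs"}
SENSITIVE_TOOLS = {"db.write", "secrets.read"}


@dataclass(frozen=True)
class ToolCall:
    server: str                        # MCP server the agent wants to reach
    tool: str                          # tool name exposed by that server
    approved_by: Optional[str] = None  # set once a human approves the call


def evaluate(call: ToolCall) -> str:
    """Return 'deny', 'needs_approval', or 'allow' for one tool invocation."""
    if call.server not in ALLOWED_MCP_SERVERS:
        return "deny"  # unknown servers are rejected outright
    if call.tool in SENSITIVE_TOOLS and call.approved_by is None:
        return "needs_approval"  # sensitive operations wait for sign-off
    return "allow"
```

In this sketch the decision depends only on the server, the tool, and the approval state, so it can be logged and audited independently of whatever the agent was prompted to do.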

Prevent prompt injection attacks from succeeding, even when they execute

Prompt injections will bypass input filters, but they shouldn't be able to exfiltrate data or modify critical systems. With the AI Security Suite, policy enforcement blocks unauthorized actions at the API layer regardless of malicious instructions, while device posture checks ensure agents can only invoke approved tools based on the security state of the machine they're running on.
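As a rough illustration of why enforcement at the API layer holds up against injected instructions, the Python sketch below gates tool use on device posture alone. The posture fields, the tool names, and the policy table are hypothetical assumptions, not the product's actual policy model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DevicePosture:
    disk_encrypted: bool
    os_patched: bool


# Hypothetical policy table: tool name -> whether a healthy device is required.
TOOL_REQUIRES_HEALTHY_DEVICE = {
    "read_docs": False,
    "run_shell": True,
    "deploy": True,
}


def is_healthy(posture: DevicePosture) -> bool:
    """A device counts as healthy only if every posture check passes."""
    return posture.disk_encrypted and posture.os_patched


def may_invoke(tool: str, posture: DevicePosture) -> bool:
    """Deny tools missing from the policy; gate the rest on device posture."""
    if tool not in TOOL_REQUIRES_HEALTHY_DEVICE:
        return False
    return is_healthy(posture) or not TOOL_REQUIRES_HEALTHY_DEVICE[tool]
```

Note that `may_invoke` never looks at the prompt or the model's output. An injected instruction can change what the agent asks for, but not how the request is judged.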

Prevent credential leaks and data exfiltration

Developer AI tools have direct access to production systems, source code, and sensitive credentials; a single prompt injection could expose everything. The AI Security Suite prevents credentials from leaving developer workstations, while policy controls restrict which tools agents can call.

Secure AI access to SaaS tools with agentic capabilities

The AI Security Suite acts as a context-aware IdP that provides rich contextual information about users, their roles, and their permissions to AI assistants in tools like Jira and Slack. This prevents privilege escalation in which users query confidential information that their role and department should not allow them to access.

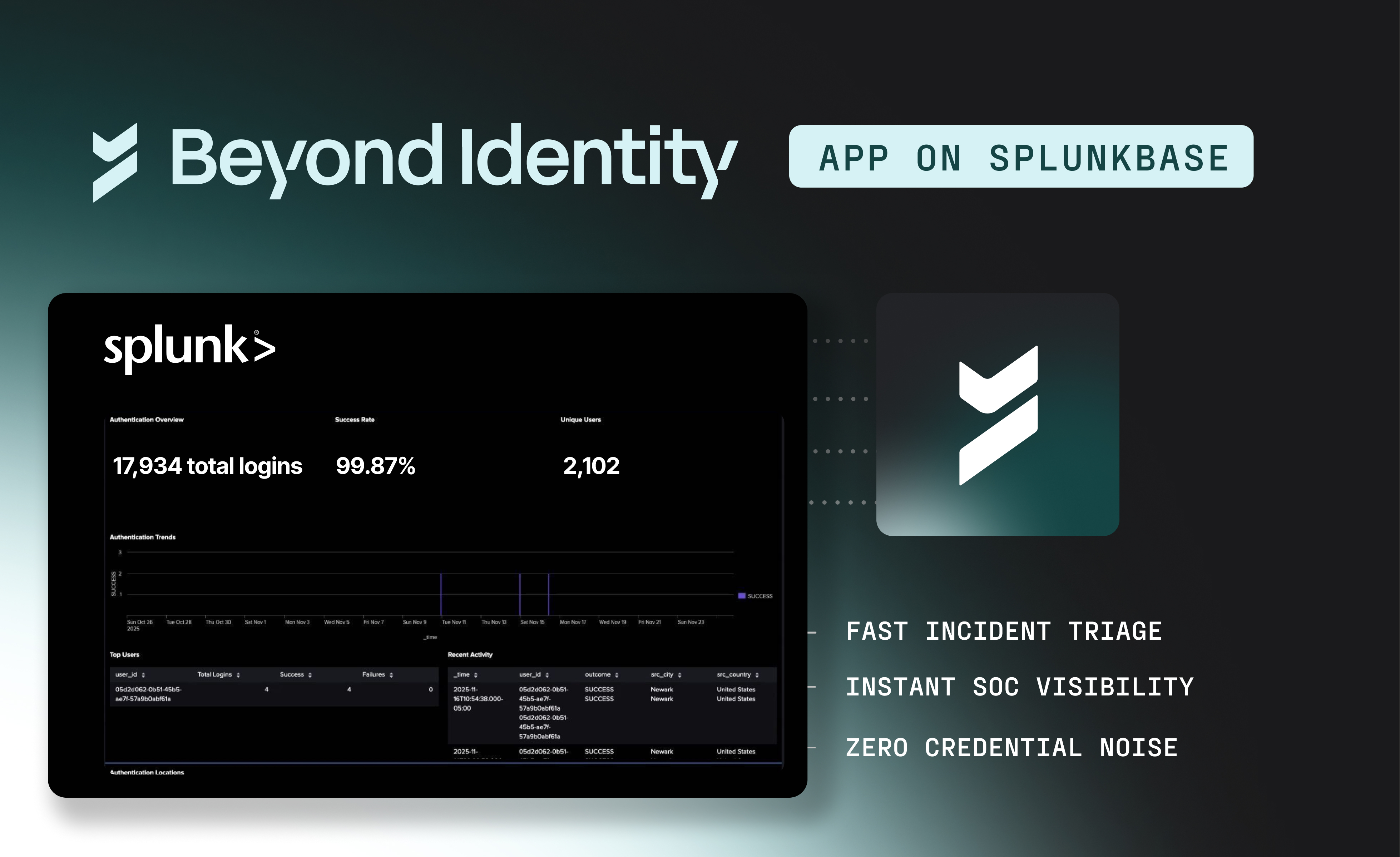

Reduce AI security incident response time from hours to minutes

Without agent-specific telemetry, investigating AI incidents means manually correlating logs across multiple systems to reconstruct what happened. With the AI Security Suite, centralized audit logs with cryptographic provenance show the complete attack chain in real time, so teams can immediately identify compromised agents and affected systems.

Gain visibility into LLM token usage and cost

Developers can easily overspend credits on shadow AI tools, and that unmonitored usage leaves blind spots for data exfiltration, policy violations, and runaway spend. With the AI Security Suite, all token and cost metrics are computed and stored in the Beyond Identity proxy layers and are tied to authenticated users, groups, and projects.
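A small Python sketch shows what tying usage to authenticated identities can look like once every request passes through one place. The record fields, the model names, and the per-1K-token prices are all made up for illustration; they are not real prices or the product's schema.

```python
from collections import defaultdict

# Hypothetical per-1K-token prices in USD (illustrative, not real pricing).
PRICE_PER_1K = {"model-a": 0.5, "model-b": 2.0}


def summarize(records):
    """Sum tokens and estimated cost per (user, project) pair."""
    totals = defaultdict(lambda: {"tokens": 0, "cost": 0.0})
    for record in records:
        key = (record["user"], record["project"])
        totals[key]["tokens"] += record["tokens"]
        totals[key]["cost"] += record["tokens"] / 1000 * PRICE_PER_1K[record["model"]]
    return dict(totals)


# Example usage records as a proxy might capture them (hypothetical shape).
records = [
    {"user": "alice", "project": "search", "model": "model-a", "tokens": 2000},
    {"user": "alice", "project": "search", "model": "model-b", "tokens": 1000},
    {"user": "bob", "project": "billing", "model": "model-a", "tokens": 4000},
]
usage = summarize(records)
```

Because each record already carries an authenticated user and project, spend can be grouped without guessing who was behind an API key.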

How Does It Work?

The AI Security Suite creates an identity layer for AI by combining device-bound identity with context-aware policy enforcement:

- Device-bound credentials: Every agent runs with a cryptographic credential tied to specific hardware: a TPM chip, Secure Enclave, or VM attestation module. The key cannot be extracted, copied, or transferred.

- Context-aware proxy: The AI Security Suite proxy sits between agents and the services they call, enriching every request with contextual information about the user, device, and permissions.

- Policy enforcement: Security teams define policies that govern what agents can do, which tools they can invoke, and what data they can access. These policies are enforced at the API layer in real time.

- Cryptographic audit trail: Every action an agent takes is logged with cryptographic proof of who initiated it, from which device, under what policies, and with what outcome.
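One common way to make an audit trail tamper-evident is a hash chain, where each entry commits to the one before it. The Python sketch below illustrates that general technique only; it is an assumption about how such a log could be built, not a description of how the AI Security Suite stores its records, and the event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(prev_hash, event):
    """Hash an event together with the hash of the previous entry."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log, event):
    """Append an event, linking it to the current tail of the log."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev, "hash": _digest(prev, event)})


def verify(log):
    """Recompute every link; any edited or reordered entry breaks the chain."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["event"]):
            return False
        prev = entry["hash"]
    return True
```

With this structure, changing a past entry (for example, flipping an outcome from "deny" to "allow") makes `verify` fail unless every later hash is recomputed too, which is what makes after-the-fact edits detectable.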

Get Started

Join early access! Enterprises can sign up to see a demo of the AI Security Suite and get early access to the product before it hits the general market.

FAQs

What makes the AI Security Suite different from traditional IAM?

Traditional IAM systems authenticate humans in browser sessions with short-lived tokens and human-in-the-loop approval. The AI Security Suite authenticates autonomous agents with hardware-bound credentials that cannot be copied or stolen. Every request carries a cryptographic proof signed by the agent's private key, which lives in a TPM or Secure Enclave. This helps prevent exfiltration attacks like the Claude Cowork prompt injection exploit, in which the attacker supplied their own API key rather than stealing the victim's credentials.

What is device-bound identity and how does it work?

Device-bound identity means the agent's private key is cryptographically tied to specific hardware: a TPM chip, Secure Enclave, or VM attestation module. The key cannot be extracted, copied, or transferred. Every request the agent makes must be signed by that key, proving it originated from the authorized device.
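The sign-then-verify flow can be sketched in a few lines of Python. One loud caveat: the standard library has no TPM or Secure Enclave access, so this sketch uses HMAC with an in-memory secret as a stand-in for the hardware-backed asymmetric signature described above. With real hardware, the private key stays inside the chip and the verifier holds only the public key. The function names and the message layout are hypothetical.

```python
import hashlib
import hmac
import secrets

# Stand-in for a hardware-held key. A real device-bound key is never
# readable by software like this; it is used here only to show the flow.
DEVICE_KEY = secrets.token_bytes(32)


def sign_request(key, method, path, body):
    """Sign the method, path, and a hash of the body (hypothetical layout)."""
    body_hash = hashlib.sha256(body).hexdigest()
    message = "\n".join([method, path, body_hash]).encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_request(key, method, path, body, signature):
    """Recompute the signature and compare in constant time."""
    expected = sign_request(key, method, path, body)
    return hmac.compare_digest(expected, signature)


# A request signed on the device verifies; a tampered body does not.
signature = sign_request(DEVICE_KEY, "POST", "/v1/tools/search", b'{"q": "roadmap"}')
```

A request replayed with a different body, or signed with any key other than the device's, fails verification, which is the property that lets a proxy reject traffic that did not originate from the authorized device.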

