Securing Agentic AI: From MCPs, Tool Use, to Shadow API Key Sprawl

Key Takeaways

Full Transcript

Happy New Year, boys and girls. Welcome back to the Hacker News webinar. I hope everyone had a great and amazing New Year. We're in the month of January where that means that everyone is starting fresh.

But you know what isn't fresh? AI. It's been around for a while. It's a buzzword.

It's a thing. Right? Sarah, welcome back to the hacker news webinar. Colton, welcome.

So, folks, we've got a packed webinar today. We've got a live demo that Colton is gonna execute flawlessly because, you know, it's live and nothing ever goes wrong when we do anything live here ever.

But in that event, thank you all for taking time out of your busy schedules to be here with us today. Our friends from Beyond Identity and Sarah, who's been a regular at this point, we should just have her cohost these webinars with us, is back to talk a little bit about securing AgenTik AI. The last one we had was Sarah, Jenk, and I. And if you missed it, it's in the December section.

We ended the year with Sarah. So she's back in the New Year to kick it off. The feedback was phenomenal from that webinar. So, we're gonna be talking about that.

Questions are welcome. They're gonna be answered after the webinar. Obviously, this webinar runs four different times, so there's no possible way. We have four different hours to do this webinar.

So we'll be answering all your questions via email after the webinar. Please ask away. Don't be afraid to do so. Additionally, your comments during the webinar entertain me very much, because I get to see those. So I love them. So put them in there. Let us know how you're feeling.

We'll get to all of that. Let me introduce Sarah Cicchetti. She is Sarah Cicchetti. Right?

We we gotta we gotta do it with the Italian. She's the director of product strategy at our friends over at Beyond Identity. And Colton, you're gonna kill me. How how do we say this again, man?

I'm sorry.

I should have written it down better.

Shanaki.

Shanaki. It's it's it's it's carrier. It's it's easier than it looks. I think whoever spelled your name, you should have a conversation with them.

I know it's probably, like like, seven hundred and twenty generations, but still, I'm kidding. I'm joking. Don't don't change it ever. So alright, folks.

We're gonna be talking about securing AI agents from MCP's tool used to shadow API key scroll key sprawl. So, Sarah Colton, I'll hand it off to y'all. Have a great webinar, folks. Awesome.

So I'm gonna tee up the problem here, and then Colton's gonna go over the solution.

So let's go ahead and go to the next slide.

So, basically, what we're looking at right now is a a situation amongst IT teams where productivity has increased dramatically even in the last five weeks. I don't know if anybody played with Opus four point five over the holidays, and especially with, like, the new, like, Ralph Wiggum looping tools that have come out. Like, it is incredible how much more you can get done in an afternoon than you used to be able to and how much higher quality. So amongst our customers, we are seeing ten times faster, coding with agents, three times more output.

And, obviously, you can run them twenty four seven. Right? So if you don't start a rough week of lip going before you go to bed, like, what are you doing? Why are you even a coder?

So we're seeing, at least forty percent, time saved among coders right now. So we're seeing this huge shift in how development teams are working day to day, where they are getting, feature requests on x and within hours implementing those feature requests because they're able to use these agents to get things done so much more quickly. And that's, like, done done done done with unit tests, with documentation, completely, end to end, features that are that are complete within hours. So, companies that are not adopting this are really falling behind those who are.

Right? It's it's getting very, very competitive right now to be a SaaS company. If you're not, responding to feature requests within hours, if you're if you have any existing bug reports, why haven't you just put them into cloud? Why haven't you just put them into Cloud Code and solved them?

Right? There's no excuse for having a bug in your software these days.

The catch comes in that the security teams are kind of getting steamrolled because these new tools have made people so productive, so amazingly, useful that, security teams really can't say no. Right? How can you say no to someone who says, I can ten x my productivity, and then you're supposed to sit there and say, like, actually, I'm really worried about security. Please don't do that. Right? So companies can't wait for security to catch up, so they're adopting anyway. And so we're gonna talk a little bit about the dangers that come, with this, and then Colton will talk to you about how we're gonna mitigate those.

So traditionally, among CSOs, what we're seeing right now is, like, they have two options. Option a is like, okay. We're gonna ban AI altogether. Like, it is so dangerous to our organization that that no one gets to use it.

And what we see is that developers use it anyway. Right? So they they'll go on their personal laptops and connect it to your corporate environment and and have Cloud Code do its thing, and then they will manually type in all the all the code that Cloud came up with, right, and do that shadow IT thing. It's even more dangerous because they're they're going through a loop of personal technology.

Or they're doing it in Slack.

Or they're doing it in Slack.

Yeah. That's that's the one where they're committing everything. Like, I'm telling you, as a CISO, I've seen the like, we don't ban AI.

I think anyone who ban I was shaking my head when he said that because I don't think anyone should be banning AI.

Really?

Ban AI.

It's it's kinda like the people who said the Internet was a fad, and people will go back to newspapers at some point. Right? Like, cut it. AI is here to stay, and it's definitely not going anywhere. You're likely gonna go somewhere if you don't learn how to securely adopt the business to AI at the speed the business needs as a practitioner. So just just putting that out there.

But what they'll do to kinda give you the workaround, they'll use Slack because they just will go and and and and and commit all the code through their Slack and then into your corporate environment. You never see it. You're not looking at it because you're not monitoring Slack to that level.

Yeah.

They'll just copy and paste it right into into there, and that's how they get it back and forth. Yeah.

Terrifying. Right? Okay. So as a CISO, you kind of have to say, okay. I'm going to allow AI.

But, what you have to understand is that if your developers are using Cloud Code, they're connecting it using model context protocol to your email, to your databases, to your ticketing system, to all of your SaaS apps. And there you have, like, traditional security tools. If you're using your CrowdStrike and your SIM and all of the things that you have invested in, they have zero visibility into what those connections look like and what's going on in that protocol exchange. It's not like a traditional API where you can watch that network traffic go back and forth.

You have no visibility into what those agents are doing and what code they are executing on a local machine and what information they are getting access to. And so, if you are just allowing AI with no tools, like, you are gonna have trouble sleeping at night because you are just opening up your environment to these huge risks.

And so, on the next slide, we're gonna talk a little bit about these are the top four risks that we have seen, and we're gonna go into each of these. So, road tool use where the agent executes commands, data exfiltration where it's sending sensitive data somewhere else, API key sprawl, where it is exposing sensitive credentials, and then malicious MCP servers where the the MCP server itself is actually malware.

We've seen all of these in the wild, this is really where secure traditional security fails. As I said, the tools that you've invested in, your DLP tools, your EDR tools, your SIM tools, they really don't catch these things. They're not prepared for these things. They're not built to to monitor this and to control this.

And, so it's really an entirely new world that we need new solutions to fix. So let's go over each of these, risks. So the first is rogue tool use. So by that, we mean that an a it executes commands that were not authorized by the user, and the user doesn't know what they're authorizing.

So in Claude, you have the ability to let it be quite independent on its own and and find and use tools, without your consent. And it is impossible for you to tell whether any given tool is legitimate or not. So we've seen CVEs that allow attackers to run malicious code and do remote code execution on a machine, and those that remote code execution got used by millions of developers. So this is not a theoretical attack.

This is something we have seen in the wild. We've seen for real where a human developer working day to day would never make this command.

An agent executes it because they don't understand the what they are authorizing in the moment. And so that kind of rogue tool use is really, really dangerous.

The second one we're looking at is data exfiltration. So traditional, data loss prevention systems, DLP systems can't distinguish between legitimate and malicious data transfers, when the agent is compromised. So if an agent's API calls, matched and the the API call matched expected parameters, the breaches like, the DLP system won't send you an alert. It won't, tell you that it's DLP because it looked like a valid API call.

And so agents are capable of sending sensitive and proprietary data to external unsecured services without authorization. Additionally, MCP has a capacity for servers and events to do something called elicitation. And what elicitation means is when you are connecting to an MCP server that's remote, it can ask information of the memory of your AI. So the AI where you research health things, where you research legal things, where you research CVEs that you might have in your environment, your AI memory knows all of that, and the MCP server can exfiltrate that information back to the MCP server if that MCP server is compromised or if it is run by an attacker from the beginning. That sort of data exfiltration can be very, very damaging. We saw an incident in twenty twenty four where there was an health care AI leak that cost fourteen million dollars in fines and three months of exposure. So these are things that are that are real risks.

The third one we're seeing is API key sprawl. And in some ways, this is a good thing. It means that people who are not traditionally developers are using, Claude code and using Vibe coding to make their own things, which is great except that they don't know how secrets work, and they don't know that API keys are supposed to be protected. And so they go and commit them into GitHub, and they go and release them as open source on the Internet.

And so you are seeing, marketing managers. You're seeing HR people who are just making their own tools. They think they're doing a great job and being more productive workers, which they are. That is true.

But they've never had to deal with API keys before. And so when they go into their SaaS tool and say, oh, I'm just gonna download this API key. I'm gonna give it to Claude. Claude could do my work for me.

This is great. They don't realize that that API key can then leak. Right? So we have seen an example in Samsung where, there was a code leak via ChatGPT where employee pasted confidential source code right into ChatGPT causing AI exposure, credential compromise, and then somebody has to go in and manually rotate those keys.

Right? And so we really don't wanna see, employees having direct access to those API keys. That's quite dangerous.

And then the last one, I think, is one that most people are sleeping on that they are not aware of in terms of risks to their environment, and that's malicious MCP servers. So when a agent connects to a malicious or compromised MCP server, it can inject harmful instructions or steal data.

So there is no publisher identity verification for an MCP server. You do not know whether any given MCP server is, valid or not, whether it is the official MCP server for that thing or whether it is not. And there's no way to tell if there's been a supply chain attack. Right? If some library that that MCP server used got compromised or if the code itself got compromised, if that GitHub repository got taken over by someone else.

Right? And there's no way to, isolate that MCP server if it gets compromised. And so, we're seeing a number of different, attacks in this. The the first one we wanted to talk about was called Postmark.

This was, disguised as a legitimate email tool, but it was in fact malware. It was secretly BCC ing every email to the attacker and had sixteen hundred downloads before anyone figured out what was going on. And the so the attacker got all of those emails with no one knowing. Right?

No one could tell that this MCP server was doing that in the background.

And then the second one we wanted to bring up was WhatsApp. And so this was a tool poisoning attack. So this was a completely valid MCP server that got compromised and got malware added to it that added hidden instructions that would exfiltrate the entire message history to the attacker. And so this sort of of MCP compromise is something that is entirely possible in your environment. There's no way for you to know what MCP servers your developers are using today and whether or not they have been compromised. And so this is really one that a lot of CSOs are sleeping on that they are not aware that this is even attack that can happen in their environment.

And, hopefully hopefully, now you know and you'll be aware of it. But, I think a lot of companies are gonna get compromised by this one before it becomes kind of mainstream knowledge that this is this is a serious vulnerability in their environment.

So with that, I will hand off to, mister Shnacki. See, I can do it, James.

Thank you. Thank you.

And, he's gonna tell you about our Beyond Identity solutions.

It's not fair. You work with Colton every day.

Well, I'm curious.

You get to work with Colton every day.

And then and then number two, like, WTF.

It's a It's a New Year's era. I thought you and I were starting new.

We know each other well enough that I can roast you a little bit.

You can. You're more than welcome to. Okay. Ultimate luck. Alright.

I'll, talk about, like, the three pillars of our solution, which are identify visibility and control.

So our our core beyond identity tech, we cryptographically tie the human identity to the device.

And in this new age of AI, we're extending that chain of trust to the agents that human users are invoking on their desktops or in the cloud, and we're providing identity for that so that you can trace Anytime an agent does something, you can trace that now back to the device that the agent was running on and the human that, invoked the agent.

So now that we're able to provide, identity to these new things like agents and models and MCP servers, we now have all of this visibility into what is actually, going on in your AI stack.

So, like, what agents are being invoked?

What tools do those agents have available? Like, is that agent connected to an MCP server or some local tools that are provided with Claude?

And, now that you have this visibility, you can implement deterministic access controls inside of your AI stack. So if you see some models that your developers are using, you could block that, or you see a suspicious looking MCP server. You can just write a policy saying, you know, this MCP server is not allowed.

And I'll kinda get into sort of, like, how the solution is deployed. So so it first starts off with, we run an authenticator on the end user device, and that is how we tie the human identity to the device identity. And now we're extending that to create identity for the agents that are running on your users' desktops.

So when a user starts interacting with a desktop agent like Claude Code or Codex or something, all of that AI traffic is now routed through what we're calling our AI proxy. So now we're able this is what gives us the visibility into what models are being used, what tools are being used, audit logs for everything, the ability to write policy on what tools are allowed, denied.

And, now let's, get into the demo demo time.

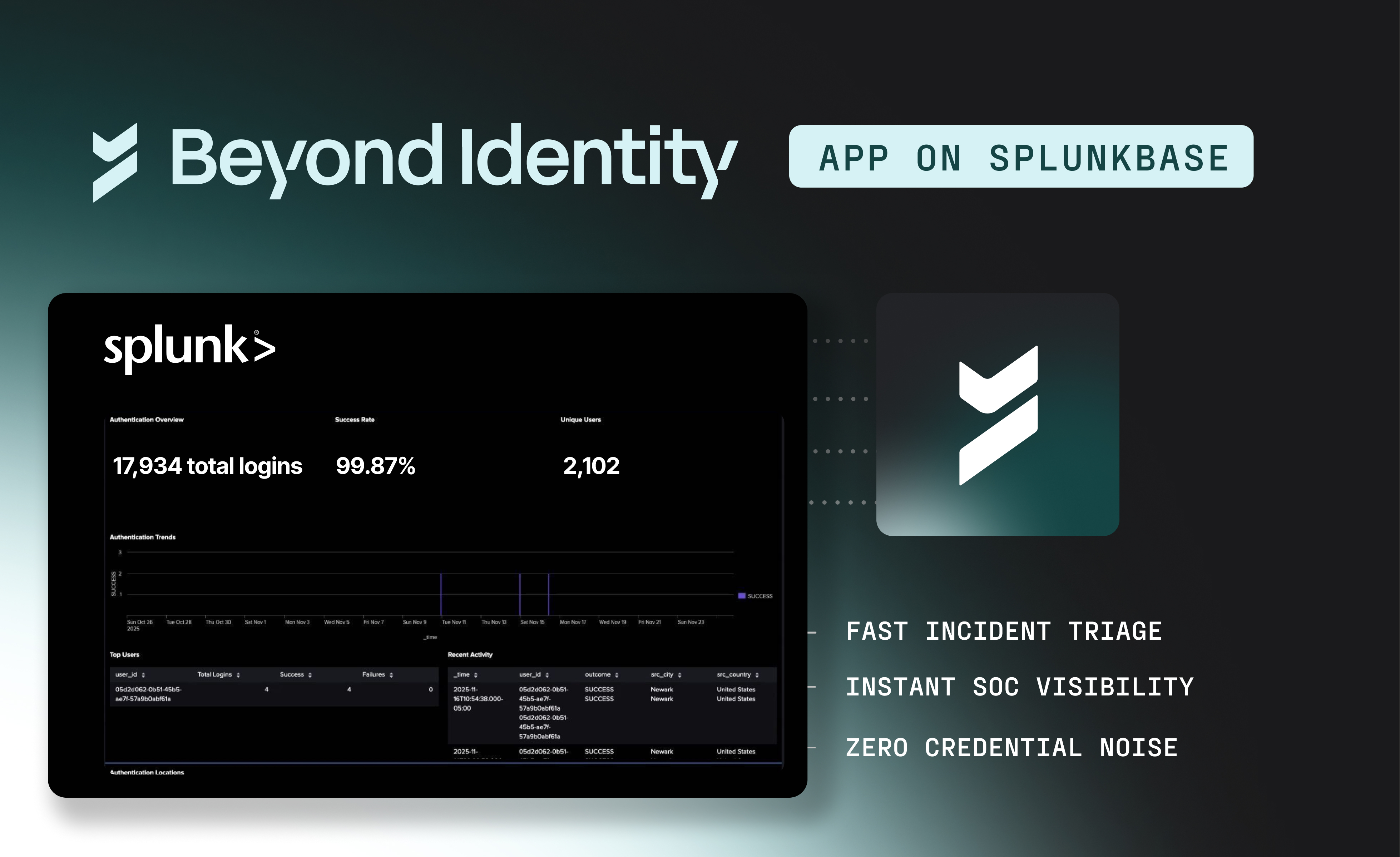

Okay. So this first screen is just like a dashboard showing all of the AI activity going on in your environment.

And there's some pretty basic things like what are the most used models, what are the most used agents, what are the most used tools. And remember, like, MCP server, it's it's just a tool. Just another name for a tool.

So you can see that, and you can see, which users are using those tools.

So I like to pull up this visual of one of our active users.

You know, we can we can break it down to see that this corporate identity, Jing, we see that she's been using these four different versions of Claude Code, and we see that Claude Code has been invoking these tools.

Now some of these tools like Bash, they they really can do anything. You we're kind of in this new territory where it's it's like, the perimeter here is sort of unbounded because you're relying on the LLM to tell the tool what to do.

So this is just a nice visual I like to show, showing, like, the chain from the user to the device to the agent and which tools they're using.

So as more users start to use the solution, we're we start to create this sort of catalog of all the tools that are that are being made to the users. And a lot of these tools already come packaged in, like, Claude code. So, like, Bash, for example, we're able to see exactly, you know, what the description and the purpose of the tool is.

So now we have an inventory of what models are being used, what agents are being used, who are using the agents, and then what tools are being used. So the tools could be whatever is packaged with Claude or maybe someone brought their own MCP servers. You can see exactly, you know, what the purpose of that MCP server is.

So I'm gonna go into a little demo now showing, like, well, what what exactly is going on when an agent invokes a tool? So I'm I'm just in Claude code right now. I'm gonna say what files are in my directory.

So what's happening here is the agent is sending a message up to the LLM in the cloud saying the user query is asking about files and their directory, and they also have this set of baked in tools such as Bash. So the LLM will process that request, and then it will send an action back to the agent saying, you know, use this bash command to run l s dot or dash l a, and then that will get executed.

So the other visibility we're able provide is not just, like, what tools the agent has available to them, but every time an agent invokes one of those tools, what exactly is that tool running on the desktop?

So in this example, we can see that Colton, me, kicked off this tool using the clawed code CLI, and here are the inputs to the tool, and here are the outputs for the tool.

So let's say you you're seeing some tool usage that you don't approve of or, like, an MCP server, that that you you don't really considered authorized by your organization.

You can write policy that says that you just want to block that tool. You wanna block users from using that tool.

So then when I go when the user goes into the terminal again hey. Can you look again at what files are in my directory?

We now have this policy written saying that the Bash tool is not allowed for that user on that device. And, like, what the user sees is basically, like, you know, this tool you're trying to invoke has been blocked by the system administrator.

Let's see here.

Alright. Let me just undeny that.

Yeah. So you can write more advanced policies, like, by the user, by the device.

Maybe you have also not a desktop agent, but you have agents spun up, deployed in your cloud cluster.

You can now provide visibility and policy on all of the actions and tools that your cloud containers are allowed to do. And, like, I would say a pretty common one is you don't like, there's this one tool called WebFetch that comes packaged with a lot of these agents, and that's probably something you don't want running in your your cloud to just go do random queries on the web. So you could just write a tool policy like that.

So we have all the you know, we're seeing all of this data. We have all of this visibility, and we can provide access control to it.

And we also have, like, the detailed, basically, the detailed logs for all of the AI traffic that is going on in your environment.

And that's that's pretty much it for the demo.

I didn't so what we've covered in the demo was we're able to provide identity for the human, the device, the agent that's running on the device, and the tools that this agent has access to, provide visibility into your whole AI stack, and then you can implement access controls in certain points in that AI stack. Like, what model should this user be allowed to use? What agents can they use, not use? Tools, especially like rogue MCP servers, you can just squash those remotely from this interface.

I think that's oh, did the demo.

So, yeah, it's the what we're we want people to roll out AI more quickly, but also securely.

So by providing identity layer and visibility and access control to this new AI stack that, whether you know it or not, is probably already deployed in your environment.

We've had a cup couple of customers who are already using us, and this is just, you know, some of their feedback.

And if you want to learn more, if you wanna get started, go visit us at beyond identity dot a I.

Well, Colton, Sarah, you guys, once again, twenty six minutes, almost twenty seven, knocking it out of the ballpark in terms of timing.

I will give you that. And, also, knocking it out of the ballpark in terms of actually managing the complexity of how AI is being used by empowering practitioners with the right tools. That goes a long way. Folks, go check out beyond identity dot a I, scan that QR code. It's not gonna run any scripts in the background of your device to accidentally steal all of your information. I promise we checked.

I can almost guarantee it, but don't take me to the bank with that one.

But but, Sarah, Colton, thank you so much for coming on the Hacker News webinars and kicking off this year with another amazing webinar and and more great work that Beyond Identity is doing to help security practitioners be better at what they're supposed to do in a time where speed matters and visibility and mitigation matters as well.

I'll just say this as we end it and end this webinar here.

As security practitioners, we are business enablers.

That's it.

We're not when I hear a security practitioner say, I shut it down, I cringe at that prideful moment because the business does not exist without generating revenue. And if it doesn't generate revenue, you don't have a job. And you're the first job going. Just wanna put that clear because they're gonna say, why aren't we doing that revenue?

They're blocking it. Who's blocking it? See you later. If you don't believe me, study the history of Equifax pre the twenty seventeen breach.

Just study it. Just go back and listen to insiders from Equifax who used to talk pre not the sister that was there during the breach, but the one before who locked everything down and said no to a lot of stuff and created all these backdoors.

The new system came in and overcompensated by being really, really loose, which led to Apache struts not getting patched in time, which led to, at the time, what was the most significant data breach in American history at that time. I mean, this was almost ten years ago. So, you know, by the way, a lot of water have gone under the bridge from Equifax to today. But understand that that's the same thing that applies now with AI.

We need to be business enablers. Tools like what we saw today help us do that. And when we present it effectively, we'll get the money to do it as well. So just wanna put that out there.

If you need more direction on how to do that, you can reach out to me. I'm available on LinkedIn. Reach out to Sarah and Colton. They'll be happy to help you as well figure that out.

But you've got you've gotta make the business case, and you'll get the money for it if it's supporting generating more revenue. What the business is doing is gonna generate more revenue. They'll spend the money to make sure they do it right every time.

Every time. Alright. Thank you all for tuning in. I hope that was helpful. I hope you enjoyed Sarah and Colton's presentation.

Great demo by Colton, by the way. I was glued to my screen watching it. So so really do appreciate it. Make sure to check out the hacker news dot com forward slash webinars for a lot more webinars coming this year.

I mean, we are going insane this year. You get to dictate what we talk about.

So go let us know at the hacker news dot com forward slash webinar. This webinar is available on demand also after the fact, so go check it out there. Thank you all for tuning in. Have a great New Year, and stay cyber safe.

Key Takeaways

Full Transcript

Happy New Year, boys and girls. Welcome back to the Hacker News webinar. I hope everyone had a great and amazing New Year. We're in the month of January where that means that everyone is starting fresh.

But you know what isn't fresh? AI. It's been around for a while. It's a buzzword.

It's a thing. Right? Sarah, welcome back to the hacker news webinar. Colton, welcome.

So, folks, we've got a packed webinar today. We've got a live demo that Colton is gonna execute flawlessly because, you know, it's live and nothing ever goes wrong when we do anything live here ever.

But in that event, thank you all for taking time out of your busy schedules to be here with us today. Our friends from Beyond Identity and Sarah, who's been a regular at this point, we should just have her cohost these webinars with us, is back to talk a little bit about securing AgenTik AI. The last one we had was Sarah, Jenk, and I. And if you missed it, it's in the December section.

We ended the year with Sarah. So she's back in the New Year to kick it off. The feedback was phenomenal from that webinar. So, we're gonna be talking about that.

Questions are welcome. They're gonna be answered after the webinar. Obviously, this webinar runs four different times, so there's no possible way. We have four different hours to do this webinar.

So we'll be answering all your questions via email after the webinar. Please ask away. Don't be afraid to do so. Additionally, your comments during the webinar entertain me very much, because I get to see those. So I love them. So put them in there. Let us know how you're feeling.

We'll get to all of that. Let me introduce Sarah Cicchetti. She is Sarah Cicchetti. Right?

We we gotta we gotta do it with the Italian. She's the director of product strategy at our friends over at Beyond Identity. And Colton, you're gonna kill me. How how do we say this again, man?

I'm sorry.

I should have written it down better.

Shanaki.

Shanaki. It's it's it's it's carrier. It's it's easier than it looks. I think whoever spelled your name, you should have a conversation with them.

I know it's probably, like like, seven hundred and twenty generations, but still, I'm kidding. I'm joking. Don't don't change it ever. So alright, folks.

We're gonna be talking about securing AI agents from MCP's tool used to shadow API key scroll key sprawl. So, Sarah Colton, I'll hand it off to y'all. Have a great webinar, folks. Awesome.

So I'm gonna tee up the problem here, and then Colton's gonna go over the solution.

So let's go ahead and go to the next slide.

So, basically, what we're looking at right now is a a situation amongst IT teams where productivity has increased dramatically even in the last five weeks. I don't know if anybody played with Opus four point five over the holidays, and especially with, like, the new, like, Ralph Wiggum looping tools that have come out. Like, it is incredible how much more you can get done in an afternoon than you used to be able to and how much higher quality. So amongst our customers, we are seeing ten times faster, coding with agents, three times more output.

And, obviously, you can run them twenty four seven. Right? So if you don't start a rough week of lip going before you go to bed, like, what are you doing? Why are you even a coder?

So we're seeing, at least forty percent, time saved among coders right now. So we're seeing this huge shift in how development teams are working day to day, where they are getting, feature requests on x and within hours implementing those feature requests because they're able to use these agents to get things done so much more quickly. And that's, like, done done done done with unit tests, with documentation, completely, end to end, features that are that are complete within hours. So, companies that are not adopting this are really falling behind those who are.

Right? It's it's getting very, very competitive right now to be a SaaS company. If you're not, responding to feature requests within hours, if you're if you have any existing bug reports, why haven't you just put them into cloud? Why haven't you just put them into Cloud Code and solved them?

Right? There's no excuse for having a bug in your software these days.

The catch comes in that the security teams are kind of getting steamrolled because these new tools have made people so productive, so amazingly, useful that, security teams really can't say no. Right? How can you say no to someone who says, I can ten x my productivity, and then you're supposed to sit there and say, like, actually, I'm really worried about security. Please don't do that. Right? So companies can't wait for security to catch up, so they're adopting anyway. And so we're gonna talk a little bit about the dangers that come, with this, and then Colton will talk to you about how we're gonna mitigate those.

So traditionally, among CSOs, what we're seeing right now is, like, they have two options. Option a is like, okay. We're gonna ban AI altogether. Like, it is so dangerous to our organization that that no one gets to use it.

And what we see is that developers use it anyway. Right? So they they'll go on their personal laptops and connect it to your corporate environment and and have Cloud Code do its thing, and then they will manually type in all the all the code that Cloud came up with, right, and do that shadow IT thing. It's even more dangerous because they're they're going through a loop of personal technology.

Or they're doing it in Slack.

Or they're doing it in Slack.

Yeah. That's that's the one where they're committing everything. Like, I'm telling you, as a CISO, I've seen the like, we don't ban AI.

I think anyone who ban I was shaking my head when he said that because I don't think anyone should be banning AI.

Really?

Ban AI.

It's it's kinda like the people who said the Internet was a fad, and people will go back to newspapers at some point. Right? Like, cut it. AI is here to stay, and it's definitely not going anywhere. You're likely gonna go somewhere if you don't learn how to securely adopt the business to AI at the speed the business needs as a practitioner. So just just putting that out there.

But what they'll do to kinda give you the workaround, they'll use Slack because they just will go and and and and and commit all the code through their Slack and then into your corporate environment. You never see it. You're not looking at it because you're not monitoring Slack to that level.

Yeah.

They'll just copy and paste it right into into there, and that's how they get it back and forth. Yeah.

Terrifying. Right? Okay. So as a CISO, you kind of have to say, okay. I'm going to allow AI.

But, what you have to understand is that if your developers are using Cloud Code, they're connecting it using model context protocol to your email, to your databases, to your ticketing system, to all of your SaaS apps. And there you have, like, traditional security tools. If you're using your CrowdStrike and your SIM and all of the things that you have invested in, they have zero visibility into what those connections look like and what's going on in that protocol exchange. It's not like a traditional API where you can watch that network traffic go back and forth.

You have no visibility into what those agents are doing and what code they are executing on a local machine and what information they are getting access to. And so, if you are just allowing AI with no tools, like, you are gonna have trouble sleeping at night because you are just opening up your environment to these huge risks.

And so, on the next slide, we're gonna talk a little bit about these are the top four risks that we have seen, and we're gonna go into each of these. So, road tool use where the agent executes commands, data exfiltration where it's sending sensitive data somewhere else, API key sprawl, where it is exposing sensitive credentials, and then malicious MCP servers where the the MCP server itself is actually malware.

We've seen all of these in the wild, this is really where secure traditional security fails. As I said, the tools that you've invested in, your DLP tools, your EDR tools, your SIM tools, they really don't catch these things. They're not prepared for these things. They're not built to to monitor this and to control this.

And, so it's really an entirely new world that we need new solutions to fix. So let's go over each of these, risks. So the first is rogue tool use. So by that, we mean that an a it executes commands that were not authorized by the user, and the user doesn't know what they're authorizing.

So in Claude, you have the ability to let it be quite independent on its own and and find and use tools, without your consent. And it is impossible for you to tell whether any given tool is legitimate or not. So we've seen CVEs that allow attackers to run malicious code and do remote code execution on a machine, and those that remote code execution got used by millions of developers. So this is not a theoretical attack.

This is something we have seen in the wild. We've seen for real where a human developer working day to day would never make this command.

An agent executes it because they don't understand the what they are authorizing in the moment. And so that kind of rogue tool use is really, really dangerous.

The second one we're looking at is data exfiltration. So traditional, data loss prevention systems, DLP systems can't distinguish between legitimate and malicious data transfers, when the agent is compromised. So if an agent's API calls, matched and the the API call matched expected parameters, the breaches like, the DLP system won't send you an alert. It won't, tell you that it's DLP because it looked like a valid API call.

And so agents are capable of sending sensitive and proprietary data to external unsecured services without authorization. Additionally, MCP has a capacity for servers and events to do something called elicitation. And what elicitation means is when you are connecting to an MCP server that's remote, it can ask information of the memory of your AI. So the AI where you research health things, where you research legal things, where you research CVEs that you might have in your environment, your AI memory knows all of that, and the MCP server can exfiltrate that information back to the MCP server if that MCP server is compromised or if it is run by an attacker from the beginning. That sort of data exfiltration can be very, very damaging. We saw an incident in twenty twenty four where there was an health care AI leak that cost fourteen million dollars in fines and three months of exposure. So these are things that are that are real risks.

The third one we're seeing is API key sprawl. And in some ways, this is a good thing. It means that people who are not traditionally developers are using, Claude code and using Vibe coding to make their own things, which is great except that they don't know how secrets work, and they don't know that API keys are supposed to be protected. And so they go and commit them into GitHub, and they go and release them as open source on the Internet.

And so you are seeing, marketing managers. You're seeing HR people who are just making their own tools. They think they're doing a great job and being more productive workers, which they are. That is true.

But they've never had to deal with API keys before. And so when they go into their SaaS tool and say, oh, I'm just gonna download this API key. I'm gonna give it to Claude. Claude could do my work for me.

This is great. They don't realize that that API key can then leak. Right? So we have seen an example in Samsung where, there was a code leak via ChatGPT where employee pasted confidential source code right into ChatGPT causing AI exposure, credential compromise, and then somebody has to go in and manually rotate those keys.

Right? And so we really don't wanna see, employees having direct access to those API keys. That's quite dangerous.

And then the last one, I think, is one that most people are sleeping on that they are not aware of in terms of risks to their environment, and that's malicious MCP servers. So when a agent connects to a malicious or compromised MCP server, it can inject harmful instructions or steal data.

So there is no publisher identity verification for an MCP server. You do not know whether any given MCP server is, valid or not, whether it is the official MCP server for that thing or whether it is not. And there's no way to tell if there's been a supply chain attack. Right? If some library that that MCP server used got compromised or if the code itself got compromised, if that GitHub repository got taken over by someone else.

Right? And there's no way to, isolate that MCP server if it gets compromised. And so, we're seeing a number of different, attacks in this. The the first one we wanted to talk about was called Postmark.

This was, disguised as a legitimate email tool, but it was in fact malware. It was secretly BCC ing every email to the attacker and had sixteen hundred downloads before anyone figured out what was going on. And the so the attacker got all of those emails with no one knowing. Right?

No one could tell that this MCP server was doing that in the background.

And then the second one we wanted to bring up was WhatsApp. And so this was a tool poisoning attack. So this was a completely valid MCP server that got compromised and got malware added to it that added hidden instructions that would exfiltrate the entire message history to the attacker. And so this sort of of MCP compromise is something that is entirely possible in your environment. There's no way for you to know what MCP servers your developers are using today and whether or not they have been compromised. And so this is really one that a lot of CSOs are sleeping on that they are not aware that this is even attack that can happen in their environment.

And, hopefully hopefully, now you know and you'll be aware of it. But, I think a lot of companies are gonna get compromised by this one before it becomes kind of mainstream knowledge that this is this is a serious vulnerability in their environment.

So with that, I will hand off to, mister Shnacki. See, I can do it, James.

Thank you. Thank you.

And, he's gonna tell you about our Beyond Identity solutions.

It's not fair. You work with Colton every day.

Well, I'm curious.

You get to work with Colton every day.

And then and then number two, like, WTF.

It's a It's a New Year's era. I thought you and I were starting new.

We know each other well enough that I can roast you a little bit.

You can. You're more than welcome to. Okay. Ultimate luck. Alright.

I'll, talk about, like, the three pillars of our solution, which are identify visibility and control.

So our our core beyond identity tech, we cryptographically tie the human identity to the device.

And in this new age of AI, we're extending that chain of trust to the agents that human users are invoking on their desktops or in the cloud, and we're providing identity for that so that you can trace Anytime an agent does something, you can trace that now back to the device that the agent was running on and the human that, invoked the agent.

So now that we're able to provide, identity to these new things like agents and models and MCP servers, we now have all of this visibility into what is actually, going on in your AI stack.

So, like, what agents are being invoked?

What tools do those agents have available? Like, is that agent connected to an MCP server or some local tools that are provided with Claude?

And, now that you have this visibility, you can implement deterministic access controls inside of your AI stack. So if you see some models that your developers are using, you could block that, or you see a suspicious looking MCP server. You can just write a policy saying, you know, this MCP server is not allowed.

And I'll kinda get into sort of, like, how the solution is deployed. So so it first starts off with, we run an authenticator on the end user device, and that is how we tie the human identity to the device identity. And now we're extending that to create identity for the agents that are running on your users' desktops.

So when a user starts interacting with a desktop agent like Claude Code or Codex or something, all of that AI traffic is now routed through what we're calling our AI proxy. So now we're able this is what gives us the visibility into what models are being used, what tools are being used, audit logs for everything, the ability to write policy on what tools are allowed, denied.

And, now let's, get into the demo demo time.

Okay. So this first screen is just like a dashboard showing all of the AI activity going on in your environment.

And there's some pretty basic things like what are the most used models, what are the most used agents, what are the most used tools. And remember, like, MCP server, it's it's just a tool. Just another name for a tool.

So you can see that, and you can see, which users are using those tools.

So I like to pull up this visual of one of our active users.

You know, we can we can break it down to see that this corporate identity, Jing, we see that she's been using these four different versions of Claude Code, and we see that Claude Code has been invoking these tools.

Now some of these tools like Bash, they they really can do anything. You we're kind of in this new territory where it's it's like, the perimeter here is sort of unbounded because you're relying on the LLM to tell the tool what to do.

So this is just a nice visual I like to show, showing, like, the chain from the user to the device to the agent and which tools they're using.

So as more users start to use the solution, we're we start to create this sort of catalog of all the tools that are that are being made to the users. And a lot of these tools already come packaged in, like, Claude code. So, like, Bash, for example, we're able to see exactly, you know, what the description and the purpose of the tool is.

So now we have an inventory of what models are being used, what agents are being used, who are using the agents, and then what tools are being used. So the tools could be whatever is packaged with Claude or maybe someone brought their own MCP servers. You can see exactly, you know, what the purpose of that MCP server is.

So I'm gonna go into a little demo now showing, like, well, what what exactly is going on when an agent invokes a tool? So I'm I'm just in Claude code right now. I'm gonna say what files are in my directory.

So what's happening here is the agent is sending a message up to the LLM in the cloud saying the user query is asking about files and their directory, and they also have this set of baked in tools such as Bash. So the LLM will process that request, and then it will send an action back to the agent saying, you know, use this bash command to run l s dot or dash l a, and then that will get executed.

So the other visibility we're able provide is not just, like, what tools the agent has available to them, but every time an agent invokes one of those tools, what exactly is that tool running on the desktop?

So in this example, we can see that Colton, me, kicked off this tool using the clawed code CLI, and here are the inputs to the tool, and here are the outputs for the tool.

So let's say you you're seeing some tool usage that you don't approve of or, like, an MCP server, that that you you don't really considered authorized by your organization.

You can write policy that says that you just want to block that tool. You wanna block users from using that tool.

So then when I go when the user goes into the terminal again hey. Can you look again at what files are in my directory?

We now have this policy written saying that the Bash tool is not allowed for that user on that device. And, like, what the user sees is basically, like, you know, this tool you're trying to invoke has been blocked by the system administrator.

Let's see here.

Alright. Let me just undeny that.

Yeah. So you can write more advanced policies, like, by the user, by the device.

Maybe you have also not a desktop agent, but you have agents spun up, deployed in your cloud cluster.

You can now provide visibility and policy on all of the actions and tools that your cloud containers are allowed to do. And, like, I would say a pretty common one is you don't like, there's this one tool called WebFetch that comes packaged with a lot of these agents, and that's probably something you don't want running in your your cloud to just go do random queries on the web. So you could just write a tool policy like that.

So we have all the you know, we're seeing all of this data. We have all of this visibility, and we can provide access control to it.

And we also have, like, the detailed, basically, the detailed logs for all of the AI traffic that is going on in your environment.

And that's that's pretty much it for the demo.

I didn't so what we've covered in the demo was we're able to provide identity for the human, the device, the agent that's running on the device, and the tools that this agent has access to, provide visibility into your whole AI stack, and then you can implement access controls in certain points in that AI stack. Like, what model should this user be allowed to use? What agents can they use, not use? Tools, especially like rogue MCP servers, you can just squash those remotely from this interface.

I think that's oh, did the demo.

So, yeah, it's the what we're we want people to roll out AI more quickly, but also securely.

So by providing identity layer and visibility and access control to this new AI stack that, whether you know it or not, is probably already deployed in your environment.

We've had a cup couple of customers who are already using us, and this is just, you know, some of their feedback.

And if you want to learn more, if you wanna get started, go visit us at beyond identity dot a I.

Well, Colton, Sarah, you guys, once again, twenty six minutes, almost twenty seven, knocking it out of the ballpark in terms of timing.

I will give you that. And, also, knocking it out of the ballpark in terms of actually managing the complexity of how AI is being used by empowering practitioners with the right tools. That goes a long way. Folks, go check out beyond identity dot a I, scan that QR code. It's not gonna run any scripts in the background of your device to accidentally steal all of your information. I promise we checked.

I can almost guarantee it, but don't take me to the bank with that one.

But but, Sarah, Colton, thank you so much for coming on the Hacker News webinars and kicking off this year with another amazing webinar and and more great work that Beyond Identity is doing to help security practitioners be better at what they're supposed to do in a time where speed matters and visibility and mitigation matters as well.

I'll just say this as we end it and end this webinar here.

As security practitioners, we are business enablers.

That's it.

We're not when I hear a security practitioner say, I shut it down, I cringe at that prideful moment because the business does not exist without generating revenue. And if it doesn't generate revenue, you don't have a job. And you're the first job going. Just wanna put that clear because they're gonna say, why aren't we doing that revenue?

They're blocking it. Who's blocking it? See you later. If you don't believe me, study the history of Equifax pre the twenty seventeen breach.

Just study it. Just go back and listen to insiders from Equifax who used to talk pre not the sister that was there during the breach, but the one before who locked everything down and said no to a lot of stuff and created all these backdoors.

The new system came in and overcompensated by being really, really loose, which led to Apache struts not getting patched in time, which led to, at the time, what was the most significant data breach in American history at that time. I mean, this was almost ten years ago. So, you know, by the way, a lot of water have gone under the bridge from Equifax to today. But understand that that's the same thing that applies now with AI.

We need to be business enablers. Tools like what we saw today help us do that. And when we present it effectively, we'll get the money to do it as well. So just wanna put that out there.

If you need more direction on how to do that, you can reach out to me. I'm available on LinkedIn. Reach out to Sarah and Colton. They'll be happy to help you as well figure that out.

But you've got you've gotta make the business case, and you'll get the money for it if it's supporting generating more revenue. What the business is doing is gonna generate more revenue. They'll spend the money to make sure they do it right every time.

Every time. Alright. Thank you all for tuning in. I hope that was helpful. I hope you enjoyed Sarah and Colton's presentation.

Great demo by Colton, by the way. I was glued to my screen watching it. So so really do appreciate it. Make sure to check out the hacker news dot com forward slash webinars for a lot more webinars coming this year.

I mean, we are going insane this year. You get to dictate what we talk about.

So go let us know at the hacker news dot com forward slash webinar. This webinar is available on demand also after the fact, so go check it out there. Thank you all for tuning in. Have a great New Year, and stay cyber safe.

Key Takeaways

Full Transcript

Happy New Year, boys and girls. Welcome back to the Hacker News webinar. I hope everyone had a great and amazing New Year. We're in the month of January where that means that everyone is starting fresh.

But you know what isn't fresh? AI. It's been around for a while. It's a buzzword.

It's a thing. Right? Sarah, welcome back to the hacker news webinar. Colton, welcome.

So, folks, we've got a packed webinar today. We've got a live demo that Colton is gonna execute flawlessly because, you know, it's live and nothing ever goes wrong when we do anything live here ever.

But in that event, thank you all for taking time out of your busy schedules to be here with us today. Our friends from Beyond Identity and Sarah, who's been a regular at this point, we should just have her cohost these webinars with us, is back to talk a little bit about securing AgenTik AI. The last one we had was Sarah, Jenk, and I. And if you missed it, it's in the December section.

We ended the year with Sarah. So she's back in the New Year to kick it off. The feedback was phenomenal from that webinar. So, we're gonna be talking about that.

Questions are welcome. They're gonna be answered after the webinar. Obviously, this webinar runs four different times, so there's no possible way. We have four different hours to do this webinar.

So we'll be answering all your questions via email after the webinar. Please ask away. Don't be afraid to do so. Additionally, your comments during the webinar entertain me very much, because I get to see those. So I love them. So put them in there. Let us know how you're feeling.

We'll get to all of that. Let me introduce Sarah Cicchetti. She is Sarah Cicchetti. Right?

We we gotta we gotta do it with the Italian. She's the director of product strategy at our friends over at Beyond Identity. And Colton, you're gonna kill me. How how do we say this again, man?

I'm sorry.

I should have written it down better.

Shanaki.

Shanaki. It's it's it's it's carrier. It's it's easier than it looks. I think whoever spelled your name, you should have a conversation with them.

I know it's probably, like like, seven hundred and twenty generations, but still, I'm kidding. I'm joking. Don't don't change it ever. So alright, folks.

We're gonna be talking about securing AI agents from MCP's tool used to shadow API key scroll key sprawl. So, Sarah Colton, I'll hand it off to y'all. Have a great webinar, folks. Awesome.

So I'm gonna tee up the problem here, and then Colton's gonna go over the solution.

So let's go ahead and go to the next slide.

So, basically, what we're looking at right now is a a situation amongst IT teams where productivity has increased dramatically even in the last five weeks. I don't know if anybody played with Opus four point five over the holidays, and especially with, like, the new, like, Ralph Wiggum looping tools that have come out. Like, it is incredible how much more you can get done in an afternoon than you used to be able to and how much higher quality. So amongst our customers, we are seeing ten times faster, coding with agents, three times more output.

And, obviously, you can run them twenty four seven. Right? So if you don't start a rough week of lip going before you go to bed, like, what are you doing? Why are you even a coder?

So we're seeing, at least forty percent, time saved among coders right now. So we're seeing this huge shift in how development teams are working day to day, where they are getting, feature requests on x and within hours implementing those feature requests because they're able to use these agents to get things done so much more quickly. And that's, like, done done done done with unit tests, with documentation, completely, end to end, features that are that are complete within hours. So, companies that are not adopting this are really falling behind those who are.

Right? It's it's getting very, very competitive right now to be a SaaS company. If you're not, responding to feature requests within hours, if you're if you have any existing bug reports, why haven't you just put them into cloud? Why haven't you just put them into Cloud Code and solved them?

Right? There's no excuse for having a bug in your software these days.

The catch comes in that the security teams are kind of getting steamrolled because these new tools have made people so productive, so amazingly, useful that, security teams really can't say no. Right? How can you say no to someone who says, I can ten x my productivity, and then you're supposed to sit there and say, like, actually, I'm really worried about security. Please don't do that. Right? So companies can't wait for security to catch up, so they're adopting anyway. And so we're gonna talk a little bit about the dangers that come, with this, and then Colton will talk to you about how we're gonna mitigate those.

So traditionally, among CSOs, what we're seeing right now is, like, they have two options. Option a is like, okay. We're gonna ban AI altogether. Like, it is so dangerous to our organization that that no one gets to use it.

And what we see is that developers use it anyway. Right? So they they'll go on their personal laptops and connect it to your corporate environment and and have Cloud Code do its thing, and then they will manually type in all the all the code that Cloud came up with, right, and do that shadow IT thing. It's even more dangerous because they're they're going through a loop of personal technology.

Or they're doing it in Slack.

Or they're doing it in Slack.

Yeah. That's that's the one where they're committing everything. Like, I'm telling you, as a CISO, I've seen the like, we don't ban AI.

I think anyone who ban I was shaking my head when he said that because I don't think anyone should be banning AI.

Really?

Ban AI.

It's it's kinda like the people who said the Internet was a fad, and people will go back to newspapers at some point. Right? Like, cut it. AI is here to stay, and it's definitely not going anywhere. You're likely gonna go somewhere if you don't learn how to securely adopt the business to AI at the speed the business needs as a practitioner. So just just putting that out there.

But what they'll do to kinda give you the workaround, they'll use Slack because they just will go and and and and and commit all the code through their Slack and then into your corporate environment. You never see it. You're not looking at it because you're not monitoring Slack to that level.

Yeah.

They'll just copy and paste it right into into there, and that's how they get it back and forth. Yeah.

Terrifying. Right? Okay. So as a CISO, you kind of have to say, okay. I'm going to allow AI.

But, what you have to understand is that if your developers are using Cloud Code, they're connecting it using model context protocol to your email, to your databases, to your ticketing system, to all of your SaaS apps. And there you have, like, traditional security tools. If you're using your CrowdStrike and your SIM and all of the things that you have invested in, they have zero visibility into what those connections look like and what's going on in that protocol exchange. It's not like a traditional API where you can watch that network traffic go back and forth.

You have no visibility into what those agents are doing and what code they are executing on a local machine and what information they are getting access to. And so, if you are just allowing AI with no tools, like, you are gonna have trouble sleeping at night because you are just opening up your environment to these huge risks.

And so, on the next slide, we're gonna talk a little bit about these are the top four risks that we have seen, and we're gonna go into each of these. So, road tool use where the agent executes commands, data exfiltration where it's sending sensitive data somewhere else, API key sprawl, where it is exposing sensitive credentials, and then malicious MCP servers where the the MCP server itself is actually malware.

We've seen all of these in the wild, this is really where secure traditional security fails. As I said, the tools that you've invested in, your DLP tools, your EDR tools, your SIM tools, they really don't catch these things. They're not prepared for these things. They're not built to to monitor this and to control this.

And, so it's really an entirely new world that we need new solutions to fix. So let's go over each of these, risks. So the first is rogue tool use. So by that, we mean that an a it executes commands that were not authorized by the user, and the user doesn't know what they're authorizing.

So in Claude, you have the ability to let it be quite independent on its own and and find and use tools, without your consent. And it is impossible for you to tell whether any given tool is legitimate or not. So we've seen CVEs that allow attackers to run malicious code and do remote code execution on a machine, and those that remote code execution got used by millions of developers. So this is not a theoretical attack.

This is something we have seen in the wild. We've seen for real where a human developer working day to day would never make this command.

An agent executes it because they don't understand the what they are authorizing in the moment. And so that kind of rogue tool use is really, really dangerous.

The second one we're looking at is data exfiltration. So traditional, data loss prevention systems, DLP systems can't distinguish between legitimate and malicious data transfers, when the agent is compromised. So if an agent's API calls, matched and the the API call matched expected parameters, the breaches like, the DLP system won't send you an alert. It won't, tell you that it's DLP because it looked like a valid API call.

And so agents are capable of sending sensitive and proprietary data to external unsecured services without authorization. Additionally, MCP has a capacity for servers and events to do something called elicitation. And what elicitation means is when you are connecting to an MCP server that's remote, it can ask information of the memory of your AI. So the AI where you research health things, where you research legal things, where you research CVEs that you might have in your environment, your AI memory knows all of that, and the MCP server can exfiltrate that information back to the MCP server if that MCP server is compromised or if it is run by an attacker from the beginning. That sort of data exfiltration can be very, very damaging. We saw an incident in twenty twenty four where there was an health care AI leak that cost fourteen million dollars in fines and three months of exposure. So these are things that are that are real risks.

The third one we're seeing is API key sprawl. And in some ways, this is a good thing. It means that people who are not traditionally developers are using, Claude code and using Vibe coding to make their own things, which is great except that they don't know how secrets work, and they don't know that API keys are supposed to be protected. And so they go and commit them into GitHub, and they go and release them as open source on the Internet.

And so you are seeing, marketing managers. You're seeing HR people who are just making their own tools. They think they're doing a great job and being more productive workers, which they are. That is true.

But they've never had to deal with API keys before. And so when they go into their SaaS tool and say, oh, I'm just gonna download this API key. I'm gonna give it to Claude. Claude could do my work for me.

This is great. They don't realize that that API key can then leak. Right? So we have seen an example in Samsung where, there was a code leak via ChatGPT where employee pasted confidential source code right into ChatGPT causing AI exposure, credential compromise, and then somebody has to go in and manually rotate those keys.

Right? And so we really don't wanna see, employees having direct access to those API keys. That's quite dangerous.

And then the last one, I think, is one that most people are sleeping on that they are not aware of in terms of risks to their environment, and that's malicious MCP servers. So when a agent connects to a malicious or compromised MCP server, it can inject harmful instructions or steal data.

So there is no publisher identity verification for an MCP server. You do not know whether any given MCP server is, valid or not, whether it is the official MCP server for that thing or whether it is not. And there's no way to tell if there's been a supply chain attack. Right? If some library that that MCP server used got compromised or if the code itself got compromised, if that GitHub repository got taken over by someone else.

Right? And there's no way to, isolate that MCP server if it gets compromised. And so, we're seeing a number of different, attacks in this. The the first one we wanted to talk about was called Postmark.

This was, disguised as a legitimate email tool, but it was in fact malware. It was secretly BCC ing every email to the attacker and had sixteen hundred downloads before anyone figured out what was going on. And the so the attacker got all of those emails with no one knowing. Right?

No one could tell that this MCP server was doing that in the background.

And then the second one we wanted to bring up was WhatsApp. And so this was a tool poisoning attack. So this was a completely valid MCP server that got compromised and got malware added to it that added hidden instructions that would exfiltrate the entire message history to the attacker. And so this sort of of MCP compromise is something that is entirely possible in your environment. There's no way for you to know what MCP servers your developers are using today and whether or not they have been compromised. And so this is really one that a lot of CSOs are sleeping on that they are not aware that this is even attack that can happen in their environment.

And, hopefully hopefully, now you know and you'll be aware of it. But, I think a lot of companies are gonna get compromised by this one before it becomes kind of mainstream knowledge that this is this is a serious vulnerability in their environment.

So with that, I will hand off to, mister Shnacki. See, I can do it, James.

Thank you. Thank you.

And, he's gonna tell you about our Beyond Identity solutions.

It's not fair. You work with Colton every day.

Well, I'm curious.

You get to work with Colton every day.

And then and then number two, like, WTF.

It's a It's a New Year's era. I thought you and I were starting new.

We know each other well enough that I can roast you a little bit.

You can. You're more than welcome to. Okay. Ultimate luck. Alright.

I'll, talk about, like, the three pillars of our solution, which are identify visibility and control.

So our our core beyond identity tech, we cryptographically tie the human identity to the device.

And in this new age of AI, we're extending that chain of trust to the agents that human users are invoking on their desktops or in the cloud, and we're providing identity for that so that you can trace Anytime an agent does something, you can trace that now back to the device that the agent was running on and the human that, invoked the agent.

So now that we're able to provide, identity to these new things like agents and models and MCP servers, we now have all of this visibility into what is actually, going on in your AI stack.

So, like, what agents are being invoked?

What tools do those agents have available? Like, is that agent connected to an MCP server or some local tools that are provided with Claude?

And, now that you have this visibility, you can implement deterministic access controls inside of your AI stack. So if you see some models that your developers are using, you could block that, or you see a suspicious looking MCP server. You can just write a policy saying, you know, this MCP server is not allowed.

And I'll kinda get into sort of, like, how the solution is deployed. So so it first starts off with, we run an authenticator on the end user device, and that is how we tie the human identity to the device identity. And now we're extending that to create identity for the agents that are running on your users' desktops.

So when a user starts interacting with a desktop agent like Claude Code or Codex or something, all of that AI traffic is now routed through what we're calling our AI proxy. So now we're able this is what gives us the visibility into what models are being used, what tools are being used, audit logs for everything, the ability to write policy on what tools are allowed, denied.

And, now let's, get into the demo demo time.

Okay. So this first screen is just like a dashboard showing all of the AI activity going on in your environment.

And there's some pretty basic things like what are the most used models, what are the most used agents, what are the most used tools. And remember, like, MCP server, it's it's just a tool. Just another name for a tool.

So you can see that, and you can see, which users are using those tools.

So I like to pull up this visual of one of our active users.

You know, we can we can break it down to see that this corporate identity, Jing, we see that she's been using these four different versions of Claude Code, and we see that Claude Code has been invoking these tools.

Now some of these tools like Bash, they they really can do anything. You we're kind of in this new territory where it's it's like, the perimeter here is sort of unbounded because you're relying on the LLM to tell the tool what to do.

So this is just a nice visual I like to show, showing, like, the chain from the user to the device to the agent and which tools they're using.

So as more users start to use the solution, we're we start to create this sort of catalog of all the tools that are that are being made to the users. And a lot of these tools already come packaged in, like, Claude code. So, like, Bash, for example, we're able to see exactly, you know, what the description and the purpose of the tool is.

So now we have an inventory of what models are being used, what agents are being used, who are using the agents, and then what tools are being used. So the tools could be whatever is packaged with Claude or maybe someone brought their own MCP servers. You can see exactly, you know, what the purpose of that MCP server is.

So I'm gonna go into a little demo now showing, like, well, what what exactly is going on when an agent invokes a tool? So I'm I'm just in Claude code right now. I'm gonna say what files are in my directory.

So what's happening here is the agent is sending a message up to the LLM in the cloud saying the user query is asking about files and their directory, and they also have this set of baked in tools such as Bash. So the LLM will process that request, and then it will send an action back to the agent saying, you know, use this bash command to run l s dot or dash l a, and then that will get executed.

So the other visibility we're able provide is not just, like, what tools the agent has available to them, but every time an agent invokes one of those tools, what exactly is that tool running on the desktop?

So in this example, we can see that Colton, me, kicked off this tool using the clawed code CLI, and here are the inputs to the tool, and here are the outputs for the tool.

So let's say you you're seeing some tool usage that you don't approve of or, like, an MCP server, that that you you don't really considered authorized by your organization.

You can write policy that says that you just want to block that tool. You wanna block users from using that tool.

So then when I go when the user goes into the terminal again hey. Can you look again at what files are in my directory?

We now have this policy written saying that the Bash tool is not allowed for that user on that device. And, like, what the user sees is basically, like, you know, this tool you're trying to invoke has been blocked by the system administrator.

Let's see here.

Alright. Let me just undeny that.

Yeah. So you can write more advanced policies, like, by the user, by the device.

Maybe you have also not a desktop agent, but you have agents spun up, deployed in your cloud cluster.

You can now provide visibility and policy on all of the actions and tools that your cloud containers are allowed to do. And, like, I would say a pretty common one is you don't like, there's this one tool called WebFetch that comes packaged with a lot of these agents, and that's probably something you don't want running in your your cloud to just go do random queries on the web. So you could just write a tool policy like that.

So we have all the you know, we're seeing all of this data. We have all of this visibility, and we can provide access control to it.

And we also have, like, the detailed, basically, the detailed logs for all of the AI traffic that is going on in your environment.

And that's that's pretty much it for the demo.

I didn't so what we've covered in the demo was we're able to provide identity for the human, the device, the agent that's running on the device, and the tools that this agent has access to, provide visibility into your whole AI stack, and then you can implement access controls in certain points in that AI stack. Like, what model should this user be allowed to use? What agents can they use, not use? Tools, especially like rogue MCP servers, you can just squash those remotely from this interface.

I think that's oh, did the demo.

So, yeah, it's the what we're we want people to roll out AI more quickly, but also securely.

So by providing identity layer and visibility and access control to this new AI stack that, whether you know it or not, is probably already deployed in your environment.

We've had a cup couple of customers who are already using us, and this is just, you know, some of their feedback.

And if you want to learn more, if you wanna get started, go visit us at beyond identity dot a I.

Well, Colton, Sarah, you guys, once again, twenty six minutes, almost twenty seven, knocking it out of the ballpark in terms of timing.

I will give you that. And, also, knocking it out of the ballpark in terms of actually managing the complexity of how AI is being used by empowering practitioners with the right tools. That goes a long way. Folks, go check out beyond identity dot a I, scan that QR code. It's not gonna run any scripts in the background of your device to accidentally steal all of your information. I promise we checked.

I can almost guarantee it, but don't take me to the bank with that one.

But but, Sarah, Colton, thank you so much for coming on the Hacker News webinars and kicking off this year with another amazing webinar and and more great work that Beyond Identity is doing to help security practitioners be better at what they're supposed to do in a time where speed matters and visibility and mitigation matters as well.

I'll just say this as we end it and end this webinar here.

As security practitioners, we are business enablers.

That's it.

We're not when I hear a security practitioner say, I shut it down, I cringe at that prideful moment because the business does not exist without generating revenue. And if it doesn't generate revenue, you don't have a job. And you're the first job going. Just wanna put that clear because they're gonna say, why aren't we doing that revenue?

They're blocking it. Who's blocking it? See you later. If you don't believe me, study the history of Equifax pre the twenty seventeen breach.

Just study it. Just go back and listen to insiders from Equifax who used to talk pre not the sister that was there during the breach, but the one before who locked everything down and said no to a lot of stuff and created all these backdoors.

The new system came in and overcompensated by being really, really loose, which led to Apache struts not getting patched in time, which led to, at the time, what was the most significant data breach in American history at that time. I mean, this was almost ten years ago. So, you know, by the way, a lot of water have gone under the bridge from Equifax to today. But understand that that's the same thing that applies now with AI.

We need to be business enablers. Tools like what we saw today help us do that. And when we present it effectively, we'll get the money to do it as well. So just wanna put that out there.

If you need more direction on how to do that, you can reach out to me. I'm available on LinkedIn. Reach out to Sarah and Colton. They'll be happy to help you as well figure that out.

But you've got you've gotta make the business case, and you'll get the money for it if it's supporting generating more revenue. What the business is doing is gonna generate more revenue. They'll spend the money to make sure they do it right every time.

Every time. Alright. Thank you all for tuning in. I hope that was helpful. I hope you enjoyed Sarah and Colton's presentation.

Great demo by Colton, by the way. I was glued to my screen watching it. So so really do appreciate it. Make sure to check out the hacker news dot com forward slash webinars for a lot more webinars coming this year.

I mean, we are going insane this year. You get to dictate what we talk about.

So go let us know at the hacker news dot com forward slash webinar. This webinar is available on demand also after the fact, so go check it out there. Thank you all for tuning in. Have a great New Year, and stay cyber safe.

.avif)

.avif)

.avif)

.avif)