Video

What's Wrong With Our Sign In Process? Hackers Don’t Break In: They Log In!

The Attacker Gave Claude Their API Key: Why AI Agents Need Hardware-Bound Identity

February 2, 2026

Beyond Identity Opens Early Access for the AI Security Suite

January 22, 2026

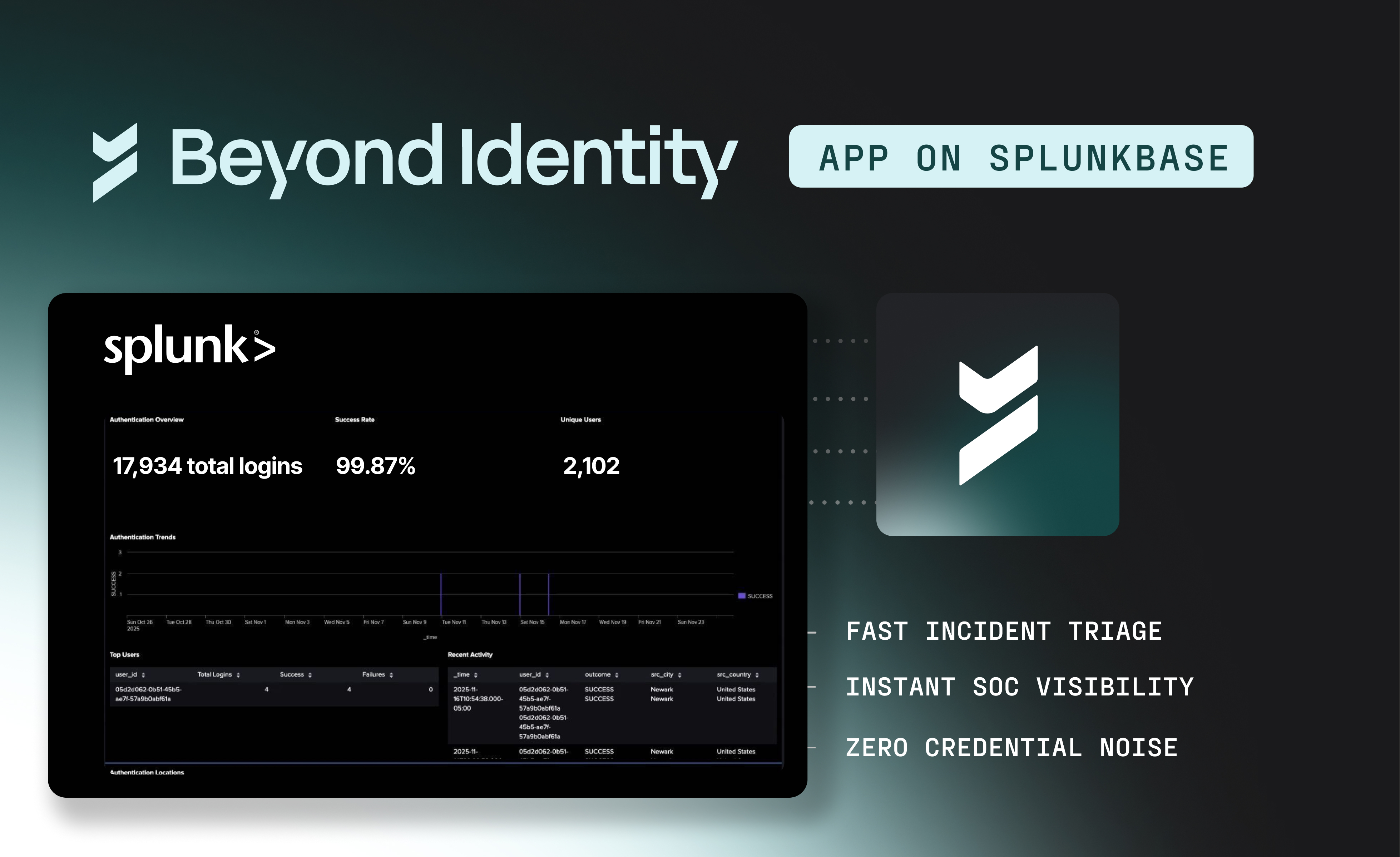

Unlock High-Fidelity Security with the New Beyond Identity App for Splunk

January 16, 2026

Beyond Identity Joins NVIDIA Inception Program to Advance Hardware-Enforced Security for the AI Era

January 5, 2026

Chips and SLSA: Why TPMs Matter for Code Commits

December 19, 2025

Why Is Code Provenance Non-Negotiable in the Age of AI?

December 17, 2025

How Beyond Identity & Nametag Stop Identity Fraud at Onboarding & Recovery

November 19, 2025

New: Self Remediation Features to Reduce Help Desk Tickets and Improve UX

November 13, 2025

Make Identity-Based Attacks Impossible

August 11, 2025

Meeting CJIS Compliance with WDL

May 27, 2025

PCI DSS Compliance with Beyond Identity

March 6, 2025

Online Job Board Safety: How and Why To Avoid a Scam

March 7, 2024

ChatGPT's Dark Side: Cyber Experts Warn AI Will Aid Cyberattacks in 2023

February 29, 2024

Improving User Access and Identity Management to Address Modern Enterprise Risk

February 20, 2024

Okta Cyber Trust Report

January 5, 2024

Securing Remote Work: Insights into Cyber Threats and Solutions

October 30, 2023

Networking Dinner with MightyID and Tevora

December 9, 2025

Alphinia CISO Mastermind Dinner

December 1, 2025

Myriad360 Client Appreciation Celebration

November 20, 2025

GuidePoint Security Movie Premiere of Wicked

November 19, 2025

How AI Is Accelerating Threats: The Inside Scoop on Emerging Phishing GPTs

November 18, 2025

GuidePoint Security 3rd Annual Houston Golf Outing

November 13, 2025

GuidePoint Security's Pinehurst Golf Outing

November 12, 2025

GuidePoint Security Public Sector Vendor Fair

November 5, 2025