Secure Your Private AI

TL;DR

Full Transcript

Hello, everybody. Welcome to another Beyond Identity webinar. We have some great content today, how to secure your private AI, a guide for enterprises building internal agents.

I'm Kasia. I'm a Product Marketing Manager at Beyond Identity, and we have two amazing guests with us today, Colton and Huy. So I'm gonna let them introduce themselves.

Huy, you're our special guest. Would you like to introduce yourself?

Yep. My name is Huy Ly, and I am the Head of Global IT Security and Infrastructure for Monolithic Power Systems (MPS).

Hi. I'm Colton. I'm a Product Manager here at Beyond Identity, and I'm focusing on the intersection of AI and identity and access management.

Awesome. So we're gonna start off with a little bit about MPS. We're so lucky to have Huy from MPS join us here today to talk about how he has built and secured his AI agents for MPS. So before we go into that, we'd love a little introduction to MPS, if you don't mind, Huy.

Yeah. MPS is a semiconductor company headquartered in Kirkland, Washington.

The company was started in 1997 by a gentleman named Michael Hsing, and he has been the CEO and cofounder. We are a Fortune 500 company that specializes in data center, AI, automotive, and a lot of consumer products.

Awesome. Yeah. And you guys have done some great things. I saw that you were on Fortune's 100 Fastest-Growing Companies list in the past couple of years, and you've also been a customer of Beyond Identity for the past two or three years.

So a little bit of an agenda of what we're gonna go over today, because we have a lot of great content and a lot of great demos too, so get ready. We're gonna start off with how to build AI agents from the ground up.

Thankfully, Huy is here to answer a lot of questions for us. We'll talk about risks with the rise of AI, how MPS solved AI agent security, the future of AI security with a demo from Colton, and then lastly, we'll wrap up with some key takeaways.

So let's get right into it. First up, we're gonna be doing a little bit of a Q&A session with Huy and then also some demos, starting off with some Q&A on how to build AI agents from the ground up.

It's actually really interesting because most AI adoption starts outside of the IT and security departments. Most of the time, it's product, engineering, or marketing. So what do you think made MPS different in this scenario?

Yeah. For MPS, because we are a semiconductor company, a lot of our products are built on intellectual property.

And for that reason, we do not want our engineers to communicate with ChatGPT, Perplexity, or any of the commercial foundation models and leak our secret sauce to the world. Right? So because of that, the first thing that we did was block all the AI access to the Internet.

But we want to make sure that we continue to innovate. So to solve that problem, we decided that we were going to build our own private AI for MPS.

Awesome. Got it. And that kind of answers my next question: why did MPS choose to build versus buy? It sounds like you didn't want to lose the secret sauce, so you wanted to keep a lot of information inside. Was there anything else to that decision?

Yeah. I think there are multiple reasons for that. Right? Number one, because we don't want to leak our intellectual property to the world. And there are actually options out there in the marketplace.

The closed models, like OpenAI, and then the open source models in the Ollama community.

And because my team has been in the DevOps space for a long time, the majority of my team came from infrastructure, large scale data centers, infrastructure as code. So because of that, what we did was basically spend the first two, three months exploring what the options are and, importantly, how much it costs, because everyone wants to jump on the AI bandwagon. But the price tag will be very expensive if you're looking at some of the big labels. Right? So if you go with the alpha AI factory or go with HPE for the same concept, it will be tens of millions of dollars. So it's a very difficult thing to try to sell to an executive. So because of that, we decided to build our own.

Yeah. And you've already gotten your AI to be used by a thousand plus employees, so great traction there.

In that case, would you like to share a little bit more about your AI agents? Can we see a little bit of how they work and how they were built?

Yeah. I can give you a quick demo on how we built our agent and, importantly, how we integrate with Beyond Identity.

Where I come from is security, so it's Zero Trust and validate, especially with AI. So with that, I'm going to give you a quick demo. On my screen, you will see the login screen that employees see when they access the system. So when I click on log in, here you go. As simple as that.

You are now in our chatbot environment, where you can do text to image. You can do content creation. You can do code augmentation.

All that can be done with this interface.

Wow. Incredible. Yeah. And you've built multiple AI agents for various scenarios, if I remember correctly.

Yep. So as you can see here, this lists out all the different models that we have in our infrastructure. And if you look on the left right here, these are all the prompts that I use to communicate with the agent.

Awesome. Very cool. Yeah. And I think I remember that this was something that has been, like, a passion of yours, something that you're doing outside of work to continuously build on this AI agent.

Yeah. It's actually my weekend fun job, putting all of this together. So a lot of the time, myself and also some of my team members work together on putting up and updating the infrastructure. And let's see. I want to share with you another metric that we use to monitor the usage.

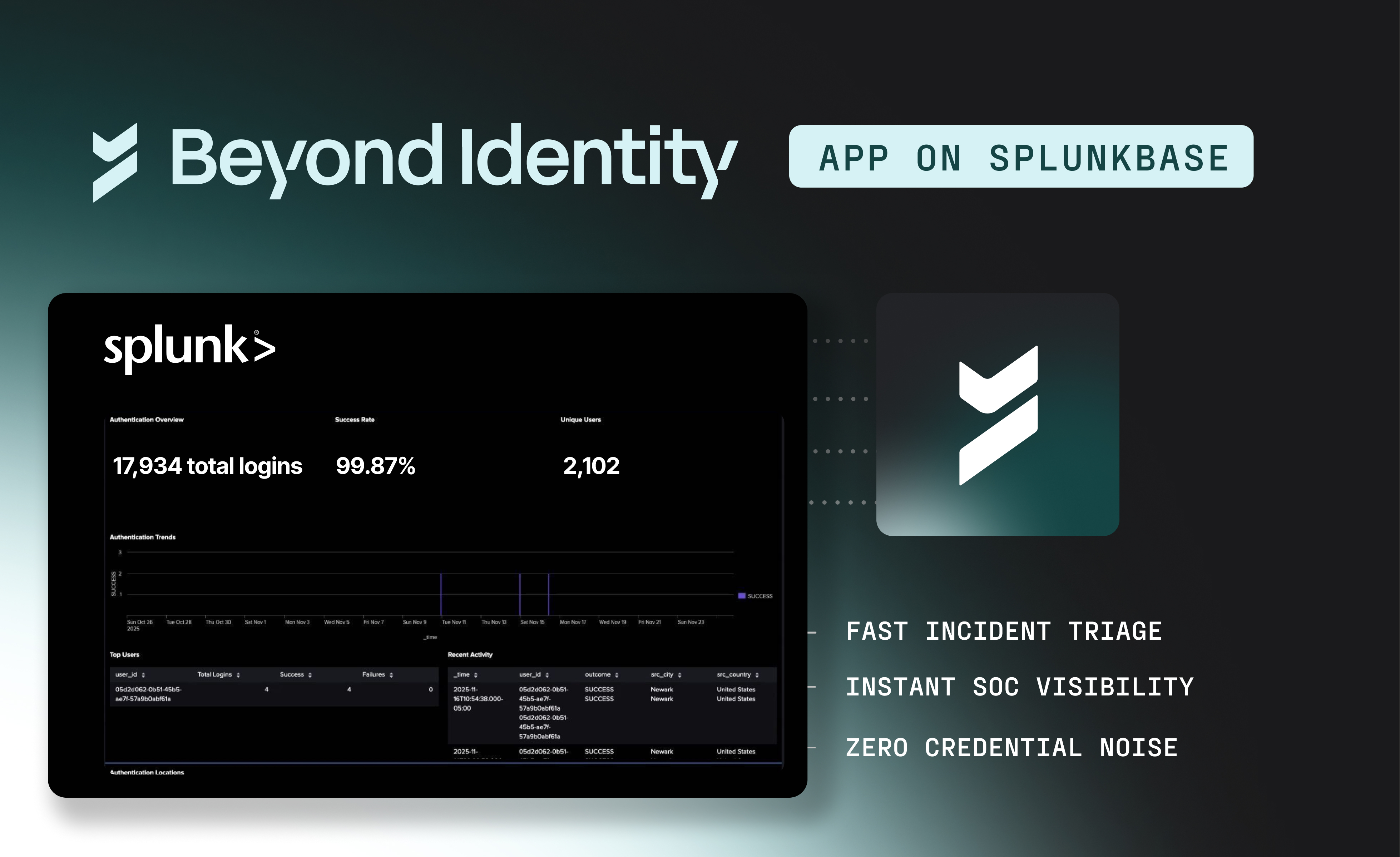

So as you can see on the screen, this basically gives you the number of tokens that we have been using. So when I click on today, that's telling me how many calls have been made on the platform and, importantly, how many tokens have been generated on a daily basis.

On a weekly basis, we generate almost three million tokens. Just imagine if you had to pay for that with OpenAI or any other third party company. That could be a significant cost for your IT department.

Right. Yeah. You've definitely reached economies of scale with this. And I love that you've added a top users view to see who's really engaging with the AIs on a weekly or daily basis.

Yep. You can see all that, and you can also track which models are being used, so that way you can make sure they are actually tuned to support all the concurrent users as well.
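Editor's note: the usage view described here (calls per day, tokens per day, top users, calls per model) can be sketched as a small aggregation over call records. This is a minimal illustration, not MPS's dashboard: the `UsageEvent` record, its field names, and the sample data are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class UsageEvent:
    """One model call, as a hypothetical gateway might log it."""
    day: date
    user: str
    model: str
    tokens: int


def summarize(events, day):
    """Return call count, token total, top users, and per-model calls for one day."""
    todays = [e for e in events if e.day == day]
    tokens_by_user = Counter()
    calls_by_model = Counter()
    for e in todays:
        tokens_by_user[e.user] += e.tokens
        calls_by_model[e.model] += 1
    return {
        "calls": len(todays),
        "tokens": sum(e.tokens for e in todays),
        "top_users": tokens_by_user.most_common(3),
        "calls_by_model": dict(calls_by_model),
    }


# Made-up sample data, only to exercise the function.
events = [
    UsageEvent(date(2025, 1, 6), "alice", "model-a", 1200),
    UsageEvent(date(2025, 1, 6), "bob", "model-b", 300),
    UsageEvent(date(2025, 1, 6), "alice", "model-a", 500),
    UsageEvent(date(2025, 1, 7), "bob", "model-a", 900),
]
report = summarize(events, date(2025, 1, 6))
print(report)
```

The per-model call counts are the kind of signal that could inform how much capacity each model needs for concurrent users.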

Very cool. Awesome. Colton, anything else you would want to see with this AI agent?

Yeah. So how are you sort of siloing the agents from human users?

So this one, think of it as a chatbot. And then for the agents that we build, from a security perspective, each one is required to interact with a different subset of data. So the way that you set it up is, let's say, for example, one of the AI agents that I'm building for the people team. We have hundreds of new jobs that we are hiring people for. So today, what the recruiters have to do is go to Workday and screen all the resumes to identify candidates to bring in for interviews.

So the AI agent that we built for that purpose only allows access to the recruiters.

And with that, it allows us to separate the users based on who can access what, when, and for what function.

And that is another layer of security with an agent versus the traditional chatbot, where everyone has access to all the different models.

Got it. Very cool. Thanks for showing this.

We're gonna talk a little bit also about what new risks these AI agents pose.

Colton, would you like to talk a little bit more about that?

Yes. I think the key point here is that AI systems like LLMs operate probabilistically, so they aren't guaranteed to make the same decision twice. Attackers know this, and they exploit the lack of deterministic controls and just continuously try different prompts until they get the outcome they want.

So you can't and you shouldn't rely on these LLMs to enforce security.

What you really need is binary, enforceable controls around them.

And then another thing I think we're seeing is all of these flashy AI tools that say they can do everything and anything.

I think it's important that you always understand what actions these AI agents are taking on behalf of your users.

So everyone thinks of identity at login, but in AI workflows, sensitive data can be accessed by agents, APIs, MCP servers, and chained prompts, and all of this happens well after the initial authentication.

So what you're seeing with these new access points, in these new tools, is that they often lack monitoring or policy enforcement.

So if you're not securing each stage in your AI workflows, for example, when the agent wants to go use a tool or the agent wants to read a document, you're really leaving gaps in your system.

Yeah. Colton, you wanna ask the next question?

Yeah. So what new risks are you concerned about with the rise of these new AI agents?

So you brought up all great points, and, actually, those are some of the concerns that we have. That's why this is really important as an AI agent builder.

Whenever you build an agent, you don't want the agent to do too many functions. That's one. Right? So you've got to have multiple agents, with each agent only responsible for a specific function. That way, you give the agent very little access, limited to what the agent needs to do for that job. And for now, that is the way that you protect security.
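Editor's note: the "one agent, one function" idea can be sketched as a deny-by-default scope check. This is only an illustration under stated assumptions: `AgentScope`, the `recruiting` group, and the `read_resumes` tool name are hypothetical, not MPS's implementation or a Beyond Identity API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentScope:
    """What a single-purpose agent may do, and who may invoke it."""
    name: str
    allowed_groups: frozenset
    allowed_tools: frozenset


# Hypothetical agent: screens resumes for the people team and nothing else.
RESUME_SCREENER = AgentScope(
    name="resume-screener",
    allowed_groups=frozenset({"recruiting"}),
    allowed_tools=frozenset({"read_resumes"}),
)


def authorize(scope, user_groups, tool):
    """Deny by default: the caller must be in an allowed group and the tool in scope."""
    if not scope.allowed_groups & set(user_groups):
        return False
    return tool in scope.allowed_tools


print(authorize(RESUME_SCREENER, ["recruiting"], "read_resumes"))   # recruiter, in-scope tool
print(authorize(RESUME_SCREENER, ["engineering"], "read_resumes"))  # wrong group
print(authorize(RESUME_SCREENER, ["recruiting"], "send_offer"))     # tool outside the agent's job
```

Because the check is a plain set lookup rather than a model decision, repeated prompting cannot widen what the agent is allowed to do.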

In the future, I believe there are a lot of companies out there looking at how you can monitor and control that through policy. But as of right now, this is still a very early stage of development, so there are not a whole lot of tools available yet.

Got it. And this is a perfect segue into how companies and MPS have tackled AI agent security. So, a couple of questions there, Huy. In terms of who's accessing your agents, is it just employees, or also your partners and contractors? Are there unmanaged devices like mobile phones that are accessing your AI agents?

So there are two things that I basically put into my AI agents. One is a service account, for when I need to access a certain system, with access limited to a specific dataset.

And then secondly, what we have is life cycle management for the agent.

So our team basically has CI/CD that we're building into the agent, and we continuously monitor the libraries over their lifetime, because the majority of the code that we are using in the back end is running on Python. So we constantly monitor that. We're scanning the libraries to make sure that there are no vulnerabilities within them. So those are some of the controls, somewhere between manual and semi-automatic, that we apply in how we're building our agents.

And for the end users accessing the agent, we follow the same protocol that I shared earlier by leveraging Beyond Identity. So we control at the group level. When they use the agent, they have to go through the identity provider to access the agent.

Got it. And is it true that your employees could even access your AI agents through, like, a mobile phone, or is it strictly corporate devices?

Everything is strictly on corporate devices because, again, we are an intellectual property company. So one of our policies is that employees only conduct MPS business on MPS devices.

Got it. Got it. That makes sense.

And so MPS chose a couple of years ago to be a customer of Beyond Identity, but why secure your AI agents with Beyond Identity as well?

Yeah. So we chose Beyond Identity because of the pain point of passwords, with phishing constantly targeting our user accounts. My VIPs constantly got hit with phishing attacks. And even though we used MFA and all of that from other partners, when your executive is trying to approve an offer letter or sign a contract and is not able to get into the system, that's considered a failure. So because of that, I reached out. I found Beyond Identity, and we have been partners, working very closely on leveraging your product as the identity provider.

I have had several conversations with the team and constantly work closely with them, and I think that as we continue to grow our mass of agents, an agent is really a digital employee per se. So I think Beyond would be the best candidate to help me secure that.

That's awesome. That's great to hear on our side.

And you already showed us a snippet of how it looked beforehand, basically logging out and logging back in. So maybe we don't have to show that again. But, yeah, it was painless, passwordless, and it eliminates phishing risks.

I always tell my team whenever you want to build something, keep it simple, stupid.

Exactly. Exactly. So, with that, we'd love to talk a little bit about the benefits of Beyond Identity. We saw Huy demo this earlier in this webinar: delegating authentication to Beyond Identity, doing a finger swipe or an unphishable PIN as a fallback, and then doing device posture checks, which the user doesn't even see. It's all happening in the background before getting access to his AI agent.

And so, like we mentioned, his use case was to eliminate phishing and eliminate passwords. That's exactly what we do. And even more than that, we make unauthorized access impossible, replacing all phishable credentials, like push notifications and OTPs, with a device-bound passkey to prove that it's the right device, and then also proving it's the right user with that biometric check or PIN.

And we also provide device security assurance for all users, although Huy is using the policy just to make sure that all devices are corporate devices.

Beyond Identity also works on unmanaged devices, making sure they're not rooted or jailbroken, and it also works on partner or contractor devices.

You can also leverage your entire security stack, so think of your EDR, MDM, ZTNA, and the risk signals from them. So for instance, if CrowdStrike flags, hey, I no longer have full disk access to this device, then Beyond Identity will remove access from that device until it is remediated, blocking on any device risk posture change in real time. Be confident that all your users are secure. So instead of having to wait eight hours for another reauthentication, which just adds more work for the user, Beyond Identity is continuously checking the device posture in the background for any changes.

And here's just a simple diagram to see how Beyond Identity works and how it fits into the actual flow. On the left hand side, we have users and devices, and these two entities are ultimately just trying to get to work. They're trying to get to the applications that help them be productive, whether that's ChatGPT, Claude, or the AI agents that you're building for your enterprise.

But the process really starts with a biometric check and the device risk posture signals.

Those happen continuously, and they're enriched by your EDR, MDM, and ZTNA. So if any of the posture changes here, it flags it to Beyond Identity, which can revoke access at any time to make sure that only the right users and only the right devices are ultimately gaining access to your most critical resources. And this is ultimately how we make unauthorized access impossible.

So now we want to talk a little bit about the future of AI security. We've talked about, you know, what Beyond Identity can do today, how it secures AI agents.

But there's more that we're building towards. And so Colton has a demo for us of risk-based access controls in your AI agents, and also continuous authentication in your AI agents as well as audit controls. So I'm gonna hand over the screen to him.

Thanks, Kasia. So we were sitting around one day just brainstorming about AI agents and chatbots. And given this new era of probabilistic systems, how do you deploy deterministic access control to something like a chatbot that claims it can do anything and everything?

So we ended up building this sort of demo application using our APIs and SDKs to demonstrate deterministic access controls throughout a chatbot workflow.

So I'm gonna go into it a little bit.

So the first thing I'm gonna do is log in just like any other chatbot, and we're using Beyond Identity to log in. So we do all of the device verification in the background as the user logs in. We make sure the device is managed, the OS version is up to date, and disk encryption is on.

And now the user is logged in. So what we built out here is sort of a RAG system that would allow employees to upload documents and then chat with those documents.

But it's important to note that not all employees should have equal access to all documents.

So using Beyond Identity's RBAC system and SDKs, we sort of built this little access control around accessing documents. So, for example, engineering should not be allowed to chat with finance documents.

So I'm gonna start a new chat here, and you'll kinda see this up at the top.

Before any transaction is made in this chatbot in the system, we're verifying the security status of the device that is being used.

So I'll just ask it a question.

Alright.

So it's able to access basically any of these LLMs that you grant to the employees in this group.

So I'm gonna ask it about a document that the marketing team is aware of.

What are the Q3 marketing comments?

So it's gonna go into the RAG system and fetch documents related to the Q3 plans for marketing.

And you can see that it pulled this document because it was a match, and this document was accessible by someone on the marketing team.

Now if I log out and, let's say, we go to engineering at BI, we'll start a new chat and ask about the marketing plans.

Now you'll notice that the documents that were accessible by the marketing team are not accessible by engineering. So this is just an example of using deterministic access control instead of using an LLM to enforce security.
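Editor's note: the behavior shown in the demo, where the same question returns a document for marketing and nothing for engineering, can be sketched by filtering on group membership before any retrieval or model call happens. This is a toy illustration, not the demo's code: the `Document` type, the keyword-overlap scoring, and the sample corpus are assumptions standing in for a real vector search.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    text: str
    allowed_groups: frozenset


def tokens(text):
    """Lowercased word tokens; a stand-in for real embedding search."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query, user_groups, corpus):
    """Apply the access filter first, then rank only what the user may see."""
    groups = set(user_groups)
    visible = [d for d in corpus if d.allowed_groups & groups]
    query_terms = tokens(query)
    scored = [(len(query_terms & tokens(d.text)), d) for d in visible]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [d for score, d in ranked if score > 0]


# Made-up corpus for illustration.
corpus = [
    Document("Q3 marketing plan", "Q3 marketing plans focus on webinars",
             frozenset({"marketing"})),
    Document("Build guide", "Engineering build and release guide",
             frozenset({"engineering"})),
]

question = "What are the Q3 marketing plans?"
print([d.title for d in retrieve(question, ["marketing"], corpus)])
print([d.title for d in retrieve(question, ["engineering"], corpus)])
```

The model never sees a document the caller is not entitled to, so no prompt can talk it into revealing one.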

So this is just typical chatbot workflow. You're asking questions. You're asking to see and reference documents.

So what happens if the security posture of the device you're on deteriorates? Let me just temporarily disable my firewall and try to ask it another question.

So according to the Beyond Identity policy we set, you're only allowed to make this transaction, that is, chat with this bot, if your firewall is enabled.
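Editor's note: checking device posture on every transaction, rather than only at login, can be sketched as a guard that re-reads posture before each chat call. This is an assumption-laden sketch: `DevicePosture`, `PostureError`, `guarded_chat`, and the `get_posture` callback are hypothetical names, and the model call is replaced by a placeholder string.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DevicePosture:
    firewall_enabled: bool
    disk_encrypted: bool
    os_up_to_date: bool


class PostureError(PermissionError):
    """Raised when the device fails a check the policy requires."""


def failed_checks(posture, required):
    """Names of required checks that the device currently fails."""
    return [name for name in required if not getattr(posture, name)]


def guarded_chat(message, get_posture, required=("firewall_enabled",)):
    """Re-evaluate posture on every call, then hand off to the model."""
    posture = get_posture()  # fetched fresh each time, never cached from login
    failures = failed_checks(posture, required)
    if failures:
        raise PostureError("blocked, failed checks: " + ", ".join(failures))
    return f"(model reply to: {message})"  # placeholder for the real model call


healthy = DevicePosture(firewall_enabled=True, disk_encrypted=True, os_up_to_date=True)
firewall_off = DevicePosture(firewall_enabled=False, disk_encrypted=True, os_up_to_date=True)

print(guarded_chat("hello", lambda: healthy))
try:
    guarded_chat("hello", lambda: firewall_off)
except PostureError as exc:
    print(exc)
```

Once the firewall is re-enabled, the next call passes again without a new login, which mirrors what the demo shows.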

Alright. So I've reenabled the firewall.

Now I'm going to demo the auditing capabilities that we offer.

So, back to what we were talking about earlier, every action that these AI agents take should be logged and traceable somewhere. They're either gonna be calling a tool like an MCP server, or they're gonna be accessing documents, or maybe even calling another agent.

And it's important that all of these transactions are logged in detail, whether you're creating a message or accessing a document, and that you can see the security posture of the device for each of these transactions.
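Editor's note: a per-transaction audit trail of the kind described here can be sketched as one structured record per action, including the device posture at that moment. The field names, action names, and the in-memory `AuditLog` are hypothetical; a real deployment would write to durable, append-only storage.

```python
import json
from datetime import datetime, timezone


def audit_record(user, action, resource, posture, allowed):
    """Build one audit entry; the field names are illustrative only."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,          # e.g. "message.create", "document.read", "tool.call"
        "resource": resource,
        "device_posture": posture,  # posture snapshot taken for this transaction
        "allowed": allowed,
    }


class AuditLog:
    """In-memory JSON-lines log, standing in for durable storage."""

    def __init__(self):
        self._lines = []

    def write(self, record):
        self._lines.append(json.dumps(record, sort_keys=True))

    def records(self):
        return [json.loads(line) for line in self._lines]


log = AuditLog()
log.write(audit_record("alice", "document.read", "q3-marketing-plan",
                       {"firewall_enabled": True}, allowed=True))
log.write(audit_record("alice", "message.create", "chat-1",
                       {"firewall_enabled": False}, allowed=False))
for entry in log.records():
    print(entry["action"], entry["allowed"], entry["device_posture"])
```

Recording denied transactions alongside allowed ones keeps a trace of what was attempted while the device was out of policy.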

Okay. Now we're gonna show a little bit about how to manage policy in this system.

So what this allows you to do is basically write a policy for these specific groups of users saying, you know, what is the minimum security score needed? What are some of the device requirements that they need to do some of these particular actions?

You can also specify what models they can access and what exactly they can do to the RAG system, such as reading or writing documents to it.
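Editor's note: a per-group policy with a minimum security score, device requirements, allowed models, and RAG permissions can be sketched as data plus one evaluation function. Everything here is hypothetical: the `GroupPolicy` fields, the group and model names, and the score thresholds are illustrative and do not reflect Beyond Identity's actual policy schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroupPolicy:
    min_security_score: int
    required_device_checks: frozenset
    allowed_models: frozenset
    rag_permissions: frozenset  # subset of {"read", "write"}


# Hypothetical policies keyed by group name.
POLICIES = {
    "marketing": GroupPolicy(
        min_security_score=70,
        required_device_checks=frozenset({"firewall_enabled", "disk_encrypted"}),
        allowed_models=frozenset({"model-a"}),
        rag_permissions=frozenset({"read", "write"}),
    ),
    "engineering": GroupPolicy(
        min_security_score=80,
        required_device_checks=frozenset({"firewall_enabled"}),
        allowed_models=frozenset({"model-a", "model-b"}),
        rag_permissions=frozenset({"read"}),
    ),
}


def evaluate(group, security_score, passed_checks, model, rag_action):
    """Allow only if every condition in the group's policy holds."""
    policy = POLICIES.get(group)
    if policy is None:
        return False  # unknown groups are denied by default
    return (
        security_score >= policy.min_security_score
        and policy.required_device_checks <= set(passed_checks)
        and model in policy.allowed_models
        and rag_action in policy.rag_permissions
    )


print(evaluate("engineering", 85, {"firewall_enabled"}, "model-b", "read"))
print(evaluate("engineering", 85, {"firewall_enabled"}, "model-b", "write"))
```

Each condition is a plain comparison, so the same inputs always produce the same decision.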

Okay.

And that's it for the demo.

Awesome.

Well, it looks like you can enforce some strong policies as well as get great visibility into what's actually happening when users are interacting with it. So best of both worlds.

So lastly, just to wrap everything up, some key takeaways. Huy, I'd love some insight from you. If someone is thinking of building or buying an AI agent, or is currently in the process and just has a lot of question marks about how to secure it or how to start the process, what are some recommendations you have, now that you have gone through the process yourself?

My first recommendation is to know exactly what you want to get out of the agent and give the agent the minimum access that you can. And then I love the idea that you guys have started thinking about access for the agent. You start with the chatbot, but I hope that you guys are also going to provide identity to the agent through your SDK or API.

Sounds good. Awesome. Colton, maybe that can be on your road map.

Definitely is. AI is moving quickly, and we're going along with it.

Awesome. Well, that wraps up a really great webinar. Thank you to Huy for being our special guest, and to both of you guys for showing some great demos.

If anything was interesting to you, from how Beyond Identity can secure your AI agents or your LLMs today to Colton's demo of the future of AI security and what we're building towards, reach out to us. You can get a demo at beyondidentity.com/demo. You'll talk to us and hear from us. We're interested to hear your thoughts as well. Thanks, everybody.

Thanks. Thank you.

TL;DR

Full Transcript

Hello, everybody. Welcome to another Beyond Identity webinar. We have some great content today, how to secure your private AI, a guide for enterprises building internal agents.

I'm Kasia. I'm a Product Marketing Manager at Beyond Identity, and we have two, amazing guests with us today, Colton and Huy. So I'm gonna let them introduce themselves.

Huy, you're our special guest. Would you like to introduce yourself?

Yep. My name is Huy Ly, and I am the Head of Global IT Security and infrastructure for Monolithic Power Systems (MPS).

Hi. I'm Colton. I'm a Product Manager here at Beyond Identity, and I'm focusing on the intersection of AI and identity and access management.

Awesome. So we're gonna just start off a little bit about MPS. We're so lucky to have Huy from MPS join us here today to talk about how he has built and secured his AI agents for MPS. So we before we go into that, would love a little introduction on MPS, you don't mind, Huy.

Yeah. MPS is a semiconductor company located headquarter in Kirkland, Washington.

We the company start in nineteen ninety seven by a gentleman named Michael Hsing, and he has been a CEO and cofounder. We are a Fortune 500 company that is specialized in data center, AI, automobile, and a lot of consumer product.

Awesome. Yeah. And you guys have done some great things. I saw that you were on Fortune's one hundred fastest growing companies in the past couple of years, and you've also been a customer of Beyond Identity for the past two or three years.

So a little bit of an agenda of what we're gonna go over today because then we have a lot of great content, and a lot of great demos too, so get ready. We're gonna start off with, how to build AI agents from the ground up.

Thankfully, Huy is here to answer a lot of questions for us. We'll talk about, risks with the rise of AI, how MPS solved AI agent security, also the future of AI security with the demo from Colton, and then lastly, wrapping up with some key takeaways.

So let's get right into it. So first up, you know, we're gonna be doing a little bit of, like, of a q and a session with Huy and then also some demos. So starting off with some q and a on, like, how to build AI agents from the ground up.

It's actually really interesting because most AI adoption starts outside of the IT and security departments. Most of the time, it's product, engineering, or marketing. So what do you think made MPS different in this scenario?

Yeah. For MPS, because we are a semiconductor company, so a lot of our product are built based on intellectual property.

And because of that reason, we do not want our engineer to communicate with JCPT perplexity or any of the commercial foundation and leaking out our secret sauce to the world. Right? So because of that, what the footing that we did what we blocked all the AI asset to the Internet.

But we cut but we're but we want to make sure that we continue innovate. So to solve that problem, we decide that we are going to build our own private AI for MPS.

Awesome. Got it. And that kind of answers my next question. Like, why did MPS choose to build versus buy? You know, sounds like, you know, you didn't want to lose the secret sauce, so contain a lot of information inside. Was there anything else to the that decision?

Yeah. I I think there's there's a multiple reason for that. Right? So numb number one, because we don't want to lead our intellectual property to the world. So and there, it's actually auctioned out there in the marketplace.

The Clo, you know, the Clo model, like OpenAI, and then the open source model in the OLAMA community.

And because my team have been, you know, in the DevOps space for a long time, we you know, majority of my team came with infrastructure, large scale data center, infrastructure as code. So because of that, what we what we did, what we basically spending the last the the first two, three months to explore what the option and, importantly, how much it cost because everyone want to jump into the AI bandwagon. But the price hack, it will be very expensive if you're looking at some of the big label. Right? So if you go with the alpha AI factory or go with HPE for for the same concept, it will be ten of million dollar. So it's it's a very difficult thing to try to sell to a executive. So because of that, we decided to build our own.

Yeah. And you've already gotten your AI to be used by a thousand plus employees, so great traction there.

In that case, like, would you, like to share a little bit more about your, your AII agents? Like, can we see a little bit of how they work and how they were built?

Yeah. I can give you a quick demo on how we got, how we build our agent, importantly, how we integrate with Beyond Identity.

Where I came from is security. So there is Zero Trust and validate, especially with AI. So with that, I'm going to give you a quick demo. On my screen, you will see the login screen that the employee when they actually accessing the system. So when I click on log in, here you go. As simple as that.

You are now in our basically, you're in our chat box environment where you can do text to image. You can do content creation. You can do co augmentation.

All that can be done with this interface.

Wow. Incredible. Yeah. And you've built, multiple AI agents for various scenarios, if I remember correctly.

Yep. So as you can see here, did it a lit up all the different model that that we have in our infrastructure. And, if you look on the left right here, basically, the this is all the prompt that I use to communicate with the agent.

Awesome. Very cool. Yeah. And, I think I remember that this, this was something that has been, like, a passion of yours, things that you're doing outside of work to continuously building on this AI agent.

Yeah. It it actually it my weekend fun job that, that I'm working putting audit together. So a lot of time myself and also some of my team member working together in putting up update infrastructure. And let's see. I want to share with you another metric that we use to basically monitoring the usage.

So as you can see on the screen, did it basically give you a number of the number of token that we have been using on this? So when I click on today, that's telling me how many call have been made on the platform and, importantly, how many token that have been generate on the daily basic.

On the weekly basic, you know, we generate almost three million token. Just imagine if you have to pay it to, like, OpenAI or or any other third party company, that could be a a significant cost for for your IT department.

Right. Yeah. You've definitely reached economies of scale with this. And I love that you've added a top user to see who's really engaging with the AIs, you know, on a weekly or daily basis.

Yep. You can see all that and what with also you can also track to see what model it being used so that way you can to make sure that it actually tune in to support all the the concurrent user as well.

Very cool. Awesome. Colton, any other you know, anything else you would want to see with this AI agent?

Yeah. How so how are you sort of siloing the agents from human users?

So so did it think of it as a chatbot. And then the agent that we build, what we want to do it because from security perspective, it require to interact with different subset of data. So the way that you set it up is let's say, for example, one of the AI agent that I'm billing for the people team, we have hundred of new job that we are hiring people. So today, the the recruiter, what they have to do is they have to go to Workday, and they have to screening all the resume to identify a candidate for to bring in for interview.

So the AI agent that we built for the purpose, it only allow access to the recruiter.

And then we and with that, we will allow us to separate the the user base on who can asset, what and when, and for what function.

And that is another layer of security from an agent versus versus the the the traditional chatbot where everyone have access to all the different model.

Got it. Very cool. Thanks for showing this.

We're gonna talk a little bit, also about, you know, what new risks these AI agents pose.

Colton, would you like to talk a little bit more about that?

Yes. I I think the key point here is that AI systems like LLMs operate, probabilistically. So they don't make the same decision twice, And attackers know this, and they exploit, basically, the lack of deterministic controls and just, you know, continuously try different prompts until they get the outcome they want.

So you can't and you shouldn't rely on these LLM models to enforce security.

What you really need is this sort of binary enforceable controls around it.

And then another thing I think we're seeing is you see all of these, like, flashy AI tools that say they can do everything and anything.

I think it's important that you always understand what actions these AI agents are taking on behalf of your users.

So, like, everyone thinks of identity at login, but in AI workflows, sensitive data can be accessed by agents, APIs, MCP servers, chain prompts, and all of this happens, you know, well after the initial authentication.

So these new access points, what you're seeing in, like, these new tools is they often lack monitoring or policy enforcement.

So if you're not securing each stage in your AI workflows, for example, like, the agent wants to go use a tool or the agent wants to read a document, you're really leaving gaps in your system.

Yeah. Colton, you wanna ask the next question?

Yeah. So what new risks are you concerned about with the rise of these new AI agents?

So you brought up all the great points, and, actually, those are some of the concerns that we have. That's why this is really important as an AI agent builder.

Whenever you build an agent, you don't want the agent to do too many functions. That's one. Right? You've got to have multiple agents, with each agent responsible only for a specific function. That way, you give the agent very little access, only what it needs to do that job. And for now, that is the way that you protect security.
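One way to picture "one agent, one function" is a per-agent tool allowlist that is checked in plain code before any tool runs. This is a sketch under assumptions: `AgentScope`, `ToolNotAllowed`, `call_tool`, and the tool names are hypothetical and do not come from the webinar.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class AgentScope:
    """Hypothetical description of what a single-purpose agent may do."""
    name: str
    allowed_tools: frozenset[str]


class ToolNotAllowed(Exception):
    """Raised when an agent requests a tool outside its scope."""


def call_tool(scope: AgentScope, tool: str,
              registry: dict[str, Callable[..., Any]], *args: Any) -> Any:
    # The check is ordinary code, so it behaves the same way on every call,
    # regardless of what the model was prompted to ask for.
    if tool not in scope.allowed_tools:
        raise ToolNotAllowed(f"{scope.name} may not call {tool}")
    return registry[tool](*args)
```

A resume-screening agent scoped to a single read-only tool can then never trigger, say, an offer-letter tool, even if both are registered in the same process.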

In the future, I believe there are a lot of companies out there looking at how you can monitor and control that through policy. But as of right now, this is still a very early stage of development, so there are not a whole lot of tools available yet.

Got it. And this is a perfect segue into how companies, and MPS, have tackled AI agent security. So, a couple of questions there, Huy. In terms of who's accessing your agents, is it just employees, or also your partners and contractors? Are there unmanaged devices, like mobile phones, that are accessing your AI agents?

So there are two things that I basically put into my AI agents. One is a service account, if the agent needs to access a certain system, with access limited to a very small dataset.

And then secondly, what we have is life cycle management for the agent.

So our team basically has CI/CD that we're building into the agent, and we continuously monitor the libraries over their lifetime, because the majority of the code that we are using in the back end is running on Python. So we constantly monitor that. We're scanning the libraries to make sure that there are no vulnerabilities within them. So those are some of the controls, somewhat manual, somewhat semi-automatic, in how we're building our agents.

And for the end users accessing the agent, we follow the same protocol that I shared with you earlier by leveraging Beyond Identity. So we control at the group level. So when they're using the agent, they have to go through the identity provider to access the agent.

Got it. And is it true that your employees could even access your AI agents through, like, a mobile phone, or is it just restricted to corporate devices?

Everything is restricted to corporate devices because, again, we are an intellectual property company. So one of our policies is that employees only conduct MPS business on MPS devices.

Got it. Got it. That makes sense.

And so, you know, MPS chose a couple of years ago to be a customer of Beyond Identity, but why secure your AI agents with Beyond Identity now as well?

Yeah. So we chose Beyond Identity because of the pain point of passwords: our user accounts were constantly being phished. And my VIPs constantly got hit with phishing attacks. And even though we used MFA and all that from other partners, when your executive is trying to approve an offer letter or sign a contract and is not able to get into the system, that's considered a failure. So because of that, I reached out. I found Beyond Identity, and we have been partners working very closely in leveraging your product as the identity.

I've had several conversations with the team and constantly work closely with them, and I think that at some point, as we continue to grow our mass of agents, an agent is really a digital employee per se. So I think Beyond would be the best candidate to help me secure that.

That's awesome. That's great to hear on our side.

And you already showed us a snippet of how it looked beforehand, basically logging out and logging back in. So maybe we don't have to show that again. But, yeah, it was painless, passwordless, and it eliminates phishing risks.

I always tell my team whenever you want to build something, keep it simple, stupid.

Exactly. Exactly. So, with that, I would love to talk a little bit about the benefits of Beyond Identity. We saw Huy demo this earlier in this webinar: delegating authentication to Beyond Identity, doing a finger swipe or an unphishable PIN as a fallback, and then doing device posture checks, which the user doesn't even see because it's all happening in the background before getting access to his AI agent.

And so, like we mentioned, his use case was to eliminate phishing and eliminate passwords. That's exactly what we do. And even more than that, we make unauthorized access impossible. So we replace all phishable credentials, like push notifications and OTPs, with a device-bound passkey to prove ownership of the device, that it's the right device, and then also prove it's the right user with that biometric check or PIN.

And we also provide device security assurance for all users, although Huy is using the policy just to make sure that all devices are corporate devices.

Beyond Identity also works on unmanaged devices, making sure they're not rooted or jailbroken, and it also works on partner or contractor devices.

You can also leverage your entire security stack, so think of your EDR, MDM, ZTNA, and the risk signals from them. For instance, if CrowdStrike flags, hey, I no longer have full disk access to this device, then Beyond Identity will remove access from that device until it is remediated. So it's blocking on any type of device risk posture change in real time, so you can be confident that all your users are secure. Instead of having to wait eight hours for another reauthentication, which just adds more work for the user, Beyond Identity is continuously checking the device posture in the background and whether anything is changing.

And here's just a simple diagram to see how Beyond Identity works and how it fits into the actual flow. On the left-hand side, we have users and devices, and these two entities are ultimately just trying to get to work. They're trying to get to the applications that help them be productive, whether that's ChatGPT, Claude, or the AI agents that you're building for your enterprise.

But the process really starts with a biometric check and the device risk posture signals.

Those happen continuously, and then they're enriched by your EDR, MDM, and ZTNA. So if any of the posture changes here, it flags it to Beyond Identity, which can revoke access at any time to make sure that only the right users and only the right devices are gaining access to your most critical resources. And this is ultimately how we make unauthorized access impossible.

So now we want to talk a little bit about the future of AI security. We've talked about what Beyond Identity can do today and how it secures AI agents.

But there's more that we're building towards. And so Colton has a demo for us of risk-based access controls in your AI agents, and then also continuous authentication in your AI agents as well as audit controls. So I'm gonna hand over the screen to him.

Thanks, Kasia. So we were sitting around one day just brainstorming about AI agents and chatbots. And given this new era of probabilistic systems, how do you deploy deterministic access control to something like a chatbot that claims it can do anything and everything?

So we ended up building this demo application using our APIs and SDKs to demonstrate deterministic access controls throughout a chatbot workflow.

So I'm gonna go into it a little bit.

So the first thing I'm gonna do is log in just like any other chatbot, and we're using Beyond Identity to log in. So we do all of the device verification in the background as the user logs in. We make sure the device is managed, the OS version is up to date, and disk encryption is on.

And now the user is logged in. So what we built out here is sort of a RAG system that would allow employees to upload documents and then chat with those documents.

But it's important to note that not all employees should have equal access to all documents.

So using Beyond Identity's RBAC system and SDKs, we built this little access control around accessing documents. So, for example, engineering should not be allowed to chat with finance documents.

So I'm gonna start a new chat here, and you'll kinda see this up at the top.

Before any transaction is made in this chatbot, we're verifying the security status of the device that is being used.

So I'll just ask it a question.

Alright.

So it's able to access basically any of the LLMs that you grant to the employees in this group.

So I'm gonna ask it about a document that the marketing team is aware of.

What are the Q3 marketing comments?

So it's gonna go into the RAG system and fetch documents related to the Q3 plans for marketing.

And you can see that it pulled this document because it was a match, and this document was accessible by someone on the marketing team.

Now if we log out and, let's say, we go to engineering at BI, we'll start a new chat and ask about the marketing plans.

Now you'll notice that the documents that were accessible by the marketing team are not accessible by engineering. So this is just an example of using deterministic access control instead of using an LLM to enforce security.
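The idea of filtering documents deterministically before the model ever sees them can be sketched as follows. This is an illustration only, not the demo application's code: the `Document` type, the `retrieve` function, and the naive keyword match (standing in for a real vector search) are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    text: str
    allowed_groups: frozenset[str]  # groups permitted to read this document


def retrieve(query: str, docs: list[Document],
             user_groups: set[str]) -> list[Document]:
    """Return matching documents the user is allowed to read."""
    # Step 1: access control in plain code. Documents the user cannot read
    # are dropped here, so they can never end up in the LLM's context.
    visible = [d for d in docs if d.allowed_groups & user_groups]
    # Step 2: a naive keyword match, used here in place of vector search.
    terms = query.lower().split()
    return [d for d in visible if any(t in d.text.lower() for t in terms)]
```

Because the filter runs before retrieval results reach the prompt, no amount of prompt rewording by an engineering user can surface a marketing-only document.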

So this is just a typical chatbot workflow. You're asking questions. You're asking to see and reference documents.

So what happens if the security posture of the device you're on deteriorates? Let me just temporarily disable my firewall and try to ask it another question.

So according to the Beyond Identity policy we set, you're only allowed to make this transaction, that is, chat with this bot, if your firewall is enabled.

Alright. So I've reenabled the firewall.

Now I'm going to demo the auditing capabilities that we offer.

So back to what we were talking about earlier, every action that these AI agents take should be logged and traceable somewhere. They're either gonna be calling a tool like an MCP server, or they're gonna be accessing documents, or maybe even calling another agent.

And it's important that all of these transactions are logged in detail, whether you're creating a message or accessing a document, and that you can see the security posture of the device for each of these transactions.
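As a rough illustration of what one such log entry could contain, here is a sketch of a structured audit record. The field names and the `audit_record` helper are hypothetical; the actual schema shown in the demo is not described in the transcript.

```python
import json
from datetime import datetime, timezone


def audit_record(user: str, action: str, resource: str,
                 device_posture: dict, allowed: bool) -> str:
    """Build one JSON audit line for a single agent transaction."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,                  # e.g. "message.create", "document.read"
        "resource": resource,              # the document, tool, or agent touched
        "device_posture": device_posture,  # posture snapshot at decision time
        "allowed": allowed,                # the outcome of the access decision
    }
    return json.dumps(record, sort_keys=True)
```

Recording the posture snapshot alongside the decision is what lets a reviewer later see why a given transaction was allowed or denied.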

Okay. Now we're gonna show a little bit about how to manage policy in this system.

So what this allows you to do is basically write a policy for these specific groups of users saying, you know, what is the minimum security score needed? What are some of the device requirements they need to meet to do some of these particular actions?

You can also specify what models they can access and what exactly they can do to the RAG system, such as reading or writing documents to it.
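A policy of this shape could be modeled roughly like the sketch below. The `GroupPolicy` and `Device` types, their field names, and the `is_allowed` function are assumptions made for illustration; they are not Beyond Identity's policy format or API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class GroupPolicy:
    """Hypothetical per-group policy, loosely following the demo's description."""
    min_security_score: int
    require_firewall: bool
    allowed_models: frozenset[str]
    rag_permissions: frozenset[str]  # any subset of {"read", "write"}


@dataclass(frozen=True)
class Device:
    security_score: int
    firewall_enabled: bool


def is_allowed(policy: GroupPolicy, device: Device,
               model: Optional[str] = None,
               rag_action: Optional[str] = None) -> bool:
    """Evaluate one transaction; every check is a plain yes/no decision."""
    if device.security_score < policy.min_security_score:
        return False
    if policy.require_firewall and not device.firewall_enabled:
        return False
    if model is not None and model not in policy.allowed_models:
        return False
    if rag_action is not None and rag_action not in policy.rag_permissions:
        return False
    return True
```

Evaluating this on every transaction, rather than only at login, is what makes the firewall example from the demo work: the same user on the same session is denied as soon as the device stops meeting the policy.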

Okay.

And that's it for the demo.

Awesome.

Well, it looks like you can enforce some strong policies as well as get great visibility into what's actually happening when users are interacting with it. So best of both worlds.

So lastly, just to wrap everything up, some key takeaways. Huy, I'd love some insight from you. If someone is thinking of building or buying an AI agent, or is currently in the process, and just has a lot of question marks on how to secure it or how to start the process, what are some recommendations that you have now that you've gone through the process yourself?

My first recommendation is to know exactly what you want to get out of the agent and give the agent the minimum access that you can. And then I love the idea that you guys are starting to think about using access control for the agent. You start with the chatbot, but I hope that you guys are also going to provide identity to the agent through your SDK or API.

Sounds good. Awesome. Colton, maybe that can be on your road map.

Definitely is. AI is moving quickly, and we're going along with it.

Awesome. Well, that wraps up a really great webinar. Thank you to Huy for being our special guest, and to both of you guys for showing some great demos.

If anything was interesting to you, from how Beyond Identity can secure your AI agents or your LLMs today to Colton's demo of the future of AI security and what we're building towards, reach out to us. You can get a demo at beyondidentity.com/demo. You'll talk to us, hear from us. We're interested to hear your thoughts as well. Thanks, everybody.

Thanks. Thank you.

TL;DR

Full Transcript

Hello, everybody. Welcome to another Beyond Identity webinar. We have some great content today, how to secure your private AI, a guide for enterprises building internal agents.

I'm Kasia. I'm a Product Marketing Manager at Beyond Identity, and we have two, amazing guests with us today, Colton and Huy. So I'm gonna let them introduce themselves.

Huy, you're our special guest. Would you like to introduce yourself?

Yep. My name is Huy Ly, and I am the Head of Global IT Security and infrastructure for Monolithic Power Systems (MPS).

Hi. I'm Colton. I'm a Product Manager here at Beyond Identity, and I'm focusing on the intersection of AI and identity and access management.

Awesome. So we're gonna just start off a little bit about MPS. We're so lucky to have Huy from MPS join us here today to talk about how he has built and secured his AI agents for MPS. So we before we go into that, would love a little introduction on MPS, you don't mind, Huy.

Yeah. MPS is a semiconductor company located headquarter in Kirkland, Washington.

We the company start in nineteen ninety seven by a gentleman named Michael Hsing, and he has been a CEO and cofounder. We are a Fortune 500 company that is specialized in data center, AI, automobile, and a lot of consumer product.

Awesome. Yeah. And you guys have done some great things. I saw that you were on Fortune's one hundred fastest growing companies in the past couple of years, and you've also been a customer of Beyond Identity for the past two or three years.

So a little bit of an agenda of what we're gonna go over today because then we have a lot of great content, and a lot of great demos too, so get ready. We're gonna start off with, how to build AI agents from the ground up.

Thankfully, Huy is here to answer a lot of questions for us. We'll talk about, risks with the rise of AI, how MPS solved AI agent security, also the future of AI security with the demo from Colton, and then lastly, wrapping up with some key takeaways.

So let's get right into it. So first up, you know, we're gonna be doing a little bit of, like, of a q and a session with Huy and then also some demos. So starting off with some q and a on, like, how to build AI agents from the ground up.

It's actually really interesting because most AI adoption starts outside of the IT and security departments. Most of the time, it's product, engineering, or marketing. So what do you think made MPS different in this scenario?

Yeah. For MPS, because we are a semiconductor company, so a lot of our product are built based on intellectual property.

And because of that reason, we do not want our engineer to communicate with JCPT perplexity or any of the commercial foundation and leaking out our secret sauce to the world. Right? So because of that, what the footing that we did what we blocked all the AI asset to the Internet.

But we cut but we're but we want to make sure that we continue innovate. So to solve that problem, we decide that we are going to build our own private AI for MPS.

Awesome. Got it. And that kind of answers my next question. Like, why did MPS choose to build versus buy? You know, sounds like, you know, you didn't want to lose the secret sauce, so contain a lot of information inside. Was there anything else to the that decision?

Yeah. I I think there's there's a multiple reason for that. Right? So numb number one, because we don't want to lead our intellectual property to the world. So and there, it's actually auctioned out there in the marketplace.

The Clo, you know, the Clo model, like OpenAI, and then the open source model in the OLAMA community.

And because my team have been, you know, in the DevOps space for a long time, we you know, majority of my team came with infrastructure, large scale data center, infrastructure as code. So because of that, what we what we did, what we basically spending the last the the first two, three months to explore what the option and, importantly, how much it cost because everyone want to jump into the AI bandwagon. But the price hack, it will be very expensive if you're looking at some of the big label. Right? So if you go with the alpha AI factory or go with HPE for for the same concept, it will be ten of million dollar. So it's it's a very difficult thing to try to sell to a executive. So because of that, we decided to build our own.

Yeah. And you've already gotten your AI to be used by a thousand plus employees, so great traction there.

In that case, like, would you, like to share a little bit more about your, your AII agents? Like, can we see a little bit of how they work and how they were built?

Yeah. I can give you a quick demo on how we got, how we build our agent, importantly, how we integrate with Beyond Identity.

Where I came from is security. So there is Zero Trust and validate, especially with AI. So with that, I'm going to give you a quick demo. On my screen, you will see the login screen that the employee when they actually accessing the system. So when I click on log in, here you go. As simple as that.

You are now in our basically, you're in our chat box environment where you can do text to image. You can do content creation. You can do co augmentation.

All that can be done with this interface.

Wow. Incredible. Yeah. And you've built, multiple AI agents for various scenarios, if I remember correctly.

Yep. So as you can see here, did it a lit up all the different model that that we have in our infrastructure. And, if you look on the left right here, basically, the this is all the prompt that I use to communicate with the agent.

Awesome. Very cool. Yeah. And, I think I remember that this, this was something that has been, like, a passion of yours, things that you're doing outside of work to continuously building on this AI agent.

Yeah. It it actually it my weekend fun job that, that I'm working putting audit together. So a lot of time myself and also some of my team member working together in putting up update infrastructure. And let's see. I want to share with you another metric that we use to basically monitoring the usage.

So as you can see on the screen, did it basically give you a number of the number of token that we have been using on this? So when I click on today, that's telling me how many call have been made on the platform and, importantly, how many token that have been generate on the daily basic.

On the weekly basic, you know, we generate almost three million token. Just imagine if you have to pay it to, like, OpenAI or or any other third party company, that could be a a significant cost for for your IT department.

Right. Yeah. You've definitely reached economies of scale with this. And I love that you've added a top user to see who's really engaging with the AIs, you know, on a weekly or daily basis.

Yep. You can see all that and what with also you can also track to see what model it being used so that way you can to make sure that it actually tune in to support all the the concurrent user as well.

Very cool. Awesome. Colton, any other you know, anything else you would want to see with this AI agent?

Yeah. How so how are you sort of siloing the agents from human users?

So so did it think of it as a chatbot. And then the agent that we build, what we want to do it because from security perspective, it require to interact with different subset of data. So the way that you set it up is let's say, for example, one of the AI agent that I'm billing for the people team, we have hundred of new job that we are hiring people. So today, the the recruiter, what they have to do is they have to go to Workday, and they have to screening all the resume to identify a candidate for to bring in for interview.

So the AI agent that we built for the purpose, it only allow access to the recruiter.

And then we and with that, we will allow us to separate the the user base on who can asset, what and when, and for what function.

And that is another layer of security from an agent versus versus the the the traditional chatbot where everyone have access to all the different model.

Got it. Very cool. Thanks for showing this.

We're gonna talk a little bit, also about, you know, what new risks these AI agents pose.

Colton, would you like to talk a little bit more about that?

Yes. I I think the key point here is that AI systems like LLMs operate, probabilistically. So they don't make the same decision twice, And attackers know this, and they exploit, basically, the lack of deterministic controls and just, you know, continuously try different prompts until they get the outcome they want.

So you can't and you shouldn't rely on these LLM models to enforce security.

What you really need is this sort of binary enforceable controls around it.

And then another thing I think we're seeing is you see all of these, like, flashy AI tools that say they can do everything and anything.

I think it's important that you always understand what actions these AI agents are taking on behalf of your users.

So, like, everyone thinks of identity at login, but in AI workflows, sensitive data can be accessed by agents, APIs, MCP servers, chain prompts, and all of this happens, you know, well after the initial authentication.

So these new access points, what you're seeing in, like, these new tools is they often lack monitoring or policy enforcement.

So if you're not securing each stage in your AI workflows, for example, like, the agent wants to go use a tool or the agent wants to read a document, you're really leaving gaps in your system.

Yeah. Hold on. You wanna ask the next question?

Yeah. So if we like, what new risks are you concerned with with the rise of these new AI agents?

So so you brought up all all the great point, and, actually, those are some of the concern that we have. That's why it is really important as a an AI agent builder.

Whenever you build an agent, you don't want an agent to do too many function. That's one. Right? So you you want to have you've got to have multiple agent, and each agent only responsible for a specific function. That way, you give the the agent very little asset to what the agent can do or and only do for that job. Mhmm. And that that is for now, that is the way that you you to, you know, protecting the security.

In the future, I believe that there are a lot of company out there always looking at and see how you can monitor, control that through policy. But as of right now, this this this still a very early stay of development, so not a whole lot of tool available yet.

Got it. And this is a perfect segue into, you know, how, you know, companies and MPS have tackled AI agent security. So, a couple of questions there, Huy. In terms of, like, who's accessing your agents, is it just employees or your partners, contractors? Are there unmanaged devices like mobile phones that are accessing your AI agents?

So there are there are two two things that I I basically put it into my AI agent. One is a service account if I need to access a certain system with very limited to the dataset that they have access to.

And then and then secondly, we build what we have is a life cycle management for the agent.

So our team, you know, having basically CICD that we're building into the agent and continue monitor for the life of the library because majority of the code that we are using in the back end is running on Python. So we constantly monitor that. We're scanning the library, make sure that there's no vulnerability within the library. So so there are some of the the manual control that we're doing somewhat a semi automatic in how we're building our agent.

And for the the end user that accessing the agent, we follow the same protocol that I shared with the earlier by leveraging beyond identity. So we control at the group level. So when they using the agent, they have to go to the identity p and, you know, to to ask that the agent is stopped.

Got it. And is it true that your employees could even access your AI agent AI agents through, like, a mobile phone, or is it just strict to corporate devices?

Everything is only strict to corporate devices because, again, we are an intellectual company. So our one of our policy, employee only conduct MPS business on MPS device.

Got it. Got it. That makes sense.

And so, you know, MPS chose a couple of years ago to, be a customer of Beyond Identity, but why currently secure your AI agents with Beyond Identity as well?

Yeah. So so we we chose Beyond Identity because of the pain point of the password that have been constantly phishing our user account. And my VIP, it constantly got hit with the phishing attack. And even though we use MFA and that's at all from other partner, When when your your your executive trying to approve an offer letter or a signed contract and not able to get into the system, that's considered a failure. So because of that, I reaching out. I found Beyond Identity, and we have been partner working very closely in, you know, leveraging your product as the identity.

I have several conversations with the team, constantly work closely with the team, and I think that, you know, agent at some point, as we continue to grow our massive of agent, it's really a digital employee per se as part of the agent. So I think Beyond would be the best candidate to help me secure that.

That's awesome. That's great to hear on our side.

And you kind of, you already showed us a snippet of how it looked beforehand, basically, like, logging out and logging back in. So maybe we don't have to show that again. But, yeah, it was, like, painless, passwordless, and it eliminates phishing risks.

I always tell my team whenever you want to build something, keep it simple, stupid.

Exactly. Exactly. So, with that, would love to talk a little bit about the benefits on Beyond Identity. Saw Huy demo this earlier in this webinar of, you know, delegating authentication to Beyond Identity, doing a finger swipe or, an unphishable PIN as a fallback, and then doing a on sort of device posture checks, which the user doesn't even see it's all happening in the background before getting access to his AI agent.

And so, like we mentioned, he his use case was to eliminate phishing, eliminate passwords. That's exactly what we do. And even more than that, we make unauthorized access impossible. So replacing all phishable credentials like push notifications OTPs with a device bound passkey, to prove the ownership of the device, that it's the right device, and then also, proving the right user with that biometric check or PIN.

And also we also provide all, device security assurance for all users, although we is using the policy just to make sure that all devices are corporate devices.

Beyond Identity also works on unmanaged devices, making sure they're not rooted, making sure they're not jailbroken, also works on partner or contractor devices.

You can also leverage your entire security stack, so thinking of your EDR, MDM, ZTNA, the risk signals from them. So for instance, if CrowdStrike flags, hey, I no longer have full disk access to this device, then, Beyond Identity will remove access from that device until it is re remediated. So blocking any type of device risk posture changes in real time. Be confident that all your users are secure. So instead of having to wait, eight hours for another re authentication, which just, you know, adds more work for the user, Beyond Identity in the background is continuously checking the device posture and if anything is changing.

And here's just a simple diagram to see how Beyond Identity works and how it fits into the actual flow. On the left hand side, we have users and devices, and these, two entities are ultimately just trying to get to work. They're trying to get to these applications help them be productive, whether that's, ChatGPT, Claude, or the AI agents that you're building for your enterprise.

But the process really starts with a biometric check and the device, risk posture signals.

Those happen continuously, and then they're rich enriched by your EDM, your m EDR, MDM, ZTNA. So if any of the posture changes here, it flags it to Beyond Identity and can revoke access at any time to make sure that only the right users, only the right devices are gaining access ultimately to your most critical resources. And this is ultimately how we make unauthorized access impossible.

So now we want to talk a little bit about the future of AI security. We've talked about, you know, what Beyond Identity can do today, how it secures AI agents.

But there's more that we're building towards. And so Colton has a demo for us of, risk based access controls in your AI agents and then also continuous authentication in your I AI agents as well as audit controls. So I'm gonna hand over the screen to him.

Thanks, Kasia. So we we were sitting around one day just brainstorming about, you know, AI agents, chatbots. And given this new era of, like, probabilistic systems, how do you deploy deterministic access control to something like a chatbot that claims it can do anything and everything?

So we ended up building this sort of demo application using our APIs and SDKs to demonstrate, deterministic access controls throughout, like, a chatbot workflow.

So I'm gonna go into it a little bit.

So the first thing I'm gonna do is log in just like any other chatbot, And we're using Beyond Identity to log in. So we do all of the device verification in the background as the user logs in. We make sure, the device is managed, the OS version is up to date, disk encryption is on.

And now the user is logged in. So what we what we built out here is sort of a rack system that would allow employees to upload documents and then chat with those documents.

But it's important to note that not all employees should have equal access to all documents.

So using Beyond Identity's, RBAC system and SDKs, we sort of built this little access control around accessing documents. So, for example, engineering should not be allowed to chat with finance documents.

So I'm gonna start a new chat here, and you'll kinda see this up at the top.

Before any transaction is made in this chatbot in the system, we're verifying the security status of the device that is being used.

So I'll just ask it a question.

Alright.

So it it's able to access basically any of these LLMs that you grant to the employees in this group.

So I'm gonna ask it about a document that the marketing team is aware of.

What are the q three marketing comments?

So it's gonna go into the Rack system and fetch documents related to the q three plans for marketing.

And you can see that it pulled this document because it was a match, and this document was, accessible by someone on the marketing team.

Now if you log out and let's say we go to engineering at BI, And we'll start a new chat and ask about the marketing plans.

Now you'll notice that the documents that were accessible by the marketing team are not accessible by engineering. So this is just an example of using access control before using deterministic access control instead of using an LLM to enforce security.

So this is just typical chatbot workflow. You're asking questions. You're asking to see and reference documents.

So what happens if the security posture of the device you're on deteriorates? So let me just temporarily, like, disable my firewall and try to ask it another question.

So according to the Beyond Identity policy we set, you're only allowed to make this transaction, that is, chat with this bot, if your firewall is enabled.
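A rough sketch of that per-transaction check, assuming the application receives a fresh posture reading with every request: the posture is evaluated before the message ever reaches the model, and the request is refused if any required check fails. The `DevicePosture` fields mirror the checks mentioned in the demo (managed device, OS version, disk encryption, firewall), but the class, the `handle_chat` function, and the message format are hypothetical and not a real Beyond Identity API.

```python
from dataclasses import dataclass


@dataclass
class DevicePosture:
    managed: bool
    os_up_to_date: bool
    disk_encrypted: bool
    firewall_enabled: bool


# Checks this hypothetical policy requires for a chat transaction.
REQUIRED_CHECKS = ("managed", "os_up_to_date", "disk_encrypted", "firewall_enabled")


def failed_checks(posture):
    """List the names of required checks the device currently fails."""
    return [name for name in REQUIRED_CHECKS if not getattr(posture, name)]


def handle_chat(message, posture, answer):
    """Evaluate posture on every transaction, before calling the model."""
    failures = failed_checks(posture)
    if failures:
        return "Blocked: device failed " + ", ".join(failures)
    return answer(message)


def fake_model(message):
    # Stand-in for the LLM call (assumption for this sketch).
    return "answer to: " + message


healthy = DevicePosture(True, True, True, True)
firewall_off = DevicePosture(True, True, True, False)

ok_reply = handle_chat("hello", healthy, fake_model)
blocked_reply = handle_chat("hello", firewall_off, fake_model)
```

Because the check runs on each message rather than only at login, turning the firewall off mid-session blocks the next question, and turning it back on restores access.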

Alright. So I've reenabled the firewall.

Now I'm going to demo the auditing capabilities that we offer.

So, sort of back to what we were talking about earlier, every action that these AI agents take should be logged and traceable somewhere. So they're either gonna be calling a tool like an MCP server, or they're gonna be accessing documents or maybe even calling another agent.

And it's important that all of these transactions are logged in detail, you know, whether you're creating a message, accessing a document, and that you can see the security posture of the device for each of these transactions.
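One way to picture such an audit trail, as a sketch only: each transaction becomes one structured record that captures who acted, what they did, what they touched, whether it was allowed, and a snapshot of the device posture at that moment. The field names, the action strings, and the in-memory `AuditLog` class are assumptions made for illustration. A real deployment would write to durable, tamper-resistant storage.

```python
import json
from datetime import datetime, timezone


def audit_record(actor, action, target, posture, allowed):
    """Build one audit entry, including a device posture snapshot."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,                   # user or agent identity
        "action": action,                 # e.g. "message.create", "document.access"
        "target": target,                 # document, tool, or agent acted upon
        "device_posture": dict(posture),  # posture at the time of the action
        "allowed": allowed,               # outcome of the policy decision
    }


class AuditLog:
    """Append-only, in-memory log of JSON lines (stand-in for real storage)."""

    def __init__(self):
        self.entries = []

    def write(self, record):
        self.entries.append(json.dumps(record, sort_keys=True))


log = AuditLog()
log.write(audit_record("user@example.com", "document.access", "q3-plan",
                       {"firewall_enabled": True}, True))
log.write(audit_record("user@example.com", "message.create", "chat-1",
                       {"firewall_enabled": False}, False))
```

Recording the posture alongside each action is what lets a reviewer later answer both "what did the agent do?" and "what state was the device in when it did it?".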

Okay. Now we're gonna show a little bit about how to manage policy in this system.

So what this allows you to do is basically write a policy for these specific groups of users saying, you know, what is the minimum security score needed? What are some of the device requirements that they need to do some of these particular actions?

You can also specify what models they can access and what exactly they can do to the RAG system, such as reading or writing documents to it.
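A policy like the one described could be represented as plain data per group, with a single function that evaluates it. This is only a sketch under stated assumptions: the `GroupPolicy` fields, the score thresholds, the model names (`model-a`, `model-b`), and the `is_allowed` function are all hypothetical and do not reflect Beyond Identity's actual policy schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroupPolicy:
    min_security_score: int      # minimum device security score
    required_checks: frozenset   # device requirements that must pass
    allowed_models: frozenset    # models this group may use
    rag_actions: frozenset       # e.g. {"read"} or {"read", "write"}


# Hypothetical policies for two groups.
POLICIES = {
    "marketing": GroupPolicy(70, frozenset({"firewall_enabled"}),
                             frozenset({"model-a", "model-b"}),
                             frozenset({"read", "write"})),
    "engineering": GroupPolicy(80, frozenset({"firewall_enabled", "disk_encrypted"}),
                               frozenset({"model-a"}),
                               frozenset({"read"})),
}


def is_allowed(group, score, passed_checks, model, rag_action):
    """Evaluate a request against the group's policy; unknown groups are denied."""
    policy = POLICIES.get(group)
    if policy is None:
        return False  # deny by default
    return (score >= policy.min_security_score
            and policy.required_checks <= set(passed_checks)
            and model in policy.allowed_models
            and rag_action in policy.rag_actions)
```

For example, `is_allowed("engineering", 85, {"firewall_enabled", "disk_encrypted"}, "model-a", "write")` is refused here because the engineering policy grants read access only.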

Okay.

And that's it for the demo.

Awesome.

Well, it looks like you can enforce some strong policies as well as have great visibility into, you know, what's actually happening when users are interacting with it. So best of both worlds.

So lastly, just to wrap everything up, some key takeaways. Huy, I'd love, you know, some insight from you on, you know, if someone is thinking of building or buying an AI agent or is currently in the process too and just has a lot of question marks on how to secure it, or how to just, like, start the process. You know, what are some recommendations that you have now that you have gone through the process?

My first recommendation is to know exactly what you want to get out of the agent and give the agent the minimum access that you can. And then I love the idea of you guys start thinking about, using asset, you know, for the agent, you know, like, you start with chatbot, but I hope that you guys are also going to provide identity to the agent by accessing your SDK or API.

Sounds good. Awesome. Colton, maybe that can be on your road map.

Definitely is. AI is moving quickly, and we're going along with it.

Awesome. Well, that wraps up a really great webinar. Thank you to Huy for being our special guest, and to both of you guys for showing some great demos.

If anything was interesting to you from how Beyond Identity can secure your AI agent or your LLMs today to, you know, Colton's demo of the future of AI security and, you know, what we're building towards, reach out to us. You can get a demo at beyondidentity.com/demo. You'll talk to us, hear from us. Yeah. We're interested to hear your thoughts as well. Thanks, everybody.

Thanks. Thank you.
