How AI Is Accelerating Threats

TL;DR

Full Transcript

Hi, everyone. Welcome to another Beyond Identity webinar. I am super excited about this one, and not only because I love AI.

I do love AI, but it's also because we get to share a kind of meta threat research on some interesting developments in AI social engineering tactics. And if you stick around, there's a fun surprise at the end: a quick preview of the next iteration of Beyond Identity's product and how it applies to agentic AI. Before I get carried away, my name is Jing. I lead marketing here, and I am joined by the excellent Sarah Cecchetti.

If you wanna quickly introduce yourself, Sarah.

Hi. I lead product strategy for Beyond Identity.

And she's also one of the authors of the OIDC white paper. I think you would call that, like, the definitive white paper on agentic AI.

And identity and access management. Yeah.

Yeah. We'll try to send a link out after the show. So we are going to dive right in here.

So we are talking about winning the AI arms race and why your current defenses might be failing, but I don't deal in doom and gloom. So we're actually gonna talk to you about how you can defend yourself in a deterministic way.

Let's get started. Okay.

So I wanna start with this slide because it turns out there's a lot of conversation about: should we deploy AI? How should we use AI? You know, at Beyond Identity, we've gone through a few rounds of how we should train up our workforce. So while we're going through all of this, attackers are actively putting AI to use for malicious purposes, because a tool is just a tool.

Right? I can take a hammer. I can build a deck. I can't build a deck.

I don't know where to start. But theoretically, one could build a deck or one could chase, you know, their little cousin or whatever. Don't do that either. But a tool is a tool, and how you use that tool determines kind of the outcome and the impact you have in the world.

Right? So while AI is accelerating our workflows (I'm a proud vibe coder), it is also accelerating malicious workflows. I think the new Anthropic report on how they disrupted a Chinese adversary using Claude Code coined the term vibe-hacking. Actually, I don't know if Anthropic coined the term vibe-hacking, but it is being done.

And now there's this two point eight billion dollar industry for malicious AI tools and services, and that's just on the dark web, right? Two point eight billion is no joke.

I'm pretty sure NVIDIA's market cap is, what, like three hundred seventy-eight billion? I don't know. But it's pretty big. So there's a whole kind of substrate out there that is just dedicated to the development of, investment in, and use of these AI tools and services.

We'll cover three of them today, and they're actually really interesting in terms of how they're being deployed.

Additionally, this number has actually gone up, but the research I had at the time showed a sixty percent surge in AI-powered phishing attacks. Phishing and social engineering will just never look the same, right? And then, in terms of that speed gap I was talking about, where we're debating it and attackers are using it, there's apparently a gap of four thousand one hundred fifty-one percent between attackers that use generative AI and defenders who use generative AI to defend themselves.

That is tremendous. Right? So if this is the landscape, what do we do about it? How is this actually being deployed?

Well, you are in for a treat, because we'll actually cover three of the new tools in the AI arsenal. So there's WormGPT, and I gave them some fun names just so they're a little bit differentiated. Once a marketer, always a marketer. But I'm actually gonna hand it to Sarah at this point to walk us through how these tools work and the kinds of outcomes you can drive with them. Let me advance this slide.

The market cap for NVIDIA right now is four trillion dollars.

It is? Oh, I was off by, like, a multiple of ten.

So, exciting times, and much of that is funneling into exactly what we're talking about today.

So the first thing we're seeing in the field is called WormGPT. What it allows attackers to do is write perfect English, and not just perfect, but on-tone for the person they are trying to imitate. Typically in the past, an attacker would have to craft their own copy for their phishing emails. Now they can use AI to do that, so you don't need a native English speaker to craft a great phishing email.

And likewise, when your employees open up an email, they're not gonna be able to rely on mistakes in punctuation, grammar, and things like that in order to catch it. It writes flawless phishing emails. And as you can see from the example here, it might say, "Hey, quick question." That's not a grammatically complete sentence, but it's exactly how people talk in emails. So it hits the mark right in between "this is so bad it's easy to tell" and "this is so perfect it's obviously written by a GPT." It captures the exact tone that your employees use, so it's actually completely undetectable in terms of the tone and style of the English copy in the phishing email.

Yeah. This one's really fun because, when I was reading over the threat researchers' write-up on this, they called it unsettling and remarkably persuasive. So the first thing I thought about was all of the mandatory phishing training we put our users through, right? What's the point if the emails sound just like Sarah or just like our CFO, right?

So Yep.

The next one I wanna talk about is FraudGPT, and this one really does a lot of things. When you are an attacker, it actually requires a lot of work on your part. They sit in offices just like we sit in offices, and they have to write computer programs that deploy malware.

They have to write the computer programs that imitate the login page of your organization so that they can capture the credentials of your employees, right? All of that is work and it slows them down. It means that they can't attack as many employees. They can't attack as many organizations as they could otherwise.

What FraudGPT does is enable them to automate all of that. Just like we can use AI to automate our software development for good, they can use AI to automate their software development for evil.

And so they can very easily put up a page, and just like the perfect copy we talked about with WormGPT, that login page will be a pixel-perfect match for your existing login page. So there is no way for an employee to tell the difference just by looking at what the browser renders and thinking, oh, this is different from my normal login page, because it's not. AI enabled them to make it look exactly like the page they're used to looking at, where they're used to entering their credentials.

And it automates the workflows hackers have to go through: not just deploying malware, not just creating login pages, but also writing software to find vulnerabilities themselves. So if they do phish your organization and get access to your code base, FraudGPT can analyze it and find vulnerabilities that let them exploit your software and take your company down or exfiltrate data. It's a very powerful tool that attackers are now using, and it makes attacks much simpler for them to execute.

Yeah. And it's only two hundred dollars a month, right? How many coffees is that?

I'm in New York.

Like ten dollars, so it's like twenty coffees. And what I find interesting is that we have a screenshot from FraudGPT. With some of the previous iterations of malicious tooling, you had to actually open your terminal and interact with the CLI.

Nowadays, there's a UI. There are jailbroken LLMs in the background generating this kind of stuff, and it's almost like a nice user experience. So it occurs to me that they really treat this almost like a product, right?

They iterate. They take feedback from Telegram. No wonder there's almost like a three billion dollar industry kind of underlying this whole exchange.

Yep. So the next one we're gonna talk about does that exact thing. It's called SpamGPT.

And previously, when you sent spam, you generally had to do it yourself. There were some pieces of software that helped you send mail and deploy it, but there was nothing with a slick UI that would manage an entire email campaign end to end, the way B2B SaaS does for a normal organization. Now that exists. AI has enabled something called SpamGPT.

It allows you to A/B test. It allows you to manage all of your DKIM registrations, all of your SMTP settings, and all of your certificates, and make sure your emails are absolutely perfect so they get through all of the spam filters, with all the right settings and all the right encryption, so they look and behave just like actual emails. And then, after they get into the inbox, attackers can A/B test different subject lines, see which one works best, and send that one to everyone in your organization.

So this is a very thorough piece of software that can run entire campaigns at the push of a button, which makes it much, much easier for attackers to compromise your organization through spam and phishing emails.

And so the point we're trying to make by showing you these tools is that phishing training is really ineffective now. It used to be that when humans without the power of AI were trying to get into your organization, you could rely on spelling and grammar that was either not good enough or too perfect. You could rely on generic greetings: they didn't know exactly who they were talking to and didn't have the technology to figure that out, so you would see things like "Dear customer" and "To whom it may concern" that were really easy to pick out. They would use urgency and threats, like, oh, if you don't do this within two weeks, we're going to expose all the pictures on your computer.

And it would be very simple to figure out, oh, these people are trying to threaten me and make me do something quickly; I know this is a spam or fraud email. And they would have suspicious links with mismatched URLs, no HTTPS, things like that. So human attackers were fairly easy to spot, and that's why we've done so much phishing training in organizations up until today. And today, that phishing training is really ineffective. AI has allowed attackers to strike a tone that is picture perfect for an average corporate email. They use personalized greetings, where they pick out the name of the person they are attacking and put it directly in.

They use subtle tactics rather than that really obvious, urgent, threatening email. You'll see things that are much more subtle, like, hey, how are you doing? Can you send me information on this piece of software, or on this financial report? Things that are friendly and inviting, not threatening. It's a whole different tone and a whole different way of attacking humans.

And they'll still have completely valid links, right? Because AI enables them to keep all of their certificates in order, so everything looks just like the real thing. And so phishing training, we're seeing in the field, has become completely ineffective in this new world.
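As a rough illustration of why the old red flags stop firing, here is a hypothetical checker for two classic training tips, link text that names a different host than the real target, and links without HTTPS. The function name and all example domains are made up for this sketch; it only shows that a sloppy hand-built link trips both checks while a polished link on a lookalike domain with a valid certificate trips neither.

```python
from urllib.parse import urlparse


def training_red_flags(display_text: str, href: str) -> list[str]:
    """Return the classic 'spot the phish' warnings a trained user might notice."""
    flags = []
    target = urlparse(href)
    if target.scheme != "https":
        flags.append("no HTTPS")
    # Only compare hosts when the visible link text is itself a URL.
    if display_text.startswith(("http://", "https://")):
        shown_host = urlparse(display_text).hostname
        if shown_host != target.hostname:
            flags.append(f"text shows {shown_host} but link goes to {target.hostname}")
    return flags


# A sloppy, hand-built phishing link trips both checks.
print(training_red_flags("https://portal.example.com", "http://203.0.113.7/login"))
# ['no HTTPS', 'text shows portal.example.com but link goes to 203.0.113.7']

# A polished link on a lookalike domain with a valid certificate trips neither.
print(training_red_flags("https://portal.examp1e.com/q3-report",
                         "https://portal.examp1e.com/q3-report"))
# []
```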

Yeah. So, in other words, and you know I'm always trying to avoid FUD, this really is not a fair fight, right? This is pitting human beings against LLMs, against AIs.

And this is really not a fight you can afford to lose. I don't have to belabor the point about the cost of incidents and the cost of a breach, which goes well beyond a regulatory slap on the wrist, right? There's also the real loss and erosion of brand and trust with your customers, which is really hard to get back. And the thing I wanna point out about AI and LLMs is what I think is the fatal flaw here: AI-powered attacks are designed explicitly to learn from and mimic good patterns, human patterns.

They're designed to learn, iterate, and be trained on what human beings look like, or what normal behavior looks like. So take a probabilistic system that looks for unusual logins or flags suspicious activity: when an attack looks normal in every respect, it simply slips past all of those detections. Probabilistic defense ultimately becomes a losing proposition in the age of AI. So, like I said, I don't deal in pessimism for too long.

I'll indulge for a little bit. But it turns out there are ways to stand up against the malicious bad actors. And the core principle comes down to let's move away from probabilities and detection and response and actually implement a layer of preventative deterministic defense. And a deterministic system ultimately does not make guesses.
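To make the probabilistic-versus-deterministic contrast concrete, here is a toy model. Everything in it is hypothetical: the `LoginAttempt` fields, the weights, the 0.5 threshold, and the `has_bound_key_proof` flag, which stands in for actually verifying a signature from a hardware-bound key. It only shows that an attack mimicking normal behavior scores as low-risk, while a yes/no cryptographic check still refuses it.

```python
from dataclasses import dataclass


@dataclass
class LoginAttempt:
    hour: int                  # local hour of day, 0-23
    country: str
    device_seen_before: bool   # a fingerprint an attacker can imitate
    has_bound_key_proof: bool  # hypothetical: valid signature from a hardware-bound key


def risk_score(a: LoginAttempt, usual_country: str = "US") -> float:
    """Probabilistic style: add weights for anything that looks unusual."""
    score = 0.0
    if a.hour < 6 or a.hour > 22:
        score += 0.3
    if a.country != usual_country:
        score += 0.4
    if not a.device_seen_before:
        score += 0.3
    return score


def probabilistic_allow(a: LoginAttempt, threshold: float = 0.5) -> bool:
    return risk_score(a) < threshold


def deterministic_allow(a: LoginAttempt) -> bool:
    """Deterministic style: no score; the cryptographic proof is present or it is not."""
    return a.has_bound_key_proof


# An AI-driven attack that mimics normal behavior but holds no device-bound key.
mimic = LoginAttempt(hour=10, country="US", device_seen_before=True, has_bound_key_proof=False)
print(probabilistic_allow(mimic), deterministic_allow(mimic))  # True False
```

The same model also shows the flip side: a genuine user logging in at 3 a.m. from abroad on a new laptop would be blocked by the score but admitted by the key check.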

It verifies, and it operates on binaries, cryptography, and hardware-backed certainty. So there are four key elements to a deterministic identity defense, right? The first, and I would say this is probably your most fundamental layer: do you have a hardware-backed, device-bound identity, with its key secured in hardware, that defends against things like phishing or social engineering, no matter how clever the lures look?

So when a user logs in, the service must request a cryptographic signature that can only be produced by the private key stored in that device's secure enclave. It's the core sort of magic, or, I don't wanna say magic. It's the core proposition of FIDO.

Except FIDO has synced passkeys now and all that, and that's a whole different ballgame. We do have a webinar on FIDO and synced passkeys if you're interested. But ultimately, you need to start with a strong identity. And then, you know, it's not just the user that logs in.

It's their endpoints and their devices. So you wanna layer on real-time device posture evaluation for things like: is it patched? Is it encrypted? Blah blah blah.
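The challenge-and-signature step can be sketched as a toy flow loosely modeled on WebAuthn. Assumptions to flag loudly: the origin `https://login.example.com`, the function names, and the JSON layout are hypothetical, and to stay within Python's standard library the sketch swaps in a Lamport one-time signature over SHA-256 where real authenticators typically use ECDSA P-256 or Ed25519 keys held in a secure enclave or TPM. Real WebAuthn also signs authenticator data and scopes each credential to its relying party, so a lookalike domain usually never gets a signature at all; this sketch only shows why a pixel-perfect phishing page still fails the server's origin and challenge checks.

```python
import hashlib
import json
import secrets

# Stand-in signature scheme: Lamport one-time signatures over SHA-256, used only so
# the sketch runs on the standard library. Each key pair must sign just once.


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _bits(message: bytes) -> list[int]:
    digest = _h(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def keygen():
    """Private key stays on the device; only the public key goes to the server."""
    private = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public = [(_h(a), _h(b)) for a, b in private]
    return private, public


def sign(private, message: bytes) -> list[bytes]:
    return [private[i][bit] for i, bit in enumerate(_bits(message))]


def verify_signature(public, message: bytes, signature: list[bytes]) -> bool:
    if len(signature) != 256:
        return False
    return all(_h(part) == public[i][bit]
               for i, (part, bit) in enumerate(zip(signature, _bits(message))))


EXPECTED_ORIGIN = "https://login.example.com"  # hypothetical relying-party origin


def new_challenge() -> str:
    return secrets.token_hex(32)


def client_sign_in(private, challenge: str, page_origin: str):
    # The browser records the origin it is really on; a pixel-perfect copy of the
    # login page hosted elsewhere cannot make it claim the real origin.
    client_data = json.dumps({"challenge": challenge, "origin": page_origin}).encode()
    return client_data, sign(private, client_data)


def server_verify(public, challenge: str, client_data: bytes, signature) -> bool:
    data = json.loads(client_data)
    return (verify_signature(public, client_data, signature)
            and data["challenge"] == challenge
            and data["origin"] == EXPECTED_ORIGIN)


# Legitimate sign-in on the real origin.
private, public = keygen()
challenge = new_challenge()
print(server_verify(public, challenge, *client_sign_in(private, challenge, EXPECTED_ORIGIN)))  # True

# A phishing page relays a real challenge; the browser stamps the lookalike origin.
private, public = keygen()  # fresh key, since Lamport keys are one-time
challenge = new_challenge()
print(server_verify(public, challenge,
                    *client_sign_in(private, challenge, "https://login.examp1e.com")))  # False
```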
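A minimal sketch of a posture check, assuming the device reports its OS version, disk-encryption status, and firewall state. The `DevicePosture` type, the `MIN_OS_VERSION` threshold, and this particular set of checks are illustrative assumptions, not Beyond Identity's actual signal set.

```python
from dataclasses import dataclass


@dataclass
class DevicePosture:
    os_version: tuple[int, int, int]
    disk_encrypted: bool
    firewall_on: bool


MIN_OS_VERSION = (14, 5, 0)  # hypothetical patch level an admin might require


def posture_failures(p: DevicePosture) -> list[str]:
    """Return every failed check; an empty list means the device is compliant."""
    failures = []
    if p.os_version < MIN_OS_VERSION:  # tuples compare element by element
        failures.append("OS not patched")
    if not p.disk_encrypted:
        failures.append("disk not encrypted")
    if not p.firewall_on:
        failures.append("firewall off")
    return failures


print(posture_failures(DevicePosture((14, 2, 1), disk_encrypted=True, firewall_on=False)))
# ['OS not patched', 'firewall off']
```

Returning the full list of failures, rather than a single pass/fail bit, lets a policy decide what to do about each one.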

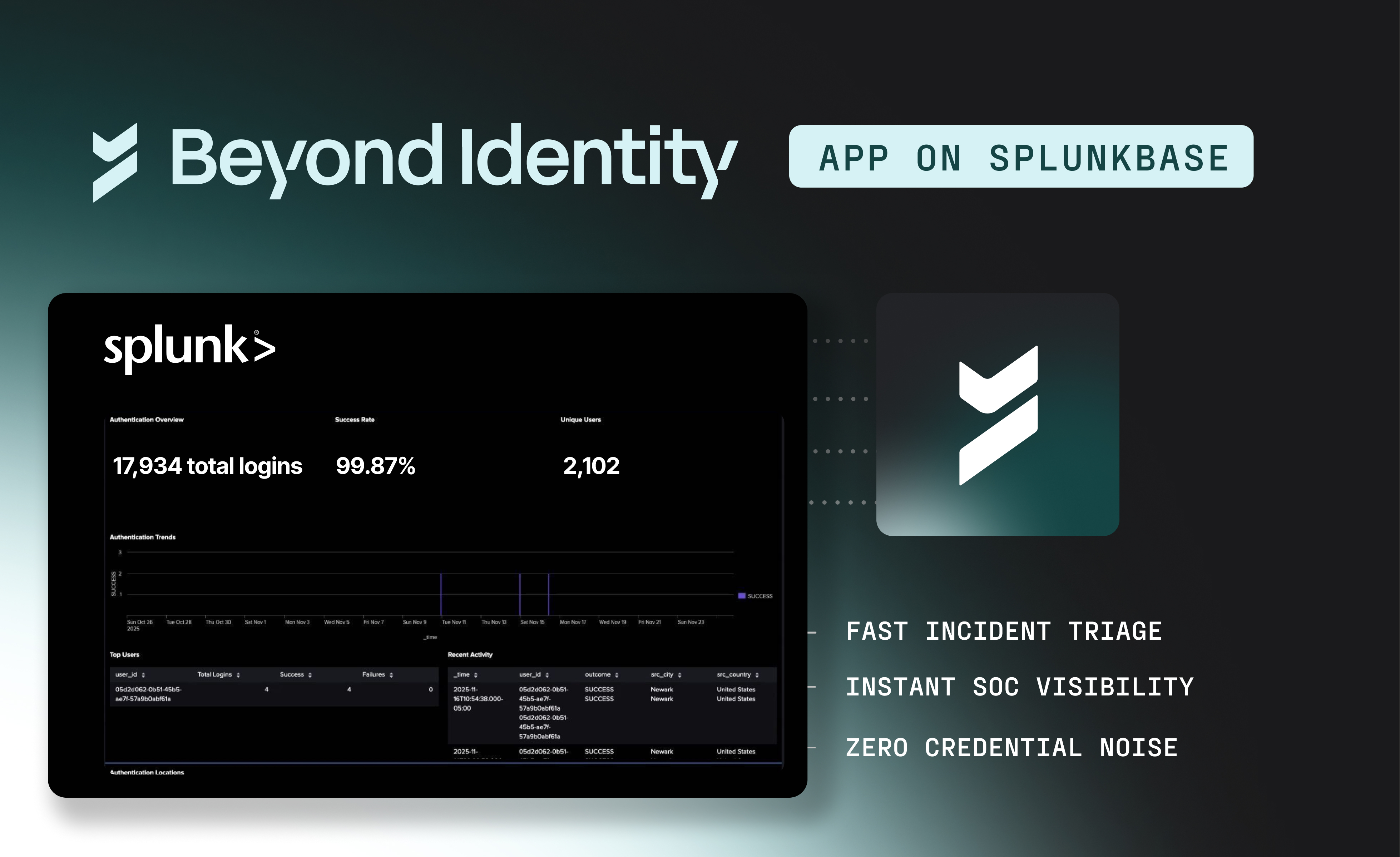

And then you can even pull in third-party risk signals as part of that evaluation so you have a complete picture, right? And then, last but not least, for Beyond Identity at least, we think it's very important to validate user and device trust continuously. So once you have that strong identity, once you have these immutable signals of device trust, what if something changes mid-session?

So we implement a policy and access control system that continuously enforces your security policy. It's not just a point-in-time check. If something falls out of compliance, you're not dead in the water, right?

There's something that you can do with it, which is to take a policy action.
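Continuous evaluation can be sketched as re-running policy whenever any signal changes, not only at login. This is a hypothetical model: the `Session` type, the signal names (including `edr_reports_clean`, standing in for a third-party risk signal), and the choice of revocation as the policy action are all assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    user: str
    active: bool = True
    events: list[str] = field(default_factory=list)


def failing_signals(signals: dict[str, bool]) -> list[str]:
    """Names of every signal that is currently False, in sorted order."""
    return sorted(name for name, ok in signals.items() if not ok)


def on_signal_change(session: Session, signals: dict[str, bool]) -> None:
    """Re-run the policy whenever a signal changes, not only at login."""
    failing = failing_signals(signals)
    if failing and session.active:
        session.active = False  # policy action in this sketch: revoke the session
        session.events.append("revoked: " + ", ".join(failing))


s = Session("alice")
signals = {"firewall_on": True, "disk_encrypted": True, "edr_reports_clean": True}
on_signal_change(s, signals)     # compliant at login, session stays open
signals["firewall_on"] = False   # something changes mid-session
on_signal_change(s, signals)
print(s.active, s.events)        # False ['revoked: firewall_on']
```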

I think this is kind of our current state of the world, right? This is what Beyond Identity gives you out of the box if you purchase us today and plug us into your IdP as a kind of defense layer. I wanna pass it over to Sarah, because we wanna help defend you not just today but against the threats of tomorrow, so you can skate to where the puck is going. And I'm super excited about this. So, Sarah, close us out.

Yeah. So we have an exclusive early access program going on right now that we wanted to tell you guys about.

It's for an AI security product. What we're hearing from our customers, the most AI-forward customers we have, is that their developers are using AI to build software and internal tools, and that AI is accessing external tools. It might use a tool to access a database, or a tool to access something in your SaaS environment, your Salesforce, your Canva, your Oracle, and those connections are completely invisible to normal security software because they are executed by AIs. They come in either through an API key or through OAuth, and many MCP servers live locally on the machine, so they can actually do remote code execution.

So our most AI-forward companies are vastly more productive. Their developers are getting a lot done, but they're opening up holes in their infrastructure that they're very worried about. So they asked us to take the policy engine we have, which is incredible and lets them do real-time policy on access management, and said, hey, I wanna do real-time access policy on my AI tooling. The product we're building gives you control over the tools used in your organization, as well as visibility into MCP, which stands for Model Context Protocol.

That is the protocol AI uses to connect with tools. We provide visibility into all the MCP calls in your environment, and then we enforce policy on them in real time to say these MCP servers are allowed and these MCP servers are not. We also do or don't allow elicitation, where the MCP server can go back and forth directly with the client, so it's not just an input-output relationship.
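A minimal sketch of the "these MCP servers are allowed, these are not" idea plus an audit trail. This is not Beyond Identity's implementation or API; the `McpPolicy` class, the server names, and the tool names are hypothetical. The string `elicitation/create` is the method name the MCP specification uses when a server asks the client for more input; here it is only a label in the log.

```python
from dataclasses import dataclass, field


@dataclass
class McpPolicy:
    allowed_servers: set[str]
    allow_elicitation: bool = False
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def _record(self, server: str, action: str, allowed: bool) -> bool:
        # Visibility: every decision is logged, whether allowed or denied.
        self.audit_log.append((server, action, "allow" if allowed else "deny"))
        return allowed

    def check_tool_call(self, server: str, tool: str) -> bool:
        return self._record(server, tool, server in self.allowed_servers)

    def check_elicitation(self, server: str) -> bool:
        # Elicitation lets a server ask the client for more input mid-task.
        ok = self.allow_elicitation and server in self.allowed_servers
        return self._record(server, "elicitation/create", ok)


policy = McpPolicy(allowed_servers={"github", "postgres-readonly"})
print(policy.check_tool_call("github", "create_issue"))             # True
print(policy.check_tool_call("unknown-local-server", "run_shell"))  # False
print(policy.check_elicitation("github"))                           # False
```

Logging denials as well as approvals is what gives the admin view of every tool call, not just the blocked ones.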

So it's a very exciting product for us. We are restricting access to it right now. So it's a very exclusive program only for our most AI forward customers and prospects. So if you are interested in this, please get ahold of us. We may be able to get you in.

And obviously, we'll be opening it up to more people over time. We're specifically looking for shops that are Claude-heavy.

That's where we really shine as a product right now. We're working on opening up to Cursor, ChatGPT, other IDEs, other clients. But right now, if you are a big Claude shop and you are very AI forward, please get ahold of us and we may be able to get you into this early access program.

For sure. And I will say, if you have questions or you're just wondering how this works, feel free to reach out to Sarah on LinkedIn. I'm sure. You know? I guess you could tweet at... do you still have a Twitter, Sarah?

I do.

Ah. Yeah. I guess you could find her on Twitter as well. This is a really cool tool. I was using it, and I built a local MCP server.

And then on the admin side, you could actually see the MCP servers and all of the tool calls I had made during my Claude session, and I had no idea I'd called so many tools. So that's a really interesting value proposition, I think. And especially if you want to adopt AI and agentic AI without giving up the security side of the house, I would strongly encourage you to reach out.

Alright. I think that is all the content we have today. Thank you, Sarah, for joining us, and thank you all of you for your time.

Until next time. Thank you. Bye.

TL;DR

Full Transcript

Hi, everyone. Welcome to another Beyond Identity webinar. This time, I am super excited about this webinar, not only because I love AI.

I do love AI, but because we get to share almost like a a meta threat research of some of the interesting developments in AI social engineering tactics. But, also, if you stick around, there's a fun, fun surprise at the end where we will actually give you a quick preview of, the next iteration of, Beyond Identity's product and how it applies to Agentic AI. Before I get carried away, my name is Jing. I lead marketing here, and I am joined by the excellent Sarah Cecchetti.

If you wanna quickly introduce yourself, Sarah.

Hi. I lead product strategy for Beyond Identity.

And is also one of the authors on the OIDC white paper. I think you would call that, like, the definitive white paper on agentic AI.

And identity access management. Yeah.

Yeah. We'll try to send a link out, after the show. So we are going to dive right in here.

So we are talking about winning the AI arms race and why your current defenses might be failing, but I don't deal in doom and gloom. So we're actually gonna talk to you about how you can defend yourself in a, deterministic way.

Let's get started. Okay.

So I wanna start with this slide because it turns out there's a lot of conversation about should we deploy AI? How should we use AI? You know, I know at Beyond Identity, we've gone through a few times of, how should we train up our employee workforce. So while we're going through all of this, attackers are actually actively using AI and putting it to use for malicious purposes because a tool is just a tool.

Right? I can take a hammer. I can build a deck. I can't build a deck.

I don't know where to start. But theoretically, one could build a deck or one could chase, you know, their little cousin or whatever. Don't do that either. But a tool is a tool, and how you use that tool determines kind of the outcome and the impact you have in the world.

Right? So while AI is accelerating our workflows, I'm a proud Vibe coder, it is also accelerating malicious workflows. I think the new anthropic report on how they interrupted, a Chinese adversary, using Cloud Code coined the term vibe-hacking. Actually, don't know if Anthropic coined the term vibe-hacking, but it is being done.

And, now there's this two point eight billion dollar industry for malicious AI tools and services. And that's just on the dark web. Right? Two point eight billion is no joke.

I'm pretty sure NVIDIA's, market cap is, what, like three, seven, eight billion? I don't know. But it's pretty big. So there's a there's a whole kind of substrate out there that is just dedicated to the development investment in and the use of these, AI tools and services.

We'll cover three of them today, and they're actually really interesting in terms of how they're being deployed.

Additionally, this number has actually gone up, but the research I had at the time showed a sixty percent surge in AI powered phishing attacks. Phishing and social engineering now just will never look the same. Right? And then in terms of that speed gap I was talking about, you know, we're debating it, attackers are using it, is it's apparently four thousand one hundred and fifty one percent in terms of the attackers that use generative AI versus the defenders who use generative AI to defend themselves.

That is tremendous. Right? So if this is the landscape, what do we do about it? How is this actually being deployed?

Well, you are in for a treat because we'll actually cover, three of the new tools in the AI arsenal. So there's WormGPT, and I gave them some fun names just so they're a little bit differentiated. Once a marketer, always a marketer. But I'm actually gonna hand it to Sarah at this point to kind of walk us through how these tools work and the kinds of outcomes you can drive with them. Let me advance this slide.

The market cap for NVIDIA right now is four trillion dollars.

It is Oh, I was off by, like, a multiple of ten.

So exciting times and much of that is funneling exactly what we're talking about today.

So the first thing that we're seeing in the field is called WormGBT. And, the thing that it allows attackers to do is write perfect English and not just perfect, but, on tone for the person they are trying to imitate. So typically in the past, an attacker would have to, craft their own copy for their phishing emails. Now they can use AI to do that. So you don't need a native English speaker in order to craft a great phishing email.

And likewise, when your employees open up an email, they're not gonna be able to rely on mistakes in punctuation, in grammar, and things like that in order to, get get around this. So it writes flawless phishing emails. And as you can see from the example here, it might say, hey, quick question. That's not grammatically correct English, but that is exactly how people talk in in emails. And so it is hitting the mark right in between, oh, it's so bad that it's easy to tell or it's so perfect that it's easy to tell that it's written by a GPT. It captures the exact tone that your employees use. So it's actually completely undetectable in terms of tone and style of the English copy that's in the phishing email.

Yeah. This one's really fun because, when I was reading over the, threat researchers' sort of write up on this, they called it unsettling and remarkably persuasive. So the first thing I thought about was all of the mandatory phishing training, that we put our users through. Right? Like, what's the point if they sound just like Sarah or just like our CFO. Right?

So Yep.

The next one I wanna talk about is fraud GBT. And this one really does a lot of things. So, when you are an attacker, it requires a lot of work actually on your part. They They sit in offices just like we sit in offices and they have to write computer programs that, deploy malware.

They have to write the computer programs that imitate the login page of your organization so that they can capture the credentials of your employees, right? All of that is work and it slows them down. It means that they can't attack as many employees. They can't attack as many organizations as they could otherwise.

What Fraud GBT does is it enables them to automate all of that. So they can just like we can use AI to automate our software development for good, they can use AI to automate their software development for evil.

And so they can very easily, put up a page and just like, what we talked about before with WormGPT with the perfect copy, that login page will be pixel perfect to your existing login page. So there is no way for an employee to tell the difference between just looking output of the DOM of the browser, oh, this is different from my normal login page because it's not. Ai enabled them to make it perfectly similar to the page that they're used to looking at, where they're used to entering their credentials.

And so it automates these workflows that hackers have to go through and not just deploying malware, not just creating login pages, but also actually writing software to find vulnerabilities themselves. So if they do phish your organization and they do get access to your code base, FraudGBT can analyze your code base and find vulnerabilities that will enable them to exploit your software and take your company down or exfiltrate data. So it's a very powerful tool that attackers are now using that are making attacks much, more simple for them to execute.

Yeah. And it's only two hundred dollars a month. Right? We could, how many coffees is that? Right?

I'm in New York.

Like ten dollars. It's like twenty coffees. And what I find interesting is like, we have a screenshot from, FraudGPT. It's like, instead of using, so for instance, some of the previous iterations of malicious tooling, you have to actually open your terminal and, like, interact with the CLI.

Nowadays, there's a a UI. There's, like, jailbroken, LLMs in the background generating this kind of stuff, and it's almost like a nice user experience. So it it just occurs to me that they really treat this almost like a product. Right?

They iterate. They take feedback from Telegram. No wonder there's almost like a three billion dollar industry kind of underlying this whole exchange.

Yep. So the next one we're gonna talk about, does that exact thing. It's called SpamGBT.

And, previously, when you sent spam, you generally had to do it yourself. There were some, or some pieces of software that helped you, mail and helped you kind of deploy, but there was nothing, that was a slick UI that would completely manage end to end an entire email campaign the way that, b to b SaaS does for a normal organization. And now that exists. So AI has enabled something called SpamGBT.

It allows you to AB test. It allows you to manage all of your DKIM registrations and all of your SMTP and all of your certificates and make sure that your emails are, absolutely perfect so that they get through all of the spam filters so that they have all the right settings, all the right encryption so that they look and they behave just like actual emails. And then after they get into the inbox, they're able to AB test different headlines and say, hey, which of these works best? And then send that to everyone in your organization.

So this is a a very thorough piece of software that, can do entire campaigns, at the push of a button, which makes it much, much easier for attackers to compromise your organization, through spam and phishing emails.

And so the the point that we're trying to make by showing you these tools is that, phishing training is really ineffective now. So it used to be that when you had, humans without the power of AI and they were trying to get into your organization trying to attack you, you could rely on either, spelling and grammar that was not perfect enough or too perfect. You could rely on generic greetings. They didn't know exactly who they were talking to. They didn't have the technology to figure that out. So you would see things like dear customer and to whom it may concern, things like that that were really easy to pick out. They would have urgency and threats and say, oh, if you don't do this within two weeks, you know, we're going to expose all the pictures on your computer.

And, it would be very simple to figure out like, oh, these people are trying to trying to threaten me and trying to make me do something quickly. I know that this is a this is a a spam or a fraud email. And they would have, suspicious links with with mismatched URLs, with no HTTPS, things like that. So it was fairly easy for human attackers to figure out, and that's why we did so much phishing training in organizations, up until today. And today, that phishing training is really ineffective. So, the the AI has allowed attackers to have a tone that is picture perfect to what you would see in an average corporate email. They have personalized greetings where they can pick out the name of the person they are trying to attack and put that directly in.

They use subtle tactics rather than having, that that really obvious urgent threat, email. You'll see things that are much more subtle like, hey, how are you doing? Can you send me information on this piece of software, on this financial report? Things that are things that are friendly and inviting, not threatening. And so it's a whole different tone and a whole different way of attacking humans.

And then they'll still have completely valid links. Right? Because AI enables them to update all of their certificates, to have those things that look just like the real thing. And so, phishing training, we're seeing in the field has become completely ineffective in this new world.

Yeah. So in sort of in other other words, in more, I you know, I'm always trying to, like, avoid FUD, but this really is not a fair fight. Right? This is pitting human beings against, LMs, against AIs.

And this is really not a fight you can afford to lose because I I don't have to sort of belabor the point around, you know, the cost of incidents and the cost of a breach more than regulatory sort of slap on the wrist. Right? There's also the real sort of loss and erosion in brand and trust with your customers, which is really hard to get back. And the thing I just wanna point out about AI and LLMs is that the I think the fatal flaw is AI powered attacks are designed explicitly to learn from and mimic good patterns, human patterns.

They're designed to learn, iterate, and be trained on what human beings look like, right, or what normal behavior looks like. So a probabilistic system that kind of looks at unusual logins, or flags suspicious activity, when when your attack looks normal in every aspect, it actually just fails all of the the detections and probabilistic defense. So it it ultimately comes down to that being a losing proposition in the age of AI. So, like I said, I don't deal in pessimism for too long.

But I'll only indulge it for a little bit, because it turns out there are ways to stand up against malicious actors. The core principle is to move away from probabilities and detection and response, and to implement a layer of preventative, deterministic defense. A deterministic system does not make guesses.

It verifies, and it operates on binary yes/no outcomes, cryptography, and hardware-backed certainty. So there are four key elements to a deterministic identity defense. Right? The first, and I would say probably the most fundamental layer, is a device-bound identity secured in hardware, which defends against phishing and social engineering no matter how clever the lures look.

Right? So when a user logs in, the service requests a cryptographic signature that can only be produced by the private key stored in the device's secure enclave. It's the core magic, or, I don't want to say magic. It's the core proposition of FIDO.

That said, FIDO now also has synced passkeys, which aren't bound to a single device, and that's a whole different ballgame. We do have a webinar on FIDO and synced passkeys if you're interested. But ultimately, you need to start with a strong identity. And it's not just the user that logs in.

It's also their endpoints and devices. So you want to layer on real-time device posture evaluation: is the device patched? Is it encrypted? And so on.

You can even pull in third-party risk signals as part of that evaluation so you have a complete picture. And last but not least, at Beyond Identity we think it's very important to validate user and device trust continuously. Once you have that strong identity and these immutable signals of device trust, what happens if something changes mid-session?

So we implement a policy and access control system that continuously enforces your security policy. It's not just a point-in-time check. If something falls out of compliance mid-session, you're not dead in the water. Right?

There's something you can do about it, which is to take a policy action.
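Device posture checks and continuous re-evaluation can be sketched as a deterministic rule set that is re-run on every request rather than only at login. The `Posture` fields, the third-party risk values, and revoking the session as the policy action are assumptions for illustration; a real deployment might instead step up authentication or quarantine the device, and this is not Beyond Identity's policy engine.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Posture:
    os_patched: bool
    disk_encrypted: bool
    third_party_risk: str  # hypothetical external signal, e.g. "low", "medium", "high"


def violations(p: Posture) -> list[str]:
    """Deterministic policy: list every rule the device breaks (empty list = compliant)."""
    broken = []
    if not p.os_patched:
        broken.append("OS not patched")
    if not p.disk_encrypted:
        broken.append("disk not encrypted")
    if p.third_party_risk == "high":
        broken.append("third-party risk signal is high")
    return broken


class Session:
    def __init__(self, user: str) -> None:
        self.user = user
        self.active = True

    def recheck(self, p: Posture) -> str:
        """Run on every request or poll, not just at login."""
        if not self.active:
            return "denied: session revoked"
        broken = violations(p)
        if broken:
            self.active = False  # the policy action here: revoke mid-session
            return "revoked: " + "; ".join(broken)
        return "allowed"


session = Session("alice")
steps = [
    session.recheck(Posture(True, True, "low")),   # compliant at login
    session.recheck(Posture(True, False, "low")),  # disk encryption turned off
    session.recheck(Posture(True, True, "low")),   # fixed, but session already revoked
]
print(steps)
# ['allowed', 'revoked: disk not encrypted', 'denied: session revoked']
```

Because the rules are explicit, the same device state always produces the same decision, and the reason for a revocation is recorded rather than inferred.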

So that's our current state of the world. Right? This is what Beyond Identity gives you out of the box if you purchase us today and plug us into your IdP as a defense layer. I want to pass it over to Sarah, because we want to help defend you not just today but against the threats of tomorrow, so you can skate to where the puck is going. I'm super excited about this. So, Sarah, close us out.

Yeah. So we have an exclusive early access program going on right now that we wanted to tell you guys about.

It's for an AI security product. What we're hearing from our most AI-forward customers is that their developers are using AI to build software and internal tools, and that AI is accessing external tools. It might use a tool to access a database, or to access something in your SaaS environment: your Salesforce, your Canva, your Oracle. Those connections are completely invisible to normal security software because they are executed by AIs. They come in either through an API key or through OAuth, and many MCP servers run locally on the developer's machine, so they can actually execute arbitrary code there.

So our most AI-forward companies are vastly more productive. Their developers are getting a lot done, but they're opening up holes in their infrastructure that they're very worried about. So they asked us to take the policy engine we already have, which does real-time access management policy, and apply that same real-time policy to their AI tooling. The product we're building gives you control over the tools used in your organization, as well as visibility into MCP, which stands for Model Context Protocol.

That is the protocol AI applications use to connect with tools. We provide visibility into all the MCP calls in your environment, and then we enforce policy on them in real time: these MCP servers are allowed, these are not. We can also allow or block elicitation, where the MCP server asks the client for more input mid-task, so it becomes a back-and-forth conversation rather than a simple input-output relationship.
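MCP messages are JSON-RPC 2.0, with methods such as `tools/call` (a client invoking a tool on a server) and `elicitation/create` (a server asking the user, via the client, for more input). Assuming those message shapes, a policy gate that allows or denies servers, blocks elicitation, and records every call might look like the sketch below. The `McpPolicy` class and the server names are hypothetical; this is not how Beyond Identity's product is implemented.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field


@dataclass
class McpPolicy:
    allowed_servers: set[str]
    allow_elicitation: bool = False
    audit_log: list[dict] = field(default_factory=list)

    def check(self, server: str, raw: str) -> bool:
        """Allow or deny one JSON-RPC message exchanged with an MCP server, and log it."""
        msg = json.loads(raw)
        method = msg.get("method", "")
        if server not in self.allowed_servers:
            allowed = False
        elif method == "elicitation/create":  # server asking the user for more input
            allowed = self.allow_elicitation
        else:
            allowed = True
        entry = {"server": server, "method": method, "allowed": allowed}
        if method == "tools/call":
            entry["tool"] = msg.get("params", {}).get("name")
        self.audit_log.append(entry)  # visibility: every message is recorded
        return allowed


policy = McpPolicy(allowed_servers={"github", "postgres-readonly"})
call = json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                   "params": {"name": "query", "arguments": {"sql": "SELECT 1"}}})
ask = json.dumps({"jsonrpc": "2.0", "id": 2, "method": "elicitation/create",
                  "params": {"message": "Please paste your API token"}})

decisions = [
    policy.check("postgres-readonly", call),     # allowed server, ordinary tool call
    policy.check("unknown-local-server", call),  # server not on the allowlist
    policy.check("github", ask),                 # allowed server, but elicitation is off
]
print(decisions)                # [True, False, False]
print(len(policy.audit_log))    # 3
```

Denied messages are logged too, which is what gives an admin the full picture of tool calls in a session.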

It's a very exciting product for us. We are restricting access to it right now, so it's an exclusive program for our most AI-forward customers and prospects. If you are interested in this, please get ahold of us. We may be able to get you in.

And obviously, we'll be opening it up to more people over time. We're specifically looking for shops that are Claude-heavy.

That's where we really shine as a product right now. We're working on opening up to Cursor, ChatGPT, other IDEs, and other clients. But right now, if you are a big Claude shop and you are very AI-forward, please get ahold of us and we may be able to get you into this early access program.

For sure. And I will say, if you have questions or you're just wondering how this works, feel free to reach out to Sarah on LinkedIn. Or I guess you could tweet at... do you still have a Twitter, Sarah?

I do.

Ah, yeah. So you could find her on Twitter as well. This is a really cool tool. I was using it, and I built a local MCP server.

And then on the admin side, you could actually see the MCP servers and all of the tool calls I had made during my Claude session. I had no idea I called so many tools. So that's a really interesting value proposition. Especially if you want to adopt AI and agentic AI without giving up on the security side of the house, I would strongly encourage you to reach out.

Alright. I think that is all the content we have today. Thank you, Sarah, for joining us, and thank you all for your time.

Until next time. Thank you. Bye.
