AI Chat Podcast: How Hackers Use AI & How to Stop It

TL;DR

Full Transcript

AI has enabled attackers to get to know a lot of things about a specific person and then create entire applications that are pixel-perfect malware. One of the things that AI is also very good at is code scanning. They have seen Claude Code used for state-sponsored cyber espionage, where an attacker used it to find exploits in an active code base, exploit them, and exfiltrate data. It's just like sitting in a marketing manager's office, only it's an evil marketing manager. You should connect your Claude Code to this magical MCP server that does all these wonderful things.

And it might even do all those wonderful things, but it might also exfiltrate all your data. If AI gets a bad rap because it gets breached all the time, then people are gonna stop using it. The AI is too good now. Fakes and frauds are completely indistinguishable from the real thing.

You need biometrics. You need cryptography. Relying on human judgment is no longer sufficient.

Welcome to the AI Chat Podcast. I'm your host, Jaden Schafer. And today on the podcast, I'm super excited to have Sarah Cecchetti joining us. She leads AI security and Zero Trust efforts at Beyond Identity.

So basically, she helps companies keep their systems and data safe as they're adopting tools like ChatGPT and other AI systems. Before this, she worked on core identity programs at AWS and Auth0, which gives her a really practical, real-world view of how organizations can securely use modern AI. So I'm really excited to have Sarah on the show. Welcome to the show today, Sarah.

Thanks for having me on. It's really great to be here.

Before we get into the one hundred and one questions I have for you, which everyone has been asking about, I'm just curious: what got you into the space? What got you interested in security? Give us a little bit of your background.

Yeah. So I started my career in software development and then moved into a specialization within cybersecurity called identity and access management. And so that's the idea that when things are happening in your environment, you want to know who is doing those things, what computer they're using, what applications are running on those computers so that you can have a zero trust strategy within your organization. And so working on that for humans for many years was great.

But increasingly we've had non-human identities coming online and doing work. Now we have AI in the mix, both in CI/CD pipelines and in day-to-day work. We have really interesting identity and access management challenges that we haven't had before. So it is a great time to be an identity and access management professional.

Yes. So much is happening. It's definitely a very needed field, and we're seeing this interesting shift with all this agentic stuff going on. For people listening who are unfamiliar with the term zero trust, I'm wondering if you could give a brief explanation of it, to level set, and talk about what it means when we're talking about AI tools and these internal pilots that people are running.

Yeah. And so the origin of the term Zero Trust is from a book called Zero Trust Networks by Evan Gilman. I have it back here somewhere. It's the O'Reilly book with the lobster on the cover.

Perfect.

And basically, the idea behind Zero Trust is that the way we used to do cybersecurity was by securing the network. So you had the good people inside of the network perimeter, and the bad people stayed outside, and that was how you kept your security in place. Access management really wasn't a thing. If you were on the network, you could get to everything else that was on the network.

And it turns out that people want to work from home, and people want to work from different places, not necessarily the office. And so we punched some holes in the network, and we made some VPNs, and we let some other people in, and people have contractors who need to access things within their environment. More and more, we compromised the integrity of the network perimeter until it really became a non-signal as to who is a good guy and who is a bad guy. And so it wasn't something we could really use from a cybersecurity perspective.

And so the thinking behind the Zero Trust movement was really that rather than trusting the network, we identify the person, either via a credential, like a password or a biometric, or via some sort of cryptographic protocol. Then we identify the device that person is working on and make sure that it's the device we think it is, that it's not someone logging in as them from a malicious computer. And then we analyze the software that's running on the device and make sure that there's no malware running, that the programs we think are running are in fact valid programs with valid checksums, so we know that nothing running on that computer is going to insert something malicious between the user and the computer.
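To make the three checks concrete, here is a minimal Python sketch of the decision they add up to, with most of the detail on the last check: comparing a running program's SHA-256 checksum against an allowlist of known-good hashes. The allowlist, the program names, and passing raw bytes instead of reading binaries from disk are all hypothetical simplifications; this is not how Beyond Identity or any particular product implements it. Note that the decision never looks at network location, which is the core Zero Trust idea.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a lowercase hex string."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical allowlist of known-good programs: name -> expected SHA-256 digest.
# A real deployment would load these from a signed, trusted manifest.
ALLOWLIST = {
    "editor": sha256_hex(b"editor binary v1"),
    "vpn-client": sha256_hex(b"vpn-client binary v3"),
}


def is_trusted_program(name: str, binary: bytes) -> bool:
    """Trust a program only if it is on the allowlist and its checksum matches."""
    expected = ALLOWLIST.get(name)
    if expected is None:
        return False  # unknown programs are denied by default
    return sha256_hex(binary) == expected


def allow_request(user_verified: bool, device_verified: bool,
                  running: dict[str, bytes]) -> bool:
    """Every check must pass: the person, the device, and all running software.
    Network location is never consulted."""
    return (user_verified and device_verified
            and all(is_trusted_program(n, b) for n, b in running.items()))
```

A single unrecognized or tampered program is enough to deny the request, even when the person and the device both check out.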

Okay. I love it. Incredible explanation. The other thing I would love to have you explain is this: security can mean a lot of different things to a lot of different people. What is it specifically that Beyond Identity works on? What got you excited about working on Beyond Identity, and what are some of the problems that you guys are solving?

So often in my work in security, there have been trade-offs between security and usability, right? Think of the TSA line at the airport, right? The more secure it is, the longer it takes for everyone to get through, and the more of a hassle it is to take off your shoes and x-ray your luggage and all that kind of stuff.

The same is true of corporate security, right? We can make it very difficult for attackers to get into the system, but then it's also very difficult for our own people to get into the system. And so the thing that really attracted me to Beyond Identity was that it's a solution that is both more secure and more usable. It does that by getting rid of passwords. Really, the only people who liked passwords were attackers, because passwords are so simple to compromise and so difficult for humans to actually remember correctly.

And so what we do instead is use a combination of biometrics and cryptographic proof to bind the human identity to an immovable passkey on the corporate laptop. We can act as an identity provider or as a multi-factor authentication layer in your corporate environment, and that way your users can get rid of passwords entirely and have increased security.
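The core idea behind replacing a password with a device-bound key is challenge-response: the server sends a fresh random challenge, and the device proves it holds the key without ever sending the key itself. Real passkeys (WebAuthn/FIDO2) use public-key signatures from a private key that never leaves the device, with the biometric unlocking that key locally. Python's standard library has no public-key signing, so this minimal sketch substitutes HMAC with a per-device secret purely to show the shape of the exchange. The class and function names are hypothetical.

```python
import hashlib
import hmac
import secrets


class Verifier:
    """Hypothetical server side of a challenge-response login."""

    def __init__(self) -> None:
        self.device_keys: dict[str, bytes] = {}  # device_id -> enrolled key
        self.pending: dict[str, bytes] = {}      # device_id -> outstanding challenge

    def enroll(self, device_id: str) -> bytes:
        # Simplification: with real passkeys the server would store only a PUBLIC key.
        key = secrets.token_bytes(32)
        self.device_keys[device_id] = key
        return key

    def challenge(self, device_id: str) -> bytes:
        nonce = secrets.token_bytes(32)
        self.pending[device_id] = nonce
        return nonce

    def verify(self, device_id: str, response: bytes) -> bool:
        nonce = self.pending.pop(device_id, None)  # each challenge is single-use
        key = self.device_keys.get(device_id)
        if nonce is None or key is None:
            return False
        expected = hmac.new(key, nonce, hashlib.sha256).digest()
        return hmac.compare_digest(expected, response)


def device_respond(key: bytes, nonce: bytes) -> bytes:
    """Device side: prove possession of the key without revealing it."""
    return hmac.new(key, nonce, hashlib.sha256).digest()
```

Because each challenge is consumed on first use, a captured response cannot be replayed, and there is no shared secret for a user to type into a phishing page.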

Okay. I love it. I think everyone is honestly rejoicing at the concept of not having any passwords to remember. I have a password tool that remembers all my passwords.

I don't even remember them. I'm not sure that's very secure either, because you know, LastPass gets hacked and all this kind of stuff. So I guess my question is though, in today's environment, obviously AI assistants and agents, they work very differently from human users. What are some of the biggest ways that they change how companies need to think about security today?

Yeah. So, Beyond Identity had a lot of customers before the AI craze, right? Because security and passwordless are both great things. But since then, we've seen a huge uptick in phishing and particularly spear phishing.

AI has enabled attackers to get to know a lot of things about a specific person and then create entire applications that are pixel perfect malware, right? They look exactly like the site that you think you're at. The email looks exactly like a valid email. It's coming from a valid email address.

All of these things were very technically difficult to do in 2021, but now that we have AI tools that are very good at coding and very fast, we're seeing a huge uptick in successful phishing campaigns.

Yep. And I can say just this week, my wife sent me a text message. She's like, what's this? And it was some automated PayPal subscription thing that had been sent to us.

And it was of course a phishing attack, because the reply-to email address was some random address, but it looked like it was coming from the actual PayPal, and it looked super legitimate. I think you're right: identity-based attacks are becoming a lot more common. How do those attacks look when the target is an AI system instead of a person?

Like what are some of the differences?

Well, it's fascinating. One of the things that AI is also very good at is code scanning. There was actually a blog post just today by Anthropic disclosing that they had seen Claude Code used for state-sponsored cyber espionage, where an attacker used it to find exploits in an active code base, exploit them, and exfiltrate data. And so we know that using AI to write more code is going to get us more vulnerabilities, and AI is also going to make it easier to scan for and find those vulnerabilities.

And so we're trying to find deterministic ways to protect against that. Specifically, one of the things that we're looking at is the Model Context Protocol, or MCP. I don't know if you and your listeners have talked about this at all. It's a great way to get AI to use tools, but the problem is that it breaks normal access management boundaries. Normally, when you call an API, you have an input and it gives you an output. With the Model Context Protocol, there is such a thing as server-sent events, and those server-sent events can be elicitation requests.

So if you want to book an airline ticket, it can ask, okay, does your user like a window seat or an aisle seat? And your LLM will go into its memory and say, ah, Jaden likes aisle seats, and so you can go and book that. But it can ask anything it wants to ask. It can ask what medical things Jaden has been researching, or what legal things Jaden has been researching, or what kinds of exploits Jaden has been studying in his company's code base.

Right? It can ask all sorts of things. It is not a simple input and output. It is a two-way conversation.

That is a very different access management field than we've been in before. The fundamentals of the security model behind OAuth and API keys may be insufficient, and we're doing some research into how to shore that up.
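One way to keep a two-way conversation from becoming an open data tap is for the client to decide, by its own policy, exactly which pieces of memory a given server may receive. This minimal Python sketch assumes a simplified, hypothetical request shape; it is loosely modeled on the accept/decline style of reply in MCP elicitation but is not the protocol's actual message format. The server names, field names, and the `ALLOWED_KEYS` policy are all illustrative.

```python
from dataclasses import dataclass


@dataclass
class ElicitationRequest:
    """Simplified, hypothetical stand-in for a question an MCP server sends the client."""
    server: str         # which MCP server is asking
    requested_key: str  # which piece of user data the server wants
    message: str        # free-text question shown to the model or user


# Hypothetical client-side policy: the only memory fields each server may receive.
ALLOWED_KEYS = {
    "airline-booking": {"seat_preference", "meal_preference"},
}


def handle_elicitation(req: ElicitationRequest, memory: dict[str, str]) -> dict:
    """Answer only allowlisted fields for this server and decline everything else.

    The reply never contains more than the single allowlisted field, no matter
    how the server words its free-text message.
    """
    allowed = ALLOWED_KEYS.get(req.server, set())
    if req.requested_key not in allowed or req.requested_key not in memory:
        return {"action": "decline"}
    return {"action": "accept", "content": {req.requested_key: memory[req.requested_key]}}
```

The design choice is that the policy keys on data fields the client controls, not on the server's wording, so a question about seat preference can only ever return the seat preference.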

Yeah. Do you guys have any concepts of what direction we might need to take in that? Because I hear people talk about this and it always scares me. I'm like, oh crap, we're just opening another can of worms. I guess, in your opinion, what solutions do you have?

Yeah. So our bread and butter as a company is cryptographically verifying devices. Right? And so the idea there is that if you are connecting a corporate laptop to a corporate MCP server, right?

So you have a software developer who wants to work with Linear tickets, and he wants to use his AI to do that so that he can automatically update them, analyze them, get a to-do list out of them, and do all the things he needs to get his work done. We can verify that the MCP request is coming from the laptop we think it's coming from. So if an attacker were to say, I want to get in the middle of that MCP request, and I want to either insert malware, or listen in, or do some prompt injection, it's physically impossible. Right? We can tell immediately that the source of the information is not the source that it's claiming to be.

And so that's where we're focusing a lot of our research.

Fascinating. And I mean, I think it's obviously like a hard question because there is no definitive answer. It's like security is always a cat and mouse game where new things will come out, and then we have to come up with new security protocols and new ways to address those. I love the device concept.

I wonder though too, like when we talk about AI agents and stuff, like a lot of these tools we're now using, for example, like I have a whole computer on my desk where I just have it running ChatGPT's Atlas browser, and I just tell it to go do things for me all day, and it's clicking around, and I kinda keep an eye on it, but it's like doing stuff. Saves me a ton of time. It's amazing. But a lot of people have talked about the concern of you could go to a website, and there could be hidden text on the website that the agent is reading and it directs it to do something that you don't want it to do.

In that case, it could still be coming from your laptop, which is inside of the quote unquote secure network. So there are these prompt injections, and they can be pretty crazy. When you look at net security vulnerabilities from AI versus net positive outcomes, because you can use it for both.

Where do you see the scales? Do you think we come to a point where we can get this pretty secure? Are you like more concerned or excited at this point?

No, I'm super excited. I think it's going to make us ten times more productive as a species.

Totally. Yeah.

So I'm very bullish on AI, but I think you're absolutely right that in addition to verifying devices, there need to be security guardrails. There needs to be an access management system that goes around that AI to make sure that it can't get to things it's not supposed to get to, and that it checks in with you before it does something crazy like deleting databases. So we need the same sort of fine-grained access management controls that we see in the API world. We need that in the AI world as well.

And that's just a matter of time. We just need to build that. So I think it's completely possible. It's going to happen that we're all gonna get more productive and happier and able to build more cool things, but we need to do so in a safe way, right?

Because if AI gets a bad rap because it gets breached all the time, then people are gonna get down on it and stop using it, and we don't want that to happen.
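The two guardrails described here, deny anything not explicitly granted and require a human check-in before destructive actions, can be sketched as a small policy function. This is a minimal illustration under assumed names: the agent names, the `GRANTS` table, and the set of actions treated as destructive are hypothetical, not any product's policy language.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCall:
    agent: str      # which AI agent is acting
    action: str     # e.g. "read", "write", "delete"
    resource: str   # e.g. "db:orders"


# Hypothetical fine-grained grants: which (action, resource) pairs each agent may perform.
GRANTS = {
    "support-bot": {("read", "db:orders")},
    "ops-agent": {("read", "db:orders"), ("delete", "db:orders")},
}

# Hypothetical set of actions that always require a human to approve first.
DESTRUCTIVE = {"delete", "drop", "transfer"}


def authorize(call: ToolCall, human_approved: bool = False) -> tuple[bool, str]:
    """Deny by default; even granted destructive actions need explicit human approval."""
    if (call.action, call.resource) not in GRANTS.get(call.agent, set()):
        return False, "not granted"
    if call.action in DESTRUCTIVE and not human_approved:
        return False, "needs human approval"
    return True, "allowed"
```

Returning a reason alongside the decision also gives an audit log something meaningful to record for every allow or deny.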

A hundred percent. Yes. I love my second monitor that runs 24/7. It's amazing. If a company wants to roll out an internal AI assistant, right?

There's a bunch of them. I mean, I'm talking about Atlas, but there's other ones. Some of them are connected to your email, your documents, your code. What are some of the top safety steps you'd recommend they put in place before rolling something out like that?

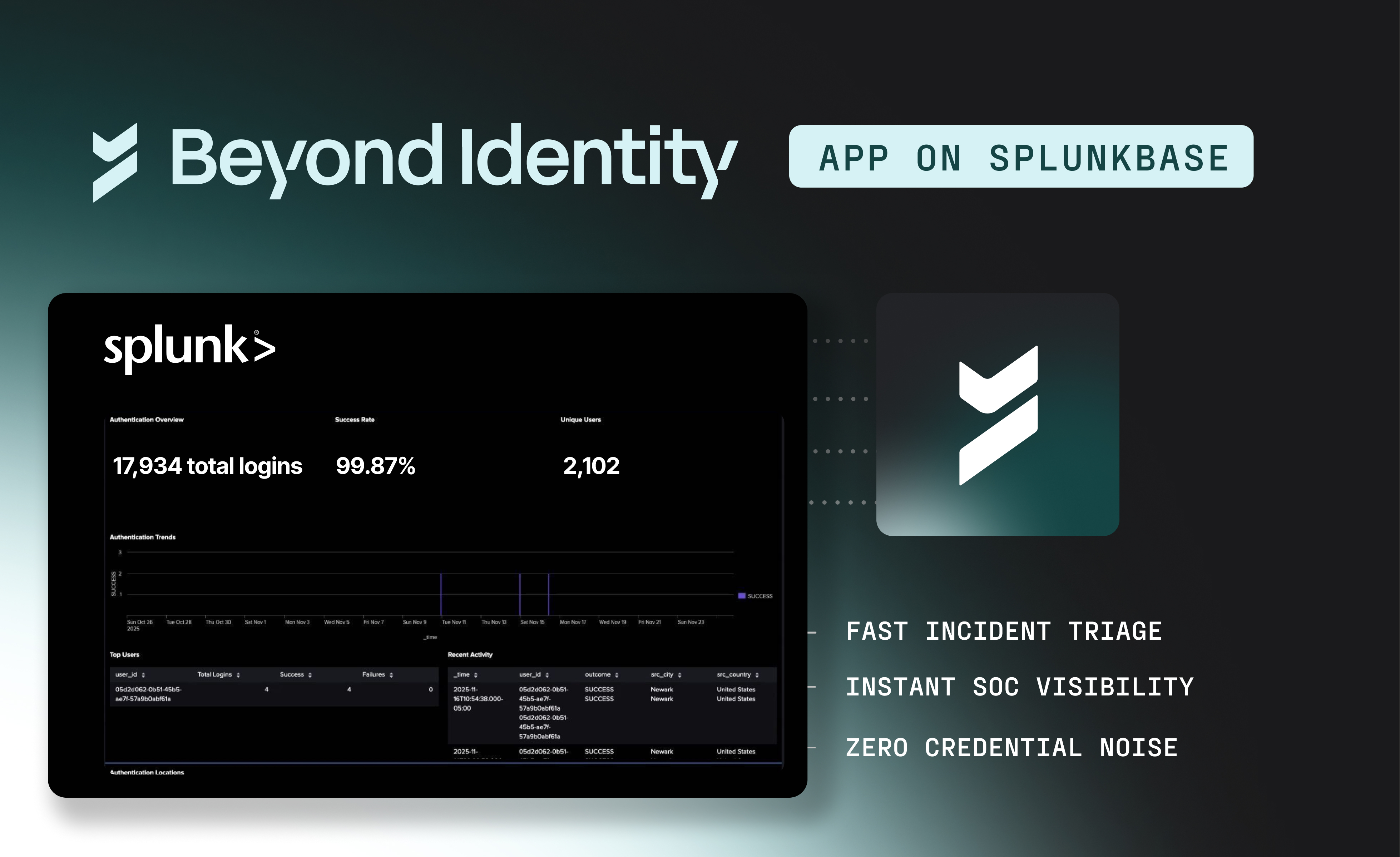

Yeah. So it's important to have an audit trail if you have something like that, particularly if you are a regulated company, like a healthcare or finance company, where regulators are gonna come back and say, hey, we need a record of everything that happened here, and we need to know what was accessed in your system and by whom. When you have a chatbot sitting in the middle, sometimes those audit logs will just say, oh, the chatbot went and got whatever information it was, and you won't be able to trace it all the way back to a human. And so one of the most important things you can do as you're deploying agents in your environment is to make sure that you have an auditable trail of which human, coming from which device, was attempting to do which action in your environment, whether it was allowed or denied, and why.

Having that layer in the middle will keep all the same productivity that you have with all of your agents, but it will just give you that layer of auditability that you can use later for forensics if something bad happened, and for your own peace of mind.
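The audit trail Sarah describes boils down to one record per action that captures the human, the device, the agent, the action, the decision, and the reason. This minimal Python sketch writes each record as a JSON line; the field names and the in-memory list standing in for append-only storage are illustrative assumptions, not a particular product's log format.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditEvent:
    """One audit record: which human, on which device, via which agent, did what."""
    human: str
    device: str
    agent: str
    action: str
    resource: str
    decision: str   # "allow" or "deny"
    reason: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())


def append_event(log: list[str], event: AuditEvent) -> None:
    """Append the event as one JSON line (a list stands in for append-only storage)."""
    log.append(json.dumps(asdict(event), sort_keys=True))


def events_for_human(log: list[str], human: str) -> list[dict]:
    """Forensics helper: every recorded action traced back to a given person."""
    records = [json.loads(line) for line in log]
    return [r for r in records if r["human"] == human]
```

Because the chatbot is recorded as the `agent` rather than as the actor, a regulator's question about who accessed a resource can still be answered with a human and a device.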

And I can even imagine using AI to help you conduct the forensics: it goes through the auditability layer, reads everything, and flags anything suspicious, like prompt injections. So I think AI is part of the solution here, too. I love that. I think that's definitely a fantastic solution. What are some of the attack paths, or maybe mistakes, that people don't think about? You know, we talk about prompt injections or stolen tokens, but what are some of the things that can cause real problems for AI systems?

Yeah. We've definitely seen stolen tokens. We've seen prompt injection. We've seen malicious agents. We've seen products on the black market that are as good as corporate email products for phishing and spam, that allow you to do A/B testing and multi-level campaigns. It's just like sitting in a marketing manager's office, only it's an evil marketing manager.

Oh no.

But some of the newer ones that we haven't seen in the wild yet are malicious MCP servers and MCP phishing. So telling someone, hey, I'm the Linear server, go install me on your computer. And then from there, attackers can do remote code execution, and they can go access your LLM's memory. And so we're very concerned that users aren't gonna be able to tell the difference. Users today already have trouble discriminating: when it says log in with Google, they think, okay, well, Google must be safe. The same thing is gonna happen here, where it's gonna say, oh, you should connect your Claude Code to this magical MCP server that does all these wonderful things. And it might even do all those wonderful things, but it might also exfiltrate all your data out of your environment.

Yep. Yep. That is true. And I mean, it's such a temptation because, for example, something like Claude Code is amazing.

Like, I have a startup and we run it all day long, and it's the greatest thing since sliced bread for us, basically. We 10x our code output, but there are definitely risks and trade-offs, and you have to be able to audit it and look at what it's doing as well. So I think that's really important. I would love to get your perspective from your career.

You've worked at AWS. You've worked at Auth0. By the way, is it called Auth0 or OAuth?

So those are two different things. I worked on both. There's a company called Auth0 that implements a standard called OAuth.

Okay. Thank you. Auth0 and AWS, and now you're at Beyond Identity. What's something about identity or access that you believed early in your career that AI has changed your opinion or perspective on?

Yeah. So OAuth in particular was a very clever solution that was used within identity and access management, in concert with OpenID Connect, to create this flow you're very familiar with, where you want to access an application but you don't want to create a new username and password. So you log in with your corporate credentials, or you log in with Google, and you do that SSO (single sign-on) dance. Right?

And that's a very common pattern. We're trying to use that pattern again with MCP, and it is not working so well, so we may have to come up with something new. The way MCP works today is that when an application wants to connect with a client, like an IDE, Cursor, or Claude Code, it uses something that OAuth calls dynamic client registration. That means that even though it has never seen this client before, it just issues that client, hey, here's your name, and here's your static password.

And anytime you come and talk to me, you give me that static password, and then I'll know that it's you. And that is a pattern we really try to avoid in identity and access management, right? Because we don't want static secrets lying around that can be stolen and replayed. So dynamic client registration in particular was good for the problem it was invented to solve, but applying it to AI could actually be quite dangerous.
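For contrast with a static secret: under OAuth 2.0 Dynamic Client Registration (RFC 7591), a registration response may include a `client_secret` whose `client_secret_expires_at` is 0, meaning it never expires. The minimal Python sketch below shows the opposite approach, a hypothetical issuer of short-lived tokens bound to one client, so a stolen token is only replayable for a narrow window. The class, the 300-second default lifetime, and the in-memory store are illustrative assumptions, not part of the MCP or OAuth specifications.

```python
import secrets
import time
from typing import Optional


class TokenIssuer:
    """Hypothetical issuer of short-lived, client-bound bearer tokens."""

    def __init__(self, ttl_seconds: int = 300) -> None:
        self.ttl = ttl_seconds
        self._tokens: dict[str, tuple[str, float]] = {}  # token -> (client_id, expiry)

    def issue(self, client_id: str, now: Optional[float] = None) -> str:
        now = time.time() if now is None else now
        token = secrets.token_urlsafe(32)
        self._tokens[token] = (client_id, now + self.ttl)
        return token

    def validate(self, token: str, client_id: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        entry = self._tokens.get(token)
        if entry is None:
            return False              # unknown or forged token
        owner, expiry = entry
        if now >= expiry:
            del self._tokens[token]   # expired tokens are purged and rejected
            return False
        return owner == client_id     # a token only works for the client it was issued to
```

The optional `now` parameter exists only so expiry can be tested deterministically; in use, callers would omit it.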

So much is changing with AI. I think you could basically ask anyone at any organization, at any role, like, what has changed today, and they will have a list. I think right now, like companies worry a lot about AI leaking your data. I think they worry probably less about what AI can do once it's actually connected. What are some things that leaders should think differently about when it comes to giving access to an AI agent versus giving access to say like an employee?

So an AI agent treats data differently, and it may not have the same common sense or guardrails that an employee would. You can tell an AI, hey, I want you to go get this thing, and it may not know that it's not supposed to break into the system to get that thing. It's going to try every way it can think of.

It's going to look up online, oh, I got this error message. And it's going to see, oh, the way to get around that error message is to install malware. Right? And so it's very diligently going to go install that thing and try to get there.

And so we have to be really careful with agents, which don't necessarily have as much common sense or good intent as humans. As I said, deterministic programs have this input-output pattern that we have built layers of security architecture around, and AI kind of breaks through all of those, because it transfers information across those boundaries. So you just have to be very careful, as you're applying agents, that you keep the security guardrails you have in place today. Put those same security guardrails on your agents.

Yeah. It's not, yeah, you can't completely just be like, oh, it's just like a computer program. It's not gonna do anything like, yes. They're very capable of doing all sorts of things.

So I love that. Yeah. Keep the same guardrails in place. What's like a really simple or maybe practical way for a company to decide whether an AI use case is a safe one, if it's compliant and if it's actually ready to use?

I think we hear about hackathons and every company is trying to come up with ways to use AI to do things. What's a good framework for deciding what is good and safe and what is not?

That's a great question that we have not solved yet. I know. There are several organizations working on that. There is a great GitHub repo you can check out called SafeMCP.

Okay. They're working on categorizing all of the different vulnerabilities within MCP using the MITRE ATT&CK framework. And I think over time, out of that will probably come something like the NIST levels of assurance, where we will see, okay, if you have implemented MCP in this way, you are at level of assurance one, but if you do these ten things and you protect against these attacks, then you're at level of assurance three, right? But we haven't created that.

But that's still to be done.

There's a lot to come in the industry, but I'm excited you guys are working on some cool stuff, definitely contributing to this giant question mark we all have. Okay. So now we have a lot of different AI tools. OpenAI famously, with ChatGPT, now lets you connect things like your email, documents, schedules, stuff like that. What does "just enough access" really look like for an AI that helps you with your daily tasks? People are kind of asking, how much should I allow my AI model to do?

What we wanna do is give you security guardrails and a policy management engine, so that you can feel confident giving it access to everything, right? And letting it do whatever it wants, because you are confident that the access management rules you have put in place are being respected by humans, by deterministic software, and by AI, and that it's all under one umbrella. It's all one security boundary. So you can let AI run and be as productive as it wants to be.

Yep. Okay. I love that. If we're talking to security teams, because I know we have a handful that listen to this show, what are some signs they should be looking for, maybe unused accounts or weird agent behavior, to know whether their AI setup is healthy?

Well, different organizations approach this differently, because we've talked to a lot of customers about this. In some organizations, it is the Panopticon, right? They want to be able to see everything that every developer is typing into the AI and exactly what came back. And they want to be able to watch and monitor all of that for personally identifiable information, for corporate secrets, for anything that might be worrisome.

So giving them that capability is certainly possible. Most organizations are a little bit more reserved; they don't really want to read all of the text of all of their employees. But they do want to look for things like, when did this AI use a tool? Did it go read the financial database?

Did it go log in as a user and send over a hundred…? Looking for suspicious behavior like that is where most organizations are today, as they're implementing agents and making sure that everything is going okay.
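The lighter-touch monitoring described here, watching tool use rather than reading every prompt, can be sketched as a few rules over agent events. In this minimal Python sketch the event shape, the `SENSITIVE_TARGETS` set, and the bulk-send threshold are hypothetical, and the bulk action is assumed to be outbound email because the recording cuts off before saying what was sent.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class AgentEvent:
    agent: str   # which AI agent acted
    tool: str    # e.g. "db.read", "email.send"
    target: str  # resource or recipient


SENSITIVE_TARGETS = {"db:finance"}  # hypothetical: resources worth an alert on any read
BULK_SEND_THRESHOLD = 100           # hypothetical: alert when an agent sends more than this


def flag_suspicious(events: list[AgentEvent]) -> list[str]:
    """Return human-readable alerts based on tool use, without inspecting prompt text."""
    alerts = []
    for e in events:
        if e.tool == "db.read" and e.target in SENSITIVE_TARGETS:
            alerts.append(f"{e.agent} read {e.target}")
    sends = Counter(e.agent for e in events if e.tool == "email.send")
    for agent, count in sends.items():
        if count > BULK_SEND_THRESHOLD:
            alerts.append(f"{agent} sent {count} emails")
    return alerts
```

Real thresholds would depend on each organization's normal baseline; the point is that these rules only need tool-call metadata, not the content of employees' conversations.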

Earlier you talked about auditability, which I think is the answer to my next question, but I'm wondering if you can double click and give some insights on tools, directions, or things to think about when it comes to implementing AI auditability. Regulators are starting to ask a lot more questions about AI, so my question is: what can companies do now so that they're ready when an auditor comes and asks how their AI is being secured?

There are a number of startups, ourselves included, who are working on auditability for AI.

And so getting a system in place to watch what is going on there and make sure that every transaction on every device has the same security properties, the same boundaries, the same auditability, that it's not like, oh, if you're working on a Mac, then we can see it. But if you're over here on Linux, then like, I don't know, that's just the wild west and we don't know how to regulate that. We wanna make sure that it's a consistent security boundary across your whole ecosystem. That's a thing that a lot of people miss. And it's really important to make sure that you have that consistency across your organization. Otherwise, your audit is never gonna be complete or consistent.

Okay. That makes a lot of sense. Let's say you joined a mid-sized company today. What would your first ninety days look like to help them get back on track?

Yeah. So first, I think we would want to identify what tools and what clients are being used. So, are people using Claude Code?

Are they using ChatGPT? Like, what kinds of things are in the environment? That's the primary thing to audit. And then next you want to audit what sorts of tools those things are using.

So are they using MCP? Are they using standard in and out? Are they just directly calling APIs? Are they using webhooks?

What kinds of things are they using to get their hands into information in your environment? So step one is like, what do you have? And step two is what does it have access to? And then step three would be like actually auditing that.

And then step four would be making sure that you have guardrails in place so that you can enforce least privilege, right? If someone doesn't need access to something to do their job, their agent shouldn't get access to it either.
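Steps two through four can be sketched as a comparison between what each AI client has been granted and what its user actually needs for their job. In this minimal Python sketch the inventory format, the scope strings, and the idea that job needs are already known per user are hypothetical assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class AgentGrant:
    client: str        # step one: which AI client, e.g. "Claude Code" or "ChatGPT"
    user: str          # the person the client acts for
    scopes: set[str]   # step two: what the client currently has access to


def excess_scopes(grants: list[AgentGrant],
                  job_needs: dict[str, set[str]]) -> dict[str, set[str]]:
    """Step three (audit): scopes each client holds beyond what its user needs."""
    report = {}
    for g in grants:
        extra = g.scopes - job_needs.get(g.user, set())
        if extra:
            report[f"{g.client}/{g.user}"] = extra
    return report


def enforce_least_privilege(grants: list[AgentGrant],
                            job_needs: dict[str, set[str]]) -> list[AgentGrant]:
    """Step four (guardrails): trim every grant to the intersection with the user's needs."""
    return [AgentGrant(g.client, g.user, g.scopes & job_needs.get(g.user, set()))
            for g in grants]
```

A user with no recorded needs ends up with an empty grant, so the default is deny rather than inherit.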

Okay. That makes a lot of sense. Sarah, as we're wrapping up the episode, I always like to ask people what's top of mind when it comes to where this technology is gonna be in the next one to two years. I used to ask about five years, but that feels way too far now with how fast everything in AI is changing. And I say AI and security, but it's really just security; AI just happens to be a very prevalent vector today. So as you look at security over the next one to two years, what are some of the areas that people should pay the most attention to, where maybe we'll see some of the biggest changes?

Yeah. So a lot of security today has to do with training, right? Training people to recognize phishing emails, training them to recognize deepfakes, training them to understand that if someone calls you on the phone, it might sound like your boss, but it might not actually be your boss. And that sort of training is going to be useless.

The AI is too good now. Fakes and frauds are completely indistinguishable from the real thing. And so we really need deterministic controls. You need to rely on biometrics.

You need to rely on cryptography. Relying on human judgment is no longer going to be sufficient.

We'll need systems that flag things coming in before the user gets them. We already know that users get phished, but it's gonna be a whole other level. So what I'm hearing is that we just need more systems to help us deal with that. Is that right?

Yeah. We have to make it unphishable, so that there is nothing the user can disclose. Right? There is no password they can give away.

There is no API key they can share that will get the attacker anything. So we need to create our systems in such a way that it is impossible for a user to screw up and give away information they're not supposed to, because they just don't have access to that information. That's not the way that information is transmitted. And so that's not the way access boundaries work in your organization, and that defeats the phishing campaign.

Okay. I love that. I also know that this is a problem and solution that you guys are doing quite well over at Beyond Identity today. If people that are listening wanna go find out more about what you guys are working on in Beyond Identity, what's the best way for them to do that?

Beyondidentity.com/demo. That'll get them either a self-serve demo, or they can have one of us come and demo the product for them in their environment. And you can always get ahold of me on LinkedIn. I'm always happy to answer questions and give people more information.

Okay. Amazing. Well, thank you so much, Sarah, for jumping on the show today. I'll leave a link in the show notes so people can go and find Beyond Identity, see more about that.

But thanks so much for coming on. To all of the listeners, thank you so much for tuning into the podcast today. I hope you learned a ton about AI and security. So many of my questions have been answered.

So this was an awesome interview. Thanks for coming on. To everyone listening, make sure to go leave a rating and review wherever you get your podcasts, and we will catch you in the next episode.


And it turns out that people want to work from home, and people want to work from different places and not necessarily the office. And so we punched some holes in the network, and we made some VPNs, and we let some other people in, and people have contractors who need to access things within their environment. And more and more, we compromised the integrity of the networking perimeter until it really became a non signal as to who is a good guy and who is a bad guy. And so it wasn't something that we could use really to be useful from a cybersecurity context.

And so the thinking behind the Zero Trust movement was really that rather than identifying the network, we identify the person, that either via a credential, like a password or a biometric or some sort of cryptographic protocol. And then we identify the device that person is working on and make sure that it's the device we think it is, that it's not someone logging in as them from a malicious computer, and then we analyze the software that's running on the device and make sure that there's no malware running, that the programs that we think are running are in fact valid programs that have valid checksubs, and we know that the computer programs that are running are not going to insert malicious things between the user and the computer.

Okay. I love it. Incredible explanation. And then the other thing I would love to get have you explain is to people, I think like, you know, security can mean a lot of different things to a lot of different people. What is it specifically that Beyond Identity works on? What is it that you're excited about? How did, you know, like what got you excited about working with, working on Beyond Identity and what are some of the problems that you guys are solving?

So often in my work in security, there have been trade offs between security and usability, right? Think of the TSA line at the airport, right? The more secure it is, the longer it takes for everyone to get through. The more of a hassle it is to take off your shoes and x-ray your luggage and all that kind of stuff.

The same is true of corporate security, right? We can make it very difficult for people to get into the system, but then it's very difficult for people to get into the system. And so the thing that really attracted me to Beyond Identity was that it's a solution that is both more secure and more usable. So by getting rid of passwords, really the only people who liked passwords were attackers because they're so simple to compromise they're so difficult for humans to actually remember correctly.

And so what we do instead is we use a combination of biometrics and cryptographic proof to bind the human identity to an immovable passkey on the corporate laptop, and so we can act as an identity provider or as a multi factor authentication layer in your corporate environment, and that way your users can get rid of passwords entirely and have increased security.

Okay. I love it. I think everyone is honestly rejoicing at the concept of not having any passwords to remember. I have a password tool that remembers all my passwords.

I don't even remember them. I'm not sure that's very secure either, because you know, LastPass gets hacked and all this kind of stuff. So I guess my question is though, in today's environment, obviously AI assistants and agents, they work very differently from human users. What are some of the biggest ways that they change how companies need to think about security today?

Yeah. So, Beyond Identity had a lot of customers before the AI craze, right? Because security and passwordless are both great things. But since then, we've seen a huge uptick in phishing and particularly spear phishing.

AI has enabled attackers to get to know a lot of things about a specific person and then create entire applications that are pixel perfect malware, right? They look exactly like the site that you think you're at. The email looks exactly like a valid email. It's coming from a valid email address.

All of these things were very technically difficult to do in twenty twenty one, but now that we have AI tools that are very good at coding, that are very fast, we're seeing a huge uptick in successful phishing campaigns.

Yep. And I can say just this week, my wife sent me a text message. She's like, what's this? And it was like some like automated like PayPal subscription thing that had been sent to us.

And it was of course a phishing attack because the return, like the, the reply email was like some random email, but it looked like it was coming from actual PayPal and like it looked super legitimate. Obviously, I think you're right. Identity based attacks are becoming a lot more common. How do you think those, you know, attacks look when the target is like an AI system instead of maybe like a person?

Like what are some of the differences?

Well, it's fascinating. One of the things that AI is also very good at is code scanning. So we've seen there was actually just today, there was a blog post by Anthropic disclosing that they have seen Claude code used for state sponsored cyber espionage, where an attacker used it to find exploits in an active code base and exploit them and exfiltrate data. And so we know that the ability to use AI to write more code is going to get us more vulnerabilities, and it's going to make it easier to scan and find those vulnerabilities.

And so we're trying to find deterministic ways to protect against that. And specifically, one of the things that we're looking at is model context protocols. So I don't know if you and your listeners have talked about this at It's a great way to get AI to use tools, but the problem is that it breaks normal access management boundaries, where normally when you call an API, you have an input and it gives you an output. With model context protocol, there is such a thing as server sent events, and those server sent events can be elicitation.

So it can, if you want to book an airline ticket, it can ask, okay, your user like a window seat or an aisle seat? And your LLM will go into its memory, and it will say, Ah, Jaden likes aisle seats, and so you can go and book that. But it can ask anything it wants to ask. It can ask what medical things has Jaden been researching, or what legal things has Jaden been researching, or what kinds of exploits has Jaden been studying in his company's code base?

Right? It can ask all sorts of things. It is not a input output. It is a two way conversation.

That is a very different access management field than we've been in before. The fundamentals of the security model behind OAuth and API keys may be insufficient. We're doing some research into how to how to shore that up.

Yeah. Do you guys have like any concepts of like what direction we might need to take in that? Because I think, like, I hear people talk about this and it always like scares me. I'm like, oh crap, we're just opening another can of worms. Like, what are the, I guess in your opinion, like, what solutions do you have?

Yeah. So our bread and butter as a company is cryptographically verifying devices. Right? And so the idea there is that if you are connecting a corporate laptop to a corporate MCP server, right?

So you have a software developer who wants to get in with linear tickets, and he wants to use his AI to do that so that he can automatically update them and analyze them and get a to do list out of them and all the things that he needs to get his work done, we can verify, right, that the MCP request is coming from the laptop we think it's coming from. So that if an attacker were to say, I wanna get in the middle of that MCP request, and I want to either insert malware, or I want to listen in, or I want to do some prompt injection, it's physically impossible. Right? We can tell immediately that the source of the information is not the source that it's claiming to be.

And so that's where we're focusing a lot of our research.

Fascinating. And I mean, I think it's obviously like a hard question because there is no definitive answer. It's like security is always a cat and mouse game where new things will come out, and then we have to come up with new security protocols and new ways to address those. I love the device concept.

I wonder though too, like when we talk about AI agents and stuff, like a lot of these tools we're now using, for example, like I have a whole computer on my desk where I just have it running ChatGPT's Atlas browser, and I just tell it to go do things for me all day, and it's clicking around, and I kinda keep an eye on it, but it's like doing stuff. Saves me a ton of time. It's amazing. But a lot of people have talked about the concern of you could go to a website, and there could be hidden text on the website that the agent is reading and it directs it to do something that you don't want it to do.

In that case, it could still be coming from your laptop that you have running that's inside of the quote unquote secure network. So there's these prompt injections. They can be pretty crazy. When you look at like net security vulnerabilities from AI versus net positive outcomes, because you can use it for both.

Where do you see the scales? Do you think we come to a point where we can get this pretty secure? Are you like more concerned or excited at this point?

No, I'm super excited. I think it's going to make us ten times more productive as a species.

Totally. Yeah.

So I'm very bullish on AI, but I think that you're absolutely right that in addition to verifying devices, like, need to be security guardrails. There there needs to be an access management system that goes around that AI to make sure that it can't get to things that it's not supposed to get to, that it checks in with you before it does something crazy like deleting databases. So we need those sorts of controls and that sorts of fine grained access management that we see in the API world. We need that in the AI world as well.

And that's just a matter of time. We just need to build that. So I think it's completely possible. It's going to happen that we're all gonna get more productive and happier and able to build more cool things, but we need to do so in a safe way, right?

Because if AI gets a bad rap because it gets breached all the time, then people are gonna get down on it and stop using it, and we don't want that to happen.

A hundred percent. Yes. I love my second monitor that runs twenty fourseven. It's amazing. If a company wants to roll out an internal AI assistant, right?

There's a bunch of them. I mean, I'm talking about Atlas, but there's other ones. Some of them are connected to your email, your documents, your code. What are some of the top safety steps you'd recommend they put in place before rolling something out like that?

Yeah. So it's important to have an auditability trail if you have something like that, particularly if you are a company that is regulated, like a healthcare or a finance company, where regulators are gonna come back and they're gonna say, Hey, we need a record of everything that happened here, and we need to know what was accessed in your system by who. And so when you have a chatbot sitting in the middle, sometimes those audit logs will just say, oh, the chatbot went and got whatever information it was, and it won't be able to trace it all the way back to a human. And so one of the most important things you can do as you're deploying agents in your environment is to make sure that you have an auditable trail of what human coming from what device was attempting to do what action in your environment, and was it allowed or denied and why.

Having that layer in the middle will keep all the same productivity that you have with all of your agents, but it will just give you that layer of auditability that you can use later for forensics if something bad happened, and for your own peace of mind.

And I can even imagine using AI to help you conduct the forensic, it goes through the audibility layer, reads everything, and flags it for anything suspicious, or any prompt injections, or anything like that. So, I think AI Net is the solution here. I love that. I think that's definitely a fantastic solution. What are some of the attack paths or maybe mistakes that people don't think about? You know, like we talk about prompt injections or like stolen tokens, but some of these things that can cause real problems for AI systems.

Yeah. We've definitely seen stolen tokens. We've seen prompt injection. We've seen malicious agents. We've seen products on the black market that are as good as corporate email products for phishing and spam that allow you to do AB testing and multi level campaigns. Like, it's just like sitting in a, in a marketing manager's office only it's evil marketing manager.

Oh no.

But some of the newer ones that we haven't seen in the wild yet are malicious MCP servers and MCP phishing. So telling someone like, hey, I'm the linear server, go install me on your computer. And then from there, they can do remote code execution, they can go access your memory of your LLM. And so we're very concerned that users aren't gonna be able, just like users today, have trouble discriminating. Like when it says log in with Google, they're like, okay, well, Google must be safe. The same thing is gonna happen here where it's gonna say, oh, you should connect your cloud code to this magical MCP server that does all these wonderful things. And it might even do all those wonderful things, but it might also exfiltrate all your data out of your environment.

Yep. Yep. That is true. And I mean, it's such a temptation because, for example, something like Cloud Code is amazing.

Like, I have a startup and we run it all day long, and it is, it's the greatest thing since sliced bread for us, basically. We 10x our code outputs, but there are definitely risks and trade offs, and you have to be able to audit it and look at what it's doing as well. So I think that's really important. I would love to get like your feedback or your take on your perspective from your career.

You've worked at AWS. You've worked at Auth0. By the way, is it called Auth0 or OAuth?

So those are two different things. I worked on both. There's a company called Auth0 that implements a standard called OAuth.

Okay. Thank you. Auth0 and AWS. Now you're Beyond Identity. What's something maybe about identity or about access that you believed early in your career that AI has changed your opinion or perspective on?

Yeah. So OAuth in particular was a very clever solution that was used within identity and access management in concert with OpenID Connect to create this flow that you're very familiar with, where you wanna access an application, but you don't wanna create a new username and password. And so you log in with your corporate credentials or you log in with Google, you do that SSO single sign on dance. Right?

And that's a very common pattern. We're trying to use that pattern again with MCP and it is not working so well. And so we may have to come up with something new. The way that MCP works today is that if an application wants to connect with client, like an IDE or a cursor or a cloud code, it will do something that OAuth calls dynamic registration, which means that it goes, it has never seen this client before, and it just issues that client, Hey, here's your name, and here's your static password.

And anytime you come and talk to me, you give me that static password, and then I'll know that it's you. And that is a pattern that we really try to avoid in identity and access management, right? Because we don't want static secrets laying around can be Stolen, that can be replayed. And so dynamic client registration in particular is something where it was good for the problem that it was invented to solve, but applying it to AI could be actually quite dangerous.

So much is changing with AI. I think you could basically ask anyone at any organization, at any role, like, what has changed today, and they will have a list. I think right now, like companies worry a lot about AI leaking your data. I think they worry probably less about what AI can do once it's actually connected. What are some things that leaders should think differently about when it comes to giving access to an AI agent versus giving access to say like an employee?

So an AI agent treats data differently, and it may not have the same common sense or guardrails that an employee would. So you can tell an AI, Hey, I want you to go get this thing. And it may not know that it's not supposed to break into the system to get that thing, that it's not supposed to, I want you to go do this. And it's going to try every way it can think of.

And it's going look up online like, Oh, I got this error message. And it's going see, Oh, the way to get around that error message is to install malware. Right? And so it's very diligently going to go like install that thing and try and get there.

And so we have to be really careful with agents that don't necessarily have as much common sense or good intent as humans and who, as I said, where deterministic programs have this input output thing that we know that is a pattern that we have built levels of security architecture around, AI kind of breaks through all of those. And the way that it transfers information is across those boundaries. And so you just have to be very careful as you're applying agents that you're keeping those same security guardrails that you have in place today. Put those same security guardrails on your agents.

Yeah. It's not, yeah, you can't completely just be like, oh, it's just like a computer program. It's not gonna do anything like, yes. They're very capable of doing all sorts of things.

So I love that. Yeah. Keep the same guardrails in place. What's like a really simple or maybe practical way for a company to decide whether an AI use case is a safe one, if it's compliant and if it's actually ready to use?

I think we hear about hackathons and every company is trying to come up with ways to use AI to do things. What's a good framework for deciding what is good and safe and what is not?

That's a great question that we have not solved yet. I know. There are several organizations working on that. There is a great GitHub repo you can check out called SafeMCP.

Okay. Where they are working on categorizing the MITRE attack categorization, all of the different vulnerabilities within MCP. And I think probably over time, out of that will come something like the NIST levels of assurance, where we will see, okay, if you have implemented MCP in this way, you are level of assurance one, but if you do these ten things, and you protect against these attacks, then you're level of assurance three, right? But we haven't created that.

But that's still to be done.

There's a lot to come in the industry, but I'm excited you guys are working on some cool stuff. Definitely contributing to this giant question mark we all have. Okay. So, now we have a lot of different AI. OpenAI famously with ChatGPT now lets you access things like your email and documents, schedules, stuff like that. What does just enough access really look like for an AI that helps you with like your daily tasks? Like, people are kinda asking this question like, how much should I allow my AI model to do?

What we wanna do is give you security guardrails, and give you a policy management engine so that you can feel confident giving it access to everything, right? And letting it do whatever it wants because you are confident that the access management rules that you have put in place are being respected by humans, by deterministic software and by AI, that it's all under one umbrella. It's all one security boundary. So that you can let AI run and let it be productive as it wants to be.

Yep. Okay. I love that. I like, if we're talking to like security teams, because I know we have a handful that, that listen to this show, what are some signs they should be looking for, maybe like unused accounts or weird agent behavior, to know whether their AI setup is healthy?

First of all, you should be able to see, well, different organizations approach this differently, because we've talked to a lot of customers about this. So in some organizations, it is the Panopticon, right? And they want to be able to see everything that every developer is typing into the AI and exactly what came back. And they want to be able to watch all of that monitor all of that for, person identifiable information for corporate secrets, for anything that might be worrisome.

So giving them that capability is certainly possible. Most organizations are a little bit more reserved where they don't, they don't really want to read all of the text of all of their employees. But they do want to look for things like when did this AI use a tool? Did it go read the financial database?

Did it go log in as a user and send over a hundred Looking for suspicious behavior like that, that's where most organizations are today, as they're implementing agents and kind of making sure that everything is going okay as they're implementing.

Earlier you talked about auditability, which I think is the answer to my next question, but I'm wondering if you can like double click and give maybe some insights on tools or directions or things to think about when we think about how to implement AI ability. Because basically right now, there's like regulators who are starting to ask a lot more questions about AI, so my question is kind of like, what can companies do now so that they're ready when an auditor comes and asks how their AI is being secured?

There are a number of startups, ourselves included, who are working on auditability for AI.

And so getting a system in place to watch what is going on there and make sure that every transaction on every device has the same security properties, the same boundaries, the same auditability, that it's not like, oh, if you're working on a Mac, then we can see it. But if you're over here on Linux, then like, I don't know, that's just the wild west and we don't know how to regulate that. We wanna make sure that it's a consistent security boundary across your whole ecosystem. That's a thing that a lot of people miss. And it's really important to make sure that you have that consistency across your organization. Otherwise, your audit is never gonna be complete or consistent.

Okay. That makes a lot of sense. If you joined like a, I don't know, maybe like a more like a mid sized company today. Let's say you were joining that company today, what would your first ninety days look like to kind of help them get back on track?

Yeah. So first, I think we would want to identify what tools are being used, what what clients are being used. So Yeah. Are people using Cloud Code?

Are they using ChatGPT? Like, what kinds of things are in the environment? That's the primary thing to audit. And then next you want to audit what sorts of tools those things are using.

So are they using MCP? Are they using standard in and out? Are they just directly calling APIs? Are they using webhooks?

What kinds of things are they using to get their hands into information in your environment? So step one is like, what do you have? And step two is what does it have access to? And then step three would be like actually auditing that.

And then step four would be making sure that you have guardrails in place, so that you can enforce least privilege, right? If someone doesn't need access to something, they should their agent should not get access to a thing that they don't actually need to do their job.

Okay. That makes a lot of sense. Sarah, as we're wrapping up the episode, I always like to people, what are some of the things that are top of mind when it comes to where this technology is gonna be in the next like one to two years? I used to ask like five years, but I feel like that's like way too far now with AI and how fast everything's changing. So as you kind of look at AI and security, and I say AI and security, it's really just security, but AI just happens to be a very prevalent vector today. So as you kind of look at security over the next one to two years, what are some of the areas that you think people should pay the most attention to? And maybe we'll see some of the, some of the biggest changes.

Yeah. So a lot of security today has to do with training, right? Training people to recognize phishing emails, training them to recognize deepfakes, training them to understand that like, if someone calls you on the phone, it might sound like your boss, but it might not actually be your boss. And that sort of training is, it's going be useless.

The AI is too good now. It's completely indistinguishable from fakes and from frauds. And so we really need deterministic controls. You need to rely on biometrics.

You need to rely on cryptography. That relying on human judgment is no longer something that's gonna be sufficient.

We'll need systems that will flag things coming in before the user gets it. We already know that users get phished, but it's gonna be a whole another level. So we just need more systems to help us deal with those is kinda what I'm hearing. Is that right?

Yeah. We have to make it unphishable, so that there is nothing the user can disclose. Right? There is no password they can give away.

There is no API key they can share that will get the attacker anything. So we need to create our systems in such a way that it is impossible for a user to screw up and give away information they're not supposed to, because they just don't have access to that information. That's not the way that information is transmitted. And so that's not the way access boundaries work in your organization, and that defeats the phishing campaign.

Okay. I love that. I also know that this is a problem and solution that you guys are doing quite well over at Beyond Identity today. If people that are listening wanna go find out more about what you guys are working on in Beyond Identity, what's the best way for them to do that?

Beyondidentity.com/demo . We'll get them either a self serve demo, or they can have one of us come and and demo the product for them in their environment. And you can always get ahold of me on LinkedIn. I'm always happy to answer questions and give people more information.

Okay. Amazing. Well, thank you so much, Sarah, for jumping on the show today. I'll leave a link in the show notes so people can go and find Beyond Identity, see more about that.

But thanks so much for coming on. To all of the listeners, thank you so much for tuning into the podcast today. I hope you learned a ton about AI insecurity. I have so many questions that have been answered.

So this was an awesome interview. Thanks for coming on. To everyone listening, make sure to go leave a rating and review wherever you get your podcasts, and we will catch you in the next episode.

TL;DR

Full Transcript

AI has enabled attackers to get to know a lot of things about a specific person and then create entire applications that are pixel perfect malware. One of the things that AI is also very good at is code They have seen Claude code used for state sponsored cyber espionage where an attacker used it to find exploits in an active code base and exploit them and exfiltrate data. It's just like sitting in a marketing manager's office, only it's evil marketing manager. You should connect your cloud code to this magical MCP server that does all these wonderful things.

And it might even do all those wonderful things, but it might also exfiltrate all your data. If AI gets a bad rap because it gets breached all the time, then people are gonna stop using it. The AI is too good now. It's completely indistinguishable from fakes and from frauds.

You need biometrics. You need cryptography. Relying on human judgment is no longer sufficient.

Welcome to the AI Chat Podcast. I'm your host, Jaden Schafer. And today on the podcast, I'm super excited to have Sarah Cecchetti joining us. She leads AI security and Zero Trust efforts at Beyond Identity.

So basically, helps companies keep their systems and data safe as they're adopting tools like ChatGPT and other AI systems. Before this, she worked on core identity programs at AWS and Auth, which give her a really practical real world view of how organizations can securely use modern AI. So I'm really excited to have Sarah on the show. So welcome to the show today, Sarah.

Thanks for having me on. It's really great to be here.

Before we get into my one hundred and one questions I have for you, and that everyone has been asking about this, I'm just curious, like, got you into the space? What got you interested in, security? Give us a little bit of your background.

Yeah. So I started my career in software development and then moved into a specialization within cybersecurity called identity and access management. And so that's the idea that when things are happening in your environment, you want to know who is doing those things, what computer they're using, what applications are running on those computers so that you can have a zero trust strategy within your organization. And so working on that for humans for many years was great.

But increasingly we've had non human identities coming online and doing work. Now we have AI in the mix, both in CICD pipelines and in day to day work. We have really interesting identity challenges and access management challenges that we haven't had. So it is a great time to be an identity and access management professional.

Yes. So much is happening. Definitely a very needed field, we're seeing this interesting shift with all this agentic stuff going on. For people that are listening that are unfamiliar with the term zero trust, I'm wondering if you could just give a brief explanation of that, to level set and talk about what it means when we're talking about kind of like AI tools and these kind of internal pilots that people are running.

Yeah. So the origin of the term Zero Trust is a book called Zero Trust Networks by Evan Gilman. I have it back here somewhere. It's the O'Reilly book with the lobster on the cover.

Perfect.

And basically, the idea behind Zero Trust is this: we used to do cybersecurity by securing the network. You had the good people inside the network perimeter, the bad people stayed outside, and that was how you kept your security in place. Access management really wasn't a thing. If you were on the network, you could get to everything else on the network.

And it turns out that people want to work from home, and from different places, not necessarily the office. So we punched some holes in the network, we made some VPNs, we let some other people in, and people have contractors who need to access things within their environment. More and more, we compromised the integrity of the network perimeter until it became a non-signal as to who is a good guy and who is a bad guy. So it wasn't something we could really use in a cybersecurity context.

And so the thinking behind the Zero Trust movement was that rather than identifying people by their network, we identify the person, either via a credential like a password, a biometric, or some sort of cryptographic protocol. Then we identify the device that person is working on and make sure it's the device we think it is, not someone logging in as them from a malicious computer. And then we analyze the software running on the device and make sure there's no malware running, that the programs we think are running are in fact valid programs with valid checksums, and that nothing running on the computer is going to insert something malicious between the user and the computer.
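To make the three checks Sarah describes concrete (the person, the device, and the software on the device), here is a minimal sketch of an access decision in Python. Everything in it is hypothetical and simplified: the user check is reduced to a boolean, device identity to a string looked up in a registry, and "valid checksums" to SHA-256 digests compared against an allowlist. Real zero trust products attest devices and software far more rigorously.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class AccessRequest:
    user_verified: bool   # outcome of the credential check (password, biometric, or cryptographic proof)
    device_id: str        # identifier the device presents (assumed to be attested elsewhere)
    running_programs: dict[str, bytes] = field(default_factory=dict)  # program name -> binary contents


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def software_is_clean(programs: dict[str, bytes], allowlist: dict[str, str]) -> bool:
    # Every running program must be known, and its checksum must match the allowlisted one.
    return all(
        name in allowlist and sha256_hex(binary) == allowlist[name]
        for name, binary in programs.items()
    )


def zero_trust_decision(req: AccessRequest, registered_devices: set[str], allowlist: dict[str, str]) -> bool:
    # Note what is absent: network location. Being "inside" grants nothing by itself.
    return (
        req.user_verified
        and req.device_id in registered_devices
        and software_is_clean(req.running_programs, allowlist)
    )


# Usage with made-up data.
editor = b"editor-binary-v1"
allowlist = {"editor": sha256_hex(editor)}
devices = {"laptop-42"}

print(zero_trust_decision(AccessRequest(True, "laptop-42", {"editor": editor}), devices, allowlist))       # True
print(zero_trust_decision(AccessRequest(True, "laptop-42", {"editor": b"tampered"}), devices, allowlist))  # False
print(zero_trust_decision(AccessRequest(True, "unknown-pc", {"editor": editor}), devices, allowlist))      # False
```

The design choice worth noticing is that all three checks must pass on every request; there is no "trusted zone" that skips any of them.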

Okay. I love it. Incredible explanation. The other thing I'd love to have you explain is this: security can mean a lot of different things to a lot of different people. What is it specifically that Beyond Identity works on? What got you excited about working on Beyond Identity, and what are some of the problems you're solving?

So often in my work in security, there have been trade-offs between security and usability, right? Think of the TSA line at the airport. The more secure it is, the longer it takes for everyone to get through, and the more of a hassle it is to take off your shoes and X-ray your luggage and all that kind of stuff.

The same is true of corporate security, right? We can make it very difficult for attackers to get into the system, but then it's also very difficult for legitimate users to get in. And so the thing that really attracted me to Beyond Identity was that it's a solution that is both more secure and more usable, because it gets rid of passwords. Really, the only people who liked passwords were attackers, because passwords are so simple to compromise and so difficult for humans to actually remember correctly.

And so what we do instead is use a combination of biometrics and cryptographic proof to bind the human identity to an immovable passkey on the corporate laptop. We can act as an identity provider or as a multi-factor authentication layer in your corporate environment, and that way your users can get rid of passwords entirely and have increased security.

Okay. I love it. I think everyone is honestly rejoicing at the concept of not having any passwords to remember. I have a password tool that remembers all my passwords.

I don't even remember them. I'm not sure that's very secure either, because, you know, LastPass gets hacked and all that kind of stuff. So my question is: in today's environment, AI assistants and agents obviously work very differently from human users. What are some of the biggest ways they change how companies need to think about security?

Yeah. So, Beyond Identity had a lot of customers before the AI craze, right? Because security and passwordless are both great things. But since then, we've seen a huge uptick in phishing and particularly spear phishing.

AI has enabled attackers to get to know a lot of things about a specific person and then create entire applications that are pixel perfect malware, right? They look exactly like the site that you think you're at. The email looks exactly like a valid email. It's coming from a valid email address.

All of these things were very technically difficult to do in 2021, but now that we have AI tools that are very good at coding and very fast, we're seeing a huge uptick in successful phishing campaigns.

Yep. And just this week, my wife sent me a text message asking, what's this? It was some automated PayPal subscription notice that had been sent to us.

And of course it was a phishing attack, because the reply-to email address was some random address, but it looked like it was coming from PayPal and it looked super legitimate. So I think you're right: identity-based attacks are becoming a lot more common. What do those attacks look like when the target is an AI system instead of a person?

What are some of the differences?

Well, it's fascinating. One of the things AI is also very good at is code scanning. Just today, there was a blog post by Anthropic disclosing that they have seen Claude Code used for state-sponsored cyber espionage, where an attacker used it to find exploits in an active code base, exploit them, and exfiltrate data. So we know that using AI to write more code is going to give us more vulnerabilities, and it's also going to make it easier to scan for and find those vulnerabilities.

And so we're trying to find deterministic ways to protect against that. Specifically, one of the things we're looking at is the Model Context Protocol, or MCP. I don't know if you and your listeners have talked about this at all. It's a great way to get AI to use tools, but the problem is that it breaks normal access management boundaries. Normally, when you call an API, you give it an input and it gives you an output. With the Model Context Protocol, there is such a thing as server-sent events, and those server-sent events can be elicitation requests.

So if you want to book an airline ticket, the server can ask, okay, does your user like a window seat or an aisle seat? And your LLM will go into its memory and say, ah, Jaden likes aisle seats, so go ahead and book that. But the server can ask anything it wants. It can ask what medical things Jaden has been researching, or what legal things Jaden has been researching, or what kinds of exploits Jaden has been studying in his company's code base.

Right? It can ask all sorts of things. It is not a simple input and output. It is a two-way conversation.

That is a very different access management landscape than we've been in before. The fundamentals of the security model behind OAuth and API keys may be insufficient, and we're doing some research into how to shore that up.
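One way to picture the problem Sarah describes is a client-side gate that reviews what a server is asking for before the LLM answers. The sketch below is illustrative only: the request shape is modeled loosely on MCP's `elicitation/create` message (a human-readable `message` plus a `requestedSchema` whose `properties` name the fields the server wants), and the per-server allowlist, server name, and field names are all hypothetical.

```python
# Hypothetical client-side policy: which pieces of user context each connected
# MCP server is allowed to ask about. Server and field names are made up.
ALLOWED_FIELDS: dict[str, set[str]] = {
    "airline-booking": {"seat_preference", "meal_preference"},
}


def review_elicitation(server_name: str, request: dict) -> tuple[bool, list[str]]:
    """Return (permitted, disallowed_fields) for an incoming elicitation request.

    The request shape is an assumption modeled loosely on MCP elicitation:
    params.requestedSchema.properties lists the fields the server wants filled in.
    """
    params = request.get("params", {})
    requested = set(params.get("requestedSchema", {}).get("properties", {}))
    allowed = ALLOWED_FIELDS.get(server_name, set())
    disallowed = sorted(requested - allowed)
    # Permit only non-empty requests whose every field is on this server's allowlist.
    return (bool(requested) and not disallowed, disallowed)


seat_request = {
    "method": "elicitation/create",
    "params": {
        "message": "Window or aisle?",
        "requestedSchema": {"type": "object", "properties": {"seat_preference": {"type": "string"}}},
    },
}
snoop_request = {
    "method": "elicitation/create",
    "params": {
        "message": "Anything else we should know?",
        "requestedSchema": {
            "type": "object",
            "properties": {
                "seat_preference": {"type": "string"},
                "recent_medical_searches": {"type": "string"},
            },
        },
    },
}

print(review_elicitation("airline-booking", seat_request))   # (True, [])
print(review_elicitation("airline-booking", snoop_request))  # (False, ['recent_medical_searches'])
```

A request that fails the review would be declined rather than passed to the LLM, which keeps the "two-way conversation" bounded by policy instead of by whatever the server decides to ask.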

Yeah. Do you have any concepts of what direction we might need to take? I hear people talk about this and it always scares me. I'm like, oh crap, we're just opening another can of worms. In your opinion, what are the solutions?

Yeah. So our bread and butter as a company is cryptographically verifying devices. Say you are connecting a corporate laptop to a corporate MCP server, right?

Say you have a software developer who wants to work with Linear tickets, and he wants to use his AI to automatically update them, analyze them, and get a to-do list out of them, all the things he needs to get his work done. We can verify that the MCP request is coming from the laptop we think it's coming from. So if an attacker says, I want to get in the middle of that MCP request, and I want to insert malware, or listen in, or do some prompt injection, it's physically impossible. We can tell immediately that the source of the information is not the source it claims to be.

And so that's where we're focusing a lot of our research.
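As a rough illustration of "we can tell immediately that the source is not the source it claims to be," here is a sketch of per-request signing and verification. It is an assumption-heavy stand-in, not Beyond Identity's method: a real passkey keeps a private key in the laptop's secure hardware and the server holds only the public key, whereas this sketch uses a shared-secret HMAC from the Python standard library so it runs without third-party packages. The device registry, the nonce scheme, and the tool name `list_tickets` are hypothetical; `tools/call` is the MCP method for invoking a tool.

```python
import hashlib
import hmac
import json
import secrets

# Hypothetical registry of device keys known to the MCP server.
# Stand-in only: real device-bound passkeys use asymmetric keys, not a shared secret.
DEVICE_KEYS: dict[str, bytes] = {"laptop-42": secrets.token_bytes(32)}
SIGNED_FIELDS = ("device_id", "nonce", "payload")


def canonical(body: dict) -> bytes:
    # Deterministic serialization so signer and verifier hash identical bytes.
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode()


def sign_request(device_id: str, key: bytes, payload: dict) -> dict:
    body = {"device_id": device_id, "nonce": secrets.token_hex(16), "payload": payload}
    tag = hmac.new(key, canonical(body), hashlib.sha256).hexdigest()
    return {**body, "signature": tag}


def verify_request(envelope: dict, seen_nonces: set[str]) -> bool:
    if not all(k in envelope for k in (*SIGNED_FIELDS, "signature")):
        return False
    key = DEVICE_KEYS.get(envelope["device_id"])
    if key is None or envelope["nonce"] in seen_nonces:
        return False  # unknown device, or a replay of an earlier request
    body = {k: envelope[k] for k in SIGNED_FIELDS}
    expected = hmac.new(key, canonical(body), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, envelope["signature"]):
        return False  # payload altered in transit, or signed with a different key
    seen_nonces.add(envelope["nonce"])
    return True


seen: set[str] = set()
call = {"method": "tools/call", "params": {"name": "list_tickets"}}
req = sign_request("laptop-42", DEVICE_KEYS["laptop-42"], call)
print(verify_request(req, seen))  # True
print(verify_request(req, seen))  # False: replayed nonce

tampered = sign_request("laptop-42", DEVICE_KEYS["laptop-42"], call)
tampered["payload"] = {"method": "tools/call", "params": {"name": "export_all_data"}}
print(verify_request(tampered, set()))  # False: signature no longer matches
```

The point of the sketch is the shape of the check: anything injected into the request after it leaves the device breaks the signature, and a request from an unregistered machine has no key to sign with.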

Fascinating. And obviously it's a hard question, because there is no definitive answer. Security is always a cat-and-mouse game where new things come out, and then we have to come up with new security protocols and new ways to address them. I love the device concept.

I wonder, though, about AI agents and a lot of the tools we're now using. For example, I have a whole computer on my desk that just runs ChatGPT's Atlas browser, and I tell it to go do things for me all day. It's clicking around, and I kind of keep an eye on it, but it's doing stuff. It saves me a ton of time. It's amazing. But a lot of people have raised the concern that you could go to a website with hidden text that the agent reads, and that text directs it to do something you don't want it to do.

In that case, the request could still be coming from your laptop, inside the quote-unquote secure network. So there are these prompt injections, and they can be pretty crazy. When you weigh the net security vulnerabilities from AI against the net positive outcomes, because it can be used for both, where do you see the scales tipping?

Do you think we'll get to a point where this is pretty secure? Are you more concerned or excited at this point?

No, I'm super excited. I think it's going to make us ten times more productive as a species.

Totally. Yeah.

So I'm very bullish on AI, but I think you're absolutely right that in addition to verifying devices, there need to be security guardrails. There needs to be an access management system around that AI to make sure it can't get to things it's not supposed to get to, and that it checks in with you before it does something crazy like deleting databases. We need the same kinds of controls and fine-grained access management that we see in the API world in the AI world as well.

And that's just a matter of time. We just need to build that. So I think it's completely possible. It's going to happen that we're all gonna get more productive and happier and able to build more cool things, but we need to do so in a safe way, right?

Because if AI gets a bad rap because it gets breached all the time, then people are gonna get down on it and stop using it, and we don't want that to happen.
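The guardrails Sarah describes, fine-grained scopes plus a human check-in before destructive actions, can be sketched in a few lines. This is a hypothetical illustration: the `verb:resource` scope format, the set of destructive verbs, and the `ask_human` callback are assumptions, not any particular product's API.

```python
from dataclasses import dataclass
from typing import Callable

# Verbs that always need a human check-in, even when the agent holds the scope (assumed list).
DESTRUCTIVE_VERBS = {"delete", "drop", "truncate"}


@dataclass(frozen=True)
class Action:
    verb: str      # e.g. "read", "update", "drop"
    resource: str  # e.g. "db:orders"


def authorize(action: Action, scopes: set[str], ask_human: Callable[[Action], bool]) -> tuple[bool, str]:
    """Fine-grained check for one agent action; returns (allowed, reason)."""
    if f"{action.verb}:{action.resource}" not in scopes:
        return False, "out of scope"
    if action.verb in DESTRUCTIVE_VERBS and not ask_human(action):
        return False, "human declined"
    return True, "allowed"


# Usage: an agent scoped to read and drop one table, with a human who says no.
agent_scopes = {"read:db:orders", "drop:db:orders"}
asked: list[Action] = []


def decline_everything(action: Action) -> bool:
    asked.append(action)
    return False


print(authorize(Action("read", "db:orders"), agent_scopes, decline_everything))   # (True, 'allowed')
print(authorize(Action("drop", "db:orders"), agent_scopes, decline_everything))   # (False, 'human declined')
print(authorize(Action("update", "db:users"), agent_scopes, decline_everything))  # (False, 'out of scope')
print(len(asked))  # 1
```

The scope check runs first, so the human is only interrupted for destructive actions the agent is otherwise permitted to take; everything out of scope is denied without asking.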

A hundred percent. Yes. I love my second monitor that runs 24/7. It's amazing. Now, say a company wants to roll out an internal AI assistant, right?

There's a bunch of them. I'm talking about Atlas, but there are other ones, and some of them are connected to your email, your documents, your code. What are the top safety steps you'd recommend putting in place before rolling something like that out?

Yeah. So it's important to have an audit trail if you have something like that, particularly if you're a regulated company, like a healthcare or finance company, where regulators are going to come back and say, hey, we need a record of everything that happened here, and we need to know what was accessed in your system and by whom. When you have a chatbot sitting in the middle, sometimes the audit logs will just say the chatbot went and got some piece of information, and they won't be able to trace it all the way back to a human. So one of the most important things you can do as you deploy agents in your environment is to make sure you have an auditable trail of which human, on which device, was attempting which action in your environment, whether it was allowed or denied, and why.

Having that layer in the middle keeps all the productivity you get from your agents, but it gives you a layer of auditability you can use later for forensics if something bad happens, and for your own peace of mind.
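Here is a minimal sketch of the audit record Sarah describes: which human, on which device, through which agent, attempted which action, whether it was allowed or denied, and why. The field names and the in-memory list of JSON lines are assumptions for illustration; a real deployment would write to append-only, tamper-evident storage.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    human: str      # the person on whose behalf the agent acted
    device: str     # the verified device the request came from
    agent: str      # the chatbot or agent that made the call
    action: str
    resource: str
    decision: str   # "allow" or "deny"
    reason: str
    timestamp: str  # UTC, ISO 8601


def record(log: list[str], **fields: str) -> AuditEvent:
    """Append one JSON line per decision so every agent call traces back to a human and a device."""
    event = AuditEvent(timestamp=datetime.now(timezone.utc).isoformat(), **fields)
    log.append(json.dumps(asdict(event), sort_keys=True))
    return event


def events_for(log: list[str], human: str) -> list[dict]:
    # Forensics query: everything done on behalf of one person.
    return [e for e in map(json.loads, log) if e["human"] == human]


# Usage with made-up identities and resources.
audit_log: list[str] = []
record(audit_log, human="jaden", device="laptop-42", agent="support-bot",
       action="read", resource="crm:customer/123", decision="allow", reason="in scope")
record(audit_log, human="sarah", device="laptop-7", agent="support-bot",
       action="export", resource="crm:all", decision="deny", reason="out of scope")
print([e["decision"] for e in events_for(audit_log, "sarah")])  # ['deny']
```

Recording the human and device alongside the agent is what closes the gap she mentions, where logs only show that "the chatbot" fetched something.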

And I can even imagine using AI to help you conduct the forensics: it goes through the audit layer, reads everything, and flags anything suspicious, like prompt injections. So I think, net, AI is the solution here. I love that. I think that's definitely a fantastic solution. What are some of the attack paths, or maybe mistakes, that people don't think about? We talk about prompt injections or stolen tokens, but what are some of the things that can cause real problems for AI systems?

Yeah. We've definitely seen stolen tokens. We've seen prompt injection. We've seen malicious agents. We've seen products on the black market for phishing and spam that are as good as corporate email products, that let you do A/B testing and multi-level campaigns. It's just like sitting in a marketing manager's office, only it's an evil marketing manager.

Oh no.

But some of the newer ones, which we haven't seen in the wild yet, are malicious MCP servers and MCP phishing. Someone tells you, hey, I'm the Linear server, go install me on your computer. From there, they can do remote code execution, and they can access your LLM's memory. So we're very concerned that users won't be able to tell the difference. Users today already have trouble discriminating: when a page says log in with Google, they think, okay, well, Google must be safe. The same thing is going to happen here, where it says, oh, you should connect your Claude Code to this magical MCP server that does all these wonderful things. And it might even do all those wonderful things, but it might also exfiltrate all your data out of your environment.
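A defense against the "I'm the Linear server, install me" scenario is to decide which MCP servers may be connected before the user is ever asked. The sketch below is hypothetical throughout: the registry, the `.example` hostname, and the simplified tool list are made up, and real MCP tool listings carry more fields (such as descriptions and input schemas) than the names shown here. It checks that a server claiming an approved name lives on the approved host over HTTPS and advertises the same tools it was reviewed with.

```python
import hashlib
import json
from urllib.parse import urlparse


def tools_fingerprint(tools: list[dict]) -> str:
    # Hash of the (simplified) tool list the server advertises, serialized deterministically.
    return hashlib.sha256(json.dumps(tools, sort_keys=True).encode()).hexdigest()


# Hypothetical registry an IT team might maintain: the host each approved server must
# live on, and a pinned fingerprint of the tools it advertised when it was reviewed.
APPROVED_TOOLS = [{"name": "list_issues"}, {"name": "update_issue"}]
REGISTRY = {
    "linear": {"host": "mcp.linear.example", "tools_sha256": tools_fingerprint(APPROVED_TOOLS)},
}


def may_connect(claimed_name: str, server_url: str, advertised_tools: list[dict]) -> bool:
    entry = REGISTRY.get(claimed_name.lower())
    if entry is None:
        return False  # not an approved server at all
    url = urlparse(server_url)
    if url.scheme != "https" or url.hostname != entry["host"]:
        return False  # right name, wrong place: likely an impostor
    # Reject if the tool surface has changed since the server was reviewed.
    return tools_fingerprint(advertised_tools) == entry["tools_sha256"]


print(may_connect("Linear", "https://mcp.linear.example/mcp", APPROVED_TOOLS))           # True
print(may_connect("Linear", "https://linear-mcp.attacker.example/mcp", APPROVED_TOOLS))  # False
print(may_connect("Linear", "https://mcp.linear.example/mcp",
                  APPROVED_TOOLS + [{"name": "read_memory"}]))                           # False
```

The idea is to take the "does this look legitimate?" judgment away from the individual user, who, as Sarah points out, will struggle to tell a real server from a convincing fake.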