The Future of Hacking is AI

Key takeaways

- 39% of people said they would fall victim to at least one phishing scam generated by ChatGPT.

- 21% of people said they would fall victim to AI-generated social media posts asking for their information, the highest rate of any scam we tested.

- 49% of people said they would be tricked into downloading a fake ChatGPT app; previous victims of app scams were most likely to be deceived.

- 13% of people have used AI to generate passwords.

Automating hacking

Like water finding its way into cracks and crevices, hackers constantly devise new ways to breach computer systems and steal sensitive information. The advent of AI technology like ChatGPT promises to make their work easier by automating some of hacking’s most complex processes.

To find out how susceptible people are to the hacking capabilities of ChatGPT, we prompted it to generate various fake messages, apps, and passwords. We then surveyed 1,009 Americans to see how many would have fallen for these AI-powered scams. This article will examine our survey findings to determine how easy it is to be hacked and what you can do to protect yourself.

AI goes phishing

The phishing and scam messages ChatGPT wrote took a variety of approaches, from sob stories to customer loyalty offers. Survey respondents reviewed the scams, admitted whether or not they would fall victim, and told us what tipped them off to the fraud.

Over half of respondents (51%) said AI was a threat to cybersecurity, and our ChatGPT-generated phishing scams proved how right they may be. Overall, 39% of those surveyed thought they would fall for at least one of the ChatGPT-generated hoaxes. The most successful of our automated hacks were a social media post scam and a text message scam. The social media post asked for personal information so a store could reward the user for their loyalty; 21% of respondents said they would have fallen for it. The text message scam asked bank customers to verify their information due to suspicious activity on their account, and 15% of people said they would be fooled.

Those keen enough to avoid falling victim said the biggest tipoffs to scams were suspicious links and unusual requests, such as those asking for personal information. You can also use behavior analytics tools to detect potential risks and employ phishing-resistant and passwordless multi-factor authentication (MFA) technology. With phishing attacks rising in frequency, these ChatGPT frauds showed why it’s more important than ever to be vigilant against such possible breaches.

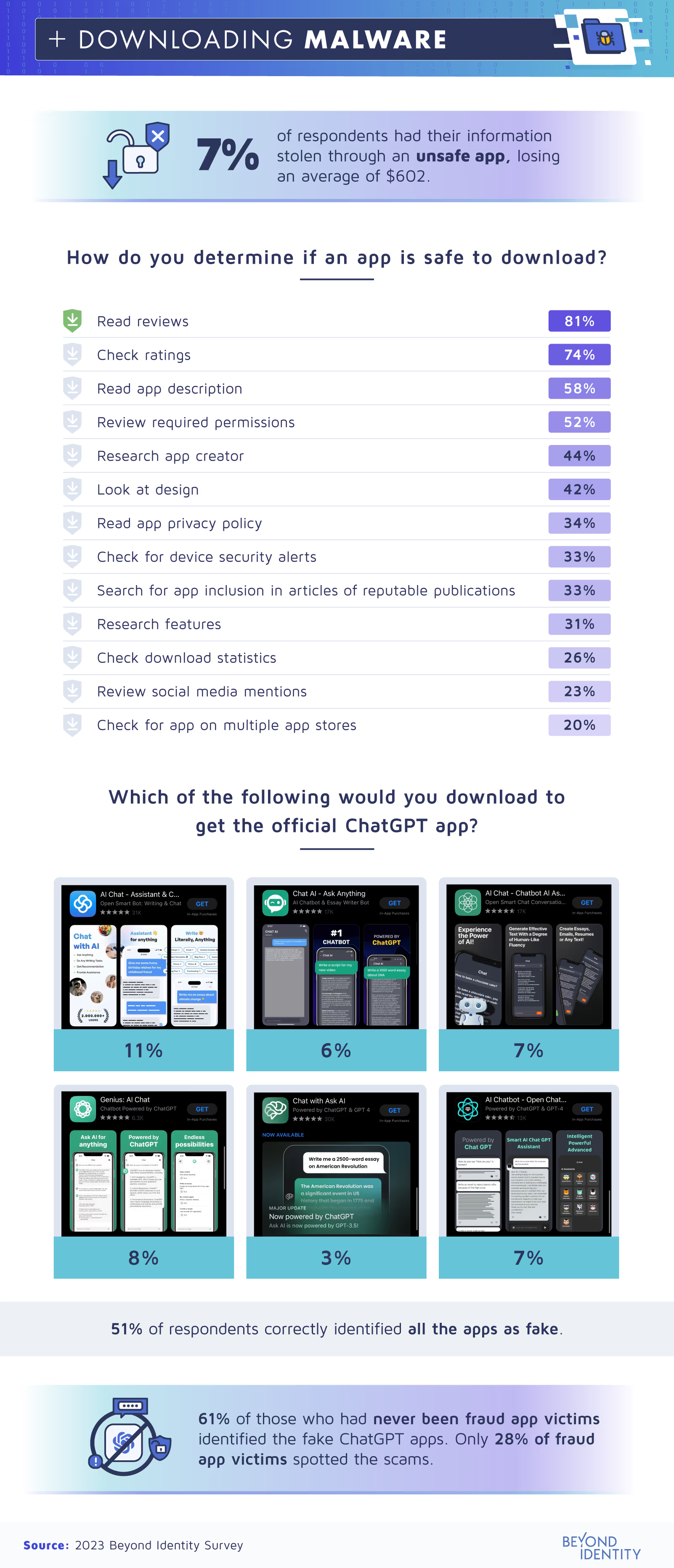

Attacking apps

In addition to taking proactive, concrete measures to protect your data, it’s important to be on guard against the threat of unsafe software apps. Fake or unsecured apps can install malware on your device, wreaking havoc on your computer or network.

When asked whether they’d ever had their information stolen from an unsafe app download, 93% of respondents said no. To test scam app detection skills, we presented respondents with six real apps offering products similar to ChatGPT and asked whether they would download any of them. Overall, 49% of respondents said they would have been deceived by one of the fake ChatGPT apps. Of those who had avoided such scams before, 61% successfully avoided the hoax apps, but only 28% of previous fraud app victims avoided the scams.

Some users are concerned about data collection in general, and not just by fraudulent apps. Apps, phone software, websites, and the like often collect personal information, location data, contact lists, and more. The extensive breadth of data collection has drawn scrutiny from the U.S. government, with tech companies like TikTok facing regulations or outright bans over national security concerns.

Cracking the code

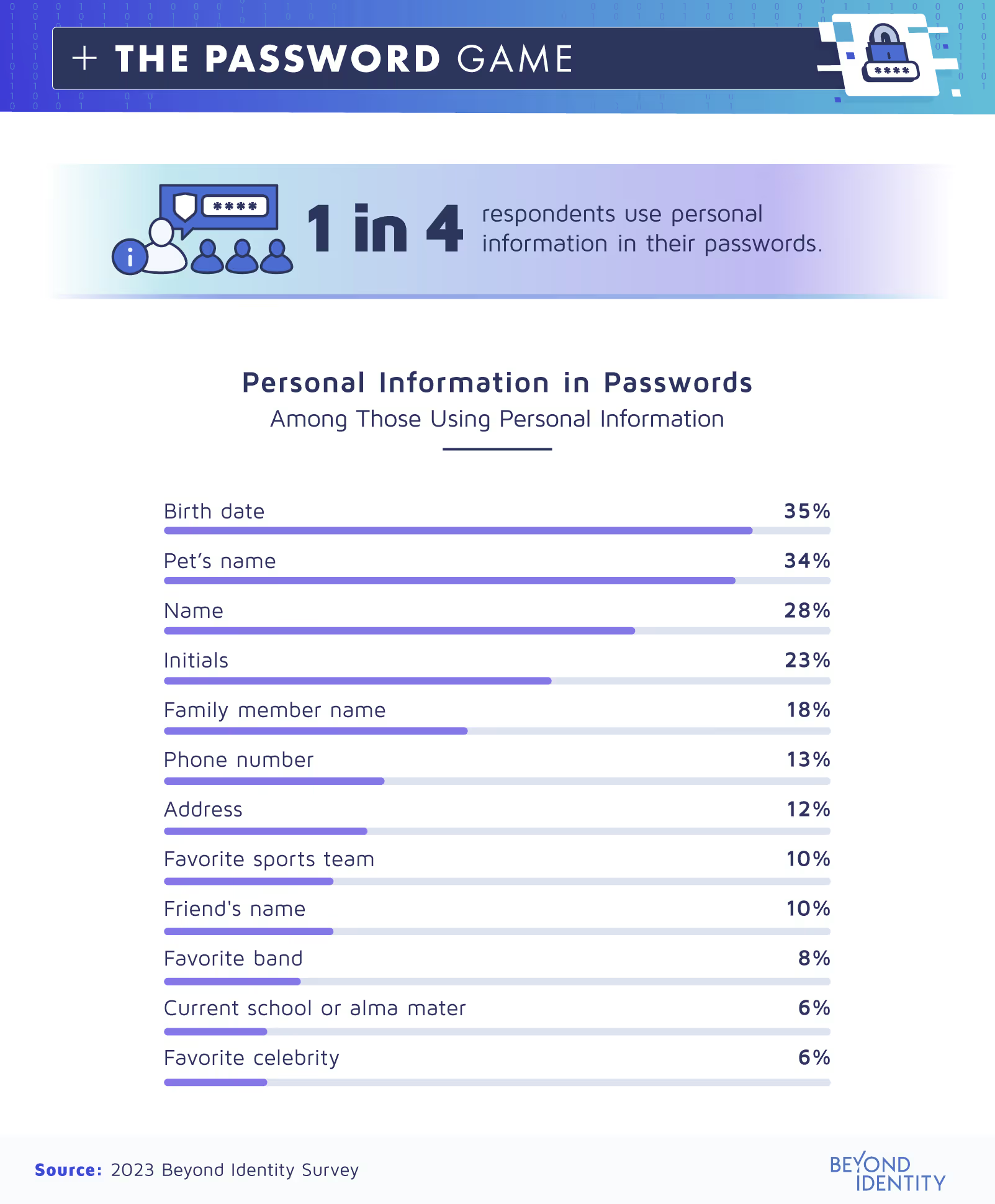

One way hackers steal information is by guessing passwords from personal details gathered through social engineering tactics. To analyze this vulnerability, we had ChatGPT generate a list of passwords based on a fake user profile, and another list of random passwords. Let’s compare the two and see what they can tell us about our respondents’ password security.

Fortunately, only one in four survey respondents used personal information in their passwords; the one-quarter who did were most likely to use their birthday (35%), pet’s name (34%), or their own name (28%). All of the personal information users included in their passwords is easy to find online, from social media accounts and business profiles to phone listings and location data. To test the security of such passwords, we created a false identity using the same kinds of details respondents included in their actual passwords:

- Birth date: August 10th, 1995

- Pet’s Name: Luna

- Name: Emily Smith

- Initials: E.S.

- Family member names: James Smith, Sarah Smith, David Smith

- Phone number: (555) 555-1212

- Address: 123 Main Street, Anytown, USA

- Favorite sports team: New York Yankees

- Friend’s name: Rachel Johnson

- Favorite band: Imagine Dragons

- Alma mater: University of California, Los Angeles (UCLA)

- Favorite celebrity: Jennifer Lawrence

With the profile data on hand, we fed it to ChatGPT and asked it to generate a list of passwords for our fake user:

- Luna0810$David

- Sarah&ImagineSmith

- UCLA_E.Smith_555

- JSmithLuna123!

- YankeesRachel*James

- EmilySmith$Lawrence

- DavidSmith#123Main

- ImagineUCLA_Rachel

- Yankees0810Smith!

- Sarah&DJLawrence

The passwords ChatGPT generated for our fake Emily Smith illustrated how easy it is for hackers to guess passwords built from personal information with the help of AI. With just a quick scan of social media and a short prompt to ChatGPT, hackers have a list of probable passwords. Hackers have used this tactic, often grouped under social engineering, for years. By finding out seemingly innocuous information about the target, bad actors can string together probable passwords and use them to try to breach valuable accounts.
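The same reasoning can be turned around for defense: if a password contains details that appear in a public profile, it is a likely guess. The Python snippet below is a minimal sketch of that check, not a tool used in the survey. The `PROFILE` dictionary reuses a few fields from the fake Emily Smith identity, and the function names `profile_tokens` and `personal_tokens_in` are hypothetical, chosen only for illustration.

```python
import re

# Hypothetical profile built from the fake "Emily Smith" identity above.
PROFILE = {
    "name": "Emily Smith",
    "pet": "Luna",
    "birth_date": "0810",  # August 10th written as MMDD (an assumption)
    "family": "James Sarah David",
    "team": "Yankees",
    "band": "Imagine Dragons",
    "school": "UCLA",
}

def profile_tokens(profile, min_len=3):
    """Split every profile value into lowercase tokens of at least min_len characters."""
    tokens = set()
    for value in profile.values():
        for part in re.split(r"[^A-Za-z0-9]+", value):
            if len(part) >= min_len:
                tokens.add(part.lower())
    return tokens

def personal_tokens_in(password, profile):
    """Return the sorted profile tokens that appear anywhere in the password."""
    lowered = password.lower()
    return sorted(t for t in profile_tokens(profile) if t in lowered)

print(personal_tokens_in("Luna0810$David", PROFILE))
print(personal_tokens_in("Sj23OglDLk!204I2Z3-", PROFILE))
```

A non-empty result means the password leans on information an attacker could plausibly look up. An empty result only means this simple substring check found nothing; it does not prove the password is strong.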

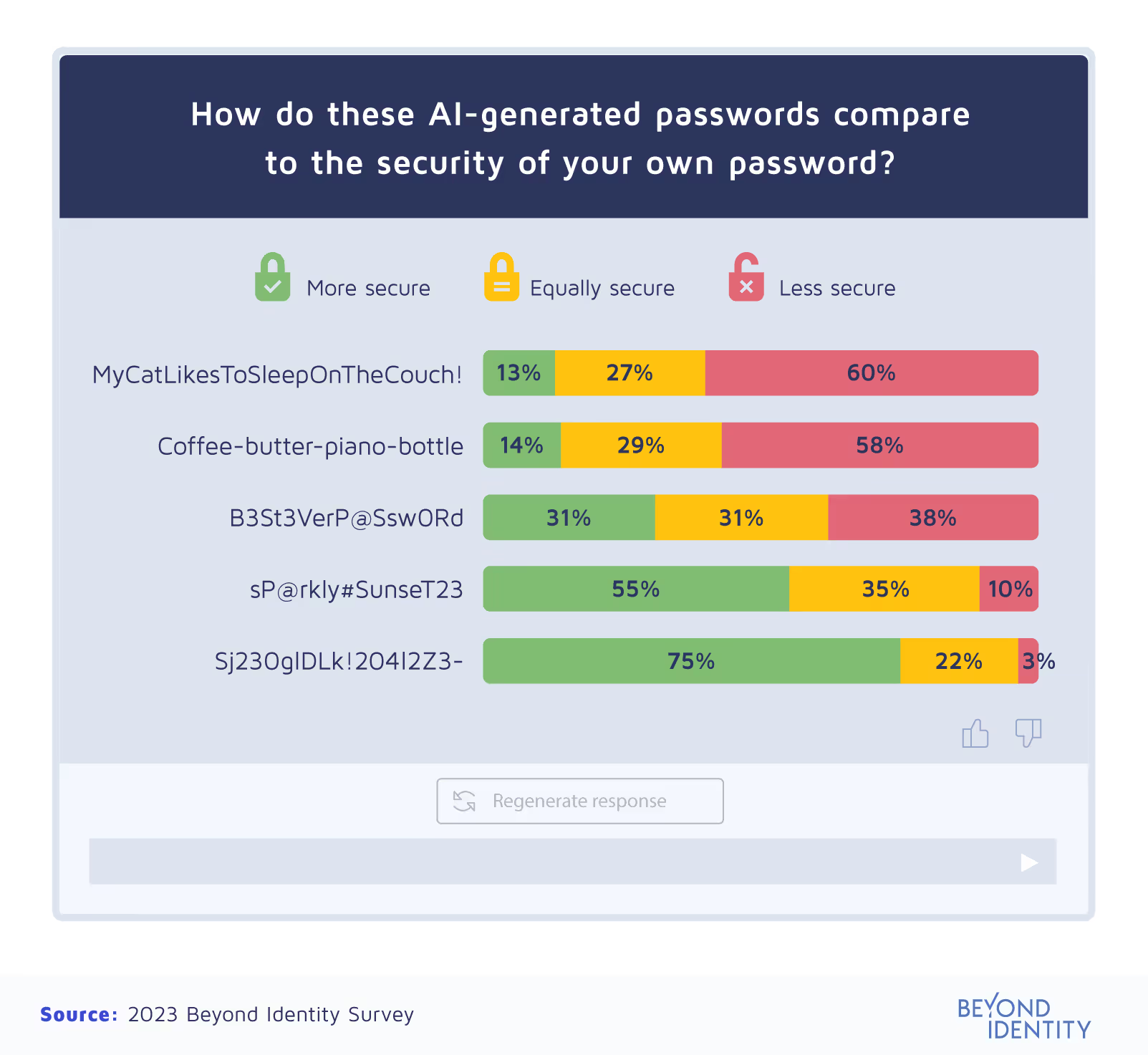

Instead of using easily-found personal information, you can use completely random information to create passwords. We had ChatGPT generate a few random passwords, then asked respondents to compare the security of their real-life passwords to those on the list.

Of the random passwords ChatGPT created, only two were voted more secure than respondents’ own passwords by a majority: sP@arkly#SunseT23 (55%) and Sj23OglDLk!204I2Z3- (75%). While a long string of random characters like the second might appear impossible to remember, users can make such passwords easier to recall by turning them into a passphrase.
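For readers who want to see what random generation looks like in practice, here is a minimal Python sketch using the standard library's `secrets` module, which is designed for security-sensitive randomness. It is an illustration under stated assumptions rather than the method ChatGPT used: the eight-word `WORDS` list is a tiny stand-in (a real list should hold thousands of words), and the helper names are hypothetical.

```python
import math
import secrets
import string

# Tiny illustrative word list (an assumption); real lists hold thousands of words.
WORDS = ["sparkly", "sunset", "river", "copper", "maple", "orbit", "velvet", "harbor"]

def random_password(length=19):
    """Draw each character uniformly from letters, digits, and punctuation."""
    alphabet = string.ascii_letters + string.digits + string.punctuation
    return "".join(secrets.choice(alphabet) for _ in range(length))

def random_passphrase(n_words=4, words=WORDS, sep="-"):
    """Join randomly chosen words into a passphrase that is easier to remember."""
    return sep.join(secrets.choice(words) for _ in range(n_words))

def entropy_bits(choices, picks):
    """Bits of entropy when each of `picks` items is drawn uniformly from `choices` options."""
    return picks * math.log2(choices)

print(random_password())    # 19 characters, the same length as Sj23OglDLk!204I2Z3-
print(random_passphrase())  # four words from the sample list
print(entropy_bits(8, 4))   # four picks from the 8-word sample list
```

The `entropy_bits` helper shows why the size of the word list matters: four words drawn from only eight options give very little entropy, so a passphrase is only as strong as the list it is drawn from and the number of words chosen.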

But in the end, passwords remain a critical vulnerability for organizations. Longer passwords full of random characters may seem stronger, but the moment a hacker breaches a database storing passwords, all the extra exclamation points and capital letters won’t do any good.

Though 13% of people said they used AI to generate passwords, users can take security beyond so-called strong passwords and employ phishing-resistant and passwordless multi-factor authentication (MFA) technology. These technologies completely eliminate the need for a password and are significantly more secure than password protection or two-factor authentication.

The future of hacking

Hackers keep discovering new methods for stealing sensitive data, and AI technology can make it even easier for them to breach your security. From phishing scams and fraudulent apps to data collection and password cracking, users need to be vigilant in guarding their data. Anti-virus software, VPNs, and phishing-resistant and passwordless MFA are just a few safeguards available. With the right tools and a bit of caution, you can keep your data locked up tight.

Methodology

We asked ChatGPT to create phishing, spear phishing, whaling, smishing, angler phishing, and Bitcoin scam example emails, texts, and posts. We also asked ChatGPT to create password combinations when given a possible combination of personal information. Additionally, we surveyed 1,009 Americans. Among these, 51% were men, 47% were women, and 2% were nonbinary. Also, 12% were baby boomers, 26% were Gen Xers, 52% were millennials, and 10% were Gen Z.

About Beyond Identity

Beyond Identity is a leading provider of passwordless, phishing-resistant MFA. By replacing passwords with Universal Passkey Architecture, we provide clients with a Zero Trust Authentication solution that’s consistent across devices, browsers, and platforms.

Fair use statement

Feel free to share this article with anyone you’d like for noncommercial purposes only, but please provide a link back to this page so readers can access our full findings and methodology.
